Enhancing Reasoning in Language Models

Large Language Models (LLMs) such as ChatGPT, Claude, and Gemini have shown impressive reasoning abilities, particularly in mathematics and coding. The introduction of GPT-4 has further increased interest in improving these reasoning skills through advanced inference techniques.

Challenges of Self-Correction

A significant challenge is enabling LLMs to identify and correct their errors, known as self-correction. Although models can refine their responses using external reward signals, this method can be computationally intensive, as it often requires running multiple models simultaneously. Research indicates that accuracy can improve even when feedback comes from proxy models. However, current LLMs face difficulties in self-correcting without external guidance, as their intrinsic reasoning alone is often inadequate.

Innovative Approaches

Recent studies have explored using LLMs as evaluators, where they generate their own reward signals through instruction-following mechanisms instead of relying on pre-trained reward functions. Researchers from the University of Illinois Urbana-Champaign and the University of Maryland, College Park, have investigated self-rewarding reasoning, allowing models to generate reasoning steps, evaluate their correctness, and refine responses independently.

Two-Stage Framework

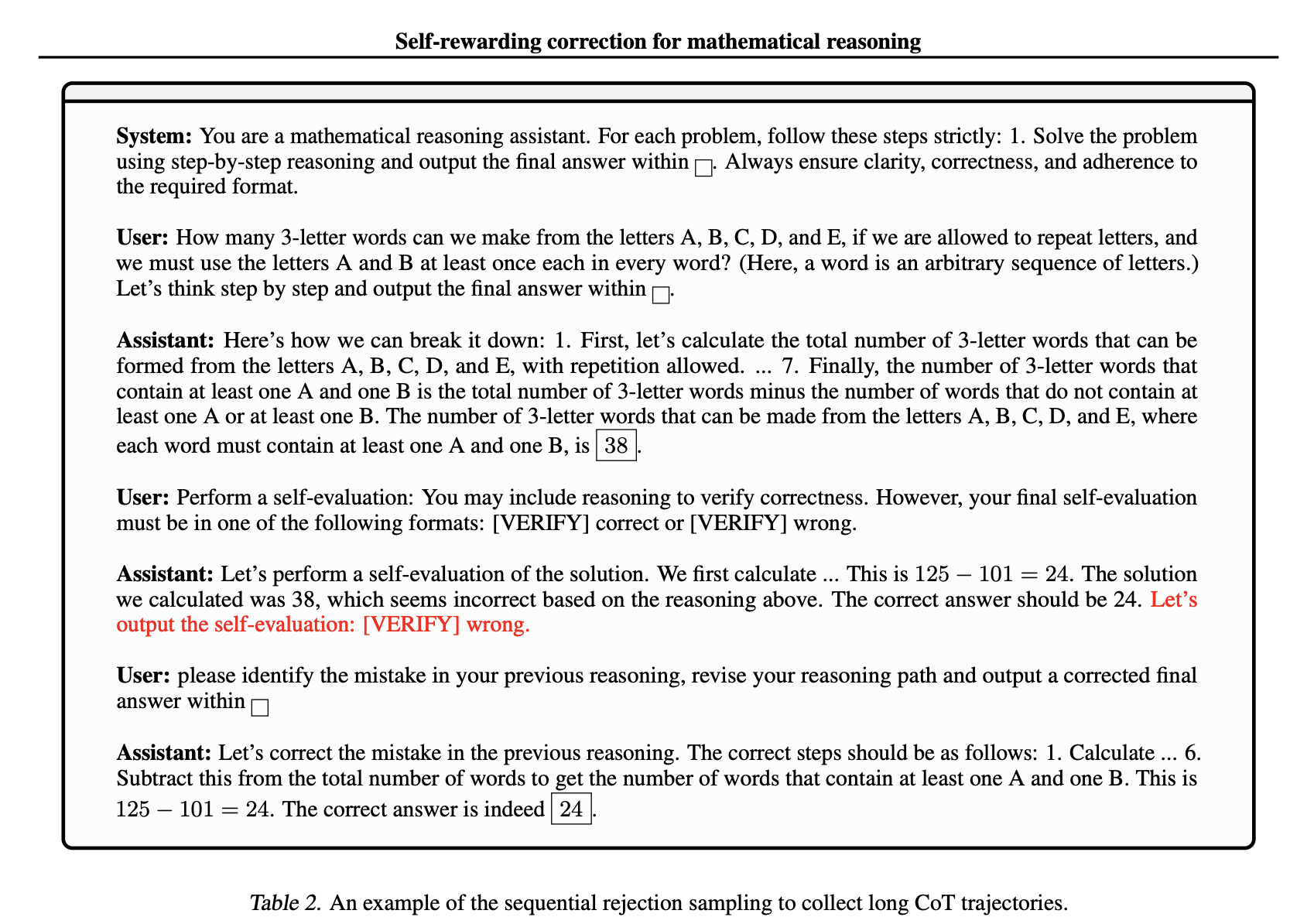

The proposed framework involves a two-stage process. Initially, sequential rejection sampling constructs long chain-of-thought (CoT) trajectories that embed self-rewarding and self-correction behaviors. The second stage involves fine-tuning the models using reinforcement learning with rule-based signals. Experiments have shown that this method enhances self-correction capabilities and matches the effectiveness of models dependent on external rewards.

Multi-Turn Markov Decision Process

Self-rewarding reasoning is conceptualized as a multi-turn Markov Decision Process (MDP). The model generates an initial response and assesses its accuracy. If the response is correct, the process concludes; if not, the model iteratively refines its answer. The training framework comprises self-rewarding instruction fine-tuning (IFT) and reinforcement learning (RL), optimizing correctness assessments through KL-regularized training.

Evaluation and Results

The study evaluates mathematical reasoning models using datasets like MATH500 and OlympiadBench. It assesses performance through metrics such as accuracy improvements and self-correction efficiency. Traditional methods often lead to unnecessary changes and lower accuracy. In contrast, self-rewarding reasoning models consistently enhance performance while minimizing errors. Fine-tuning on self-generated corrections significantly boosts the model’s ability to rectify mistakes without overcorrecting.

Conclusion and Future Directions

The study presents a self-rewarding reasoning framework that improves self-correction and computational efficiency in LLMs. By merging self-rewarding IFT and reinforcement learning, the model can detect and refine errors using internal feedback. Future advancements aim to tackle reward model accuracy challenges and explore multi-turn reinforcement learning methods.

Practical Business Solutions

Explore how artificial intelligence can revolutionize your business processes:

- Identify business processes suitable for automation.

- Pinpoint customer interaction points where AI can add value.

- Establish key performance indicators (KPIs) to measure the effectiveness of AI investments.

- Select customizable tools that align with your business objectives.

- Start with a pilot project to gather data, then gradually expand your AI initiatives.

Contact Us

If you require assistance in managing AI in your business, reach out to us at hello@itinai.ru. Follow us on Telegram, X, and LinkedIn.