Evaluating Multimodal Large Language Models (MLLMs) in Text-Rich Scenarios

Practical Solutions and Value:

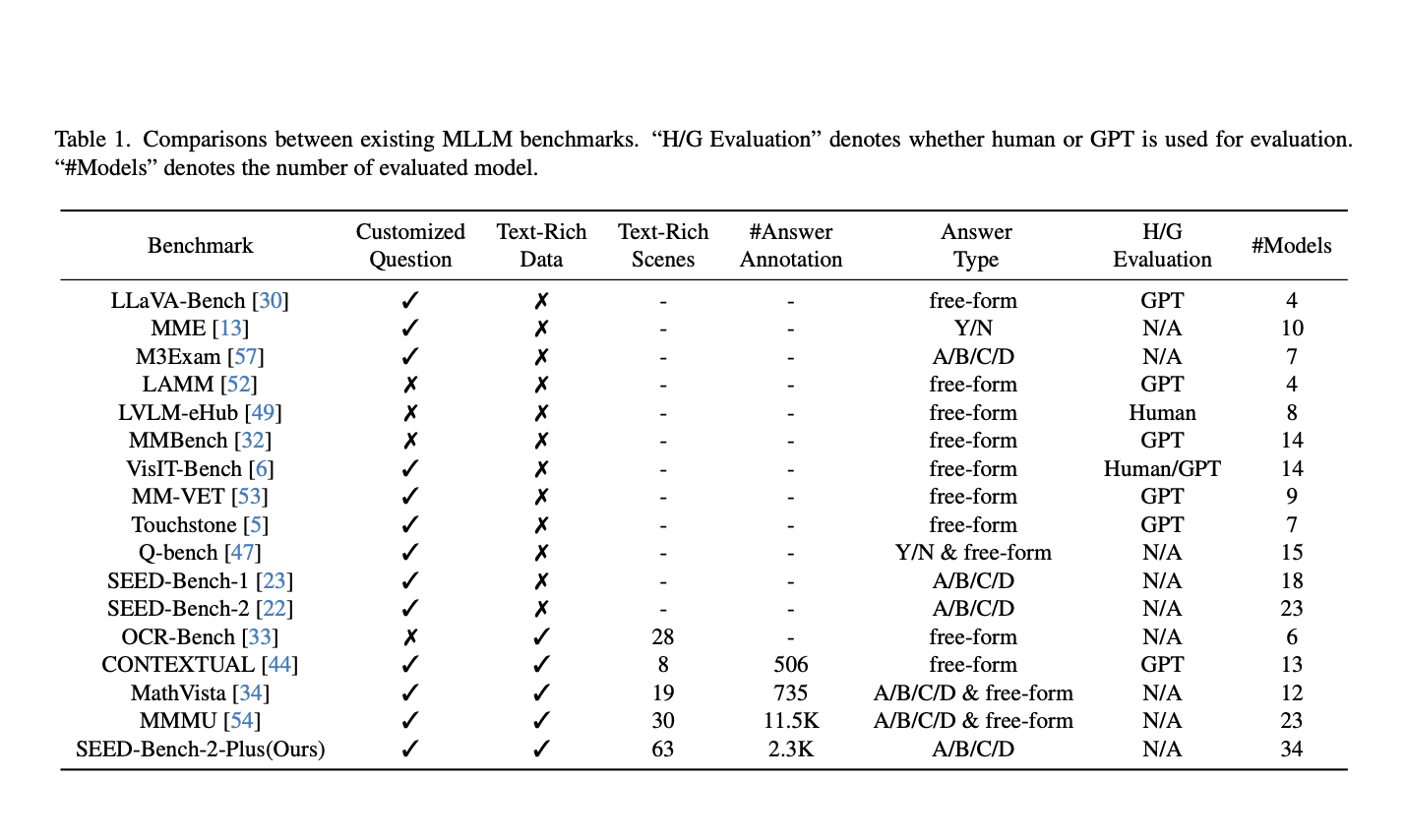

Evaluating how well MLLMs understand text-rich visual content is crucial for their practical application. SEED-Bench-2-Plus is a benchmark developed specifically for this purpose. It consists of 2.3K human-annotated multiple-choice questions covering real-world scenarios.

SEED-Bench-2-Plus addresses a gap in how MLLMs are evaluated: their ability to understand text embedded in images. Unlike existing benchmarks, it covers a broad spectrum of real-world text-rich scenarios, which makes it a useful tool for objective evaluation and for tracking progress in this domain.

The dataset is curated from charts, maps, and website screenshots that are rich in textual information, and it spans 63 data types across these three broad categories. Human annotators ensure the accuracy of the questions and answers, and models are scored with an answer ranking strategy.
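To make the answer ranking strategy concrete, here is a minimal Python sketch. It assumes the common formulation in which the model assigns a score (for example, a log-likelihood) to each candidate answer and the highest-scoring choice is taken as the prediction. The names `rank_answers` and `toy_log_likelihood` are hypothetical, and the toy scorer only counts shared words; a real evaluation would replace it with the MLLM's likelihood of each choice given the image and the question.

```python
import re
from typing import Callable, Sequence


def rank_answers(
    question: str,
    choices: Sequence[str],
    score_fn: Callable[[str, str], float],
) -> int:
    """Return the index of the choice with the highest score.

    score_fn is an assumed stand-in for a model's likelihood of
    generating `choice` given the question (and, in practice, the image).
    """
    scores = [score_fn(question, choice) for choice in choices]
    # max() returns the first index on ties.
    return max(range(len(choices)), key=lambda i: scores[i])


def toy_log_likelihood(question: str, choice: str) -> float:
    """Hypothetical scorer: counts words shared by question and choice."""
    question_words = set(re.findall(r"\w+", question.lower()))
    choice_words = re.findall(r"\w+", choice.lower())
    return float(sum(word in question_words for word in choice_words))


question = "What is the title of the chart about annual revenue?"
choices = ["Monthly rainfall", "Annual revenue", "Population growth", "Website traffic"]
predicted = rank_answers(question, choices, toy_log_likelihood)
print(choices[predicted])
```

Ranking candidate answers by score, rather than parsing free-form generated text, means every model yields exactly one of the listed choices, so models with different output styles can be compared on the same footing.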

Results from evaluating 31 open-source and three closed-source MLLMs underscore the need for further research to improve MLLMs’ proficiency in text-rich scenarios and their adaptability across diverse data types.
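Comparing models across data types comes down to computing accuracy per category. The sketch below shows one way to aggregate multiple-choice results; the function name `accuracy_by_category`, the record layout, and the sample values are illustrative assumptions, not results or code from the benchmark.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (category, predicted choice index, correct choice index).
Record = Tuple[str, int, int]


def accuracy_by_category(records: Iterable[Record]) -> Dict[str, float]:
    """Return the fraction of correctly answered questions per category."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for category, predicted, answer in records:
        total[category] += 1
        correct[category] += int(predicted == answer)
    return {category: correct[category] / total[category] for category in total}


# Made-up records for illustration only.
sample_records = [
    ("charts", 0, 0),
    ("charts", 1, 2),
    ("maps", 3, 3),
    ("web", 2, 2),
    ("web", 1, 0),
]
per_category = accuracy_by_category(sample_records)
print(per_category)
```

Reporting accuracy per category instead of a single overall number makes it easier to see where a model struggles, for example on maps versus charts.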

SEED-Bench-2-Plus is accompanied by its dataset and evaluation code, supporting further work on text-rich visual comprehension with MLLMs. It offers a thorough evaluation platform and insights to guide future research.
