Practical Solutions for Safe AI Language Models

Challenges in Language Model Safety

Large Language Models (LLMs) can generate offensive or harmful content, a side effect of how they are trained. Researchers are working on methods that mitigate unsafe content without degrading language generation capabilities.

Existing Approaches

Current approaches to LLM safety include safety tuning, which fine-tunes the chat model itself, and guardrails, which are separate components that monitor exchanges between chat models and users.
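To make the guardrail idea concrete, here is a minimal sketch of a wrapper that screens both the user's prompt and the model's reply. The names `guarded_chat`, `toy_chat_model`, and `toy_guard` are hypothetical stand-ins for illustration. A real guard would be a trained classifier, not a keyword check.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def guarded_chat(
    prompt: str,
    chat_model: Callable[[str], str],
    is_harmful: Callable[[str], bool],
) -> str:
    """Run a chat model behind a guard that checks prompt and response."""
    # Screen the user's prompt before it reaches the chat model.
    if is_harmful(prompt):
        return REFUSAL
    response = chat_model(prompt)
    # Screen the model's output before it reaches the user.
    if is_harmful(response):
        return REFUSAL
    return response


# Toy stand-ins (assumptions, for illustration only).
def toy_chat_model(prompt: str) -> str:
    return f"You said: {prompt}"


def toy_guard(text: str) -> bool:
    return "attack" in text.lower()


print(guarded_chat("hello", toy_chat_model, toy_guard))
print(guarded_chat("plan an ATTACK", toy_chat_model, toy_guard))
```

Note that in this setup the guard is a second component running alongside the chat model, which is where the extra cost of guardrails comes from.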

Introducing LoRA-Guard

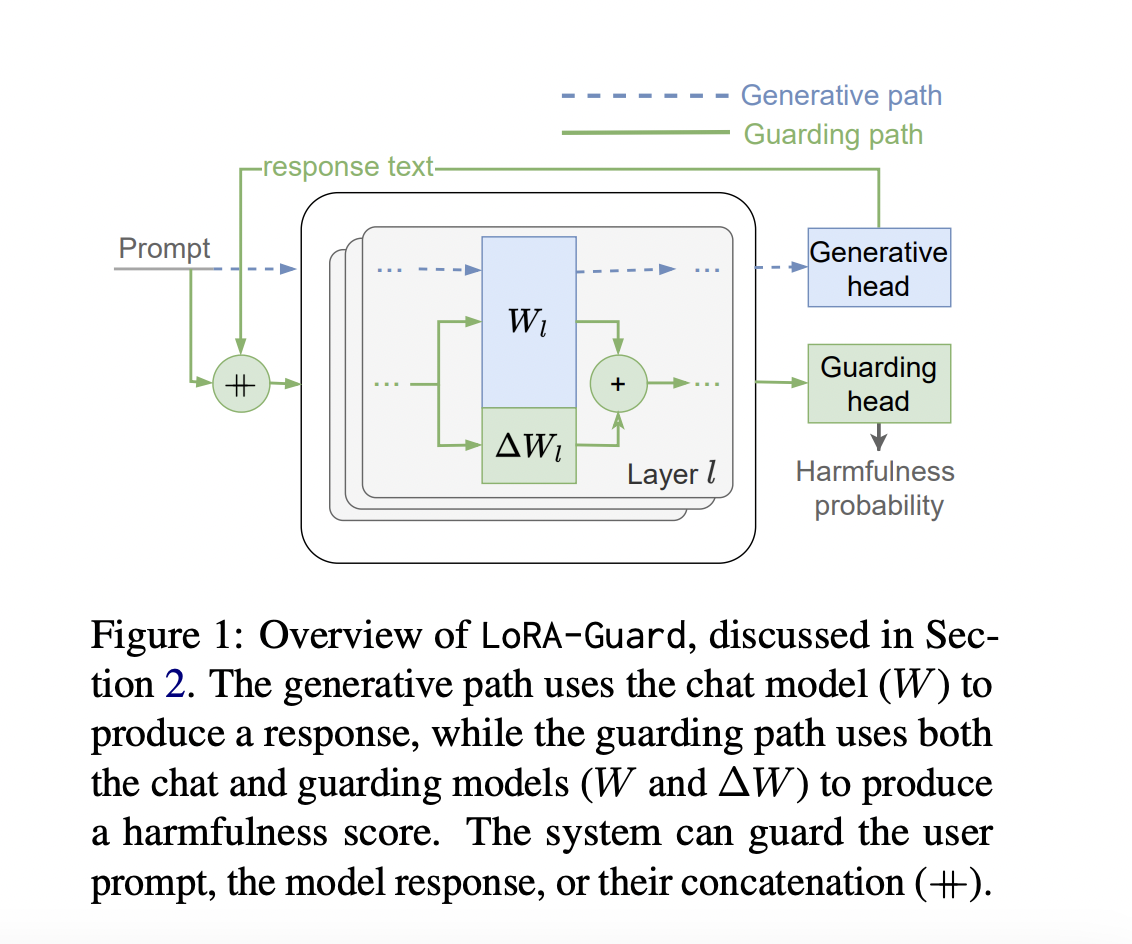

LoRA-Guard is a system that integrates chat and guard models to detect harmful content efficiently, reducing parameter overhead by 100 to 1000 times compared to previous methods.
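As a rough illustration of why low-rank adapters are small, the sketch below counts the parameters a LoRA update adds to a weight matrix versus the matrix itself. All dimensions here (`hidden`, `rank`, `num_layers`, `adapted_per_layer`) are hypothetical values chosen for the arithmetic, not figures reported for LoRA-Guard.

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in one LoRA update: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


def dense_params(d_out: int, d_in: int) -> int:
    """Parameters in one full weight matrix."""
    return d_out * d_in


# Hypothetical model shape (assumption, not taken from the source).
hidden = 4096
rank = 8
num_layers = 32
adapted_per_layer = 2  # e.g. two projection matrices adapted per layer

n_matrices = num_layers * adapted_per_layer
adapter_total = n_matrices * lora_params(hidden, hidden, rank)
dense_total = n_matrices * dense_params(hidden, hidden)
ratio = dense_total / adapter_total

print(f"adapter parameters: {adapter_total:,}")
print(f"dense parameters:   {dense_total:,}")
print(f"ratio:              {ratio:.0f}x")
```

For square matrices the ratio simplifies to `hidden / (2 * rank)`, so smaller ranks give larger savings. This compares adapters only against the matrices they modify. The overall saving against a separate guard model depends on that model's size.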

Efficient Integration and Performance

LoRA-Guard’s architecture switches between chat and guard functions within one system, and it performs strongly on multiple datasets while using significantly fewer parameters.
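One plausible way to picture the switching, assuming (as the LoRA name suggests) that the guard path adds a low-rank update to weights shared with the chat model. The source gives no implementation details, so the class `DualPathLinear`, the `guard` flag, and the toy weights are all hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class DualPathLinear:
    """Linear layer with shared weights W and an optional low-rank update B @ A."""

    def __init__(self, w: Matrix, a: Matrix, b: Matrix) -> None:
        self.w = w  # shared weights, shape (d_out x d_in)
        self.a = a  # adapter down-projection, shape (r x d_in)
        self.b = b  # adapter up-projection, shape (d_out x r)

    def forward(self, x: Vector, guard: bool) -> Vector:
        base = matvec(self.w, x)
        if not guard:
            # Chat path: output is exactly that of the original layer.
            return base
        # Guard path: add the low-rank correction B @ (A @ x).
        delta = matvec(self.b, matvec(self.a, x))
        return [y + d for y, d in zip(base, delta)]


layer = DualPathLinear(
    w=[[1.0, 0.0], [0.0, 1.0]],
    a=[[1.0, 1.0]],
    b=[[0.5], [-0.5]],
)
x = [2.0, 3.0]
chat_out = layer.forward(x, guard=False)
guard_out = layer.forward(x, guard=True)
print(chat_out)   # [2.0, 3.0]
print(guard_out)  # [4.5, 0.5]
```

Because the chat path never touches the adapter, turning the guard on or off leaves the chat model's behavior unchanged in this sketch. Only the small matrices `a` and `b` are extra.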

Advantages of LoRA-Guard

LoRA-Guard advances moderated conversational systems: it reduces guardrailing parameter overhead by 100 to 1000 times while maintaining or improving performance. This lower overhead paves the way for safer AI interactions across a broader range of applications and devices.
