Introduction to PerfCodeGen

Large Language Models (LLMs) play a crucial role in software development by generating code, automating tests, and debugging. However, the code they produce is often functionally correct but inefficient, which can lead to poor performance and higher computational costs. This is especially problematic for less experienced developers, who may rely heavily on AI-generated code. Salesforce Research introduces PerfCodeGen, a framework designed to improve both the correctness and the runtime efficiency of LLM-generated code.

What is PerfCodeGen?

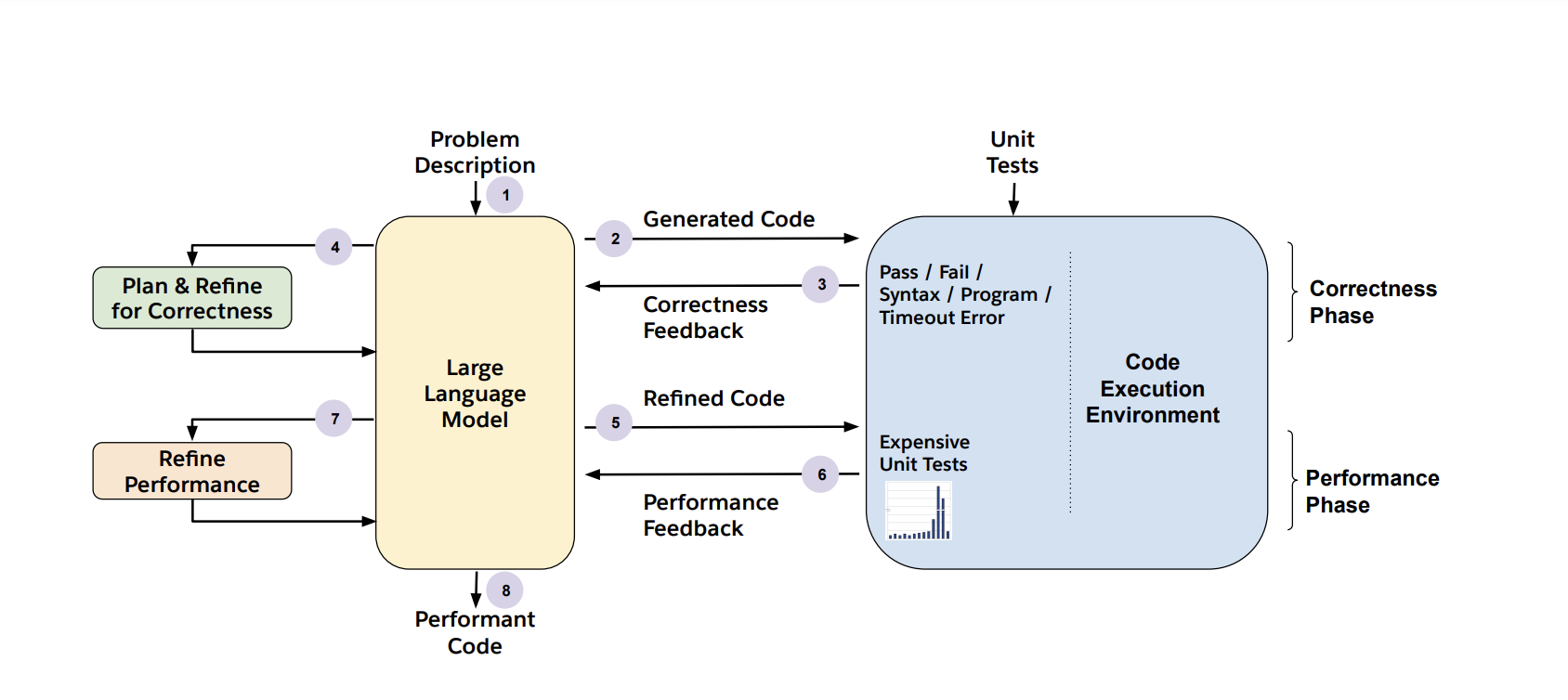

PerfCodeGen is a training-free framework that improves the runtime efficiency of LLM-generated code. Instead of requiring extensive training data, it relies on a feedback loop: the code is executed against unit tests, and the resulting execution feedback (test failures and runtime measurements) is used to refine it. The framework operates in two main phases:

1. Refining Correctness

First, PerfCodeGen ensures that the generated code meets functional requirements by fixing issues identified in unit tests.

2. Optimizing Performance

Next, it focuses on improving runtime efficiency by targeting the most resource-intensive test cases. This process results in solutions that are both correct and efficient.
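The two phases can be sketched as a small Python loop. This is a minimal sketch under stated assumptions, not PerfCodeGen's implementation: candidates are plain Python functions, tests are hypothetical `(args, expected)` pairs, and `ask_llm` is a hypothetical callable standing in for prompting an LLM with the code plus feedback and loading the code it returns. Phase 1 feeds failing tests back until everything passes; phase 2 reports the slowest test case and keeps a proposal only if it remains correct and runs faster.

```python
import time
from typing import Callable, List, Optional, Tuple

# Hypothetical test format for this sketch: (positional args, expected result).
TestCase = Tuple[tuple, object]
# Hypothetical stand-in for "prompt the LLM with code + feedback, then load
# the returned code as a Python function".
AskLLM = Callable[[Callable, str, List[str]], Callable]


def failures(func: Callable, tests: List[TestCase]) -> List[str]:
    """Run every unit test and describe each failure as feedback text."""
    messages = []
    for args, expected in tests:
        try:
            got = func(*args)
        except Exception as exc:  # a crash is also a failed test
            messages.append(f"input {args!r}: raised {type(exc).__name__}")
            continue
        if got != expected:
            messages.append(f"input {args!r}: expected {expected!r}, got {got!r}")
    return messages


def timings(func: Callable, tests: List[TestCase]) -> List[Tuple[float, tuple]]:
    """Wall-clock time per test input, slowest first."""
    rows = []
    for args, _ in tests:
        start = time.perf_counter()
        func(*args)
        rows.append((time.perf_counter() - start, args))
    return sorted(rows, key=lambda row: row[0], reverse=True)


def refine(candidate: Callable, tests: List[TestCase], ask_llm: AskLLM,
           rounds: int = 3) -> Optional[Callable]:
    # Phase 1: feed failing unit tests back until the candidate is correct.
    for _ in range(rounds):
        feedback = failures(candidate, tests)
        if not feedback:
            break
        candidate = ask_llm(candidate, "fix the failing tests", feedback)
    if failures(candidate, tests):
        return None  # still incorrect after the repair budget

    # Phase 2: point the LLM at the most expensive test case and keep a
    # proposal only if it stays correct and runs faster overall.
    for _ in range(rounds):
        secs, args = timings(candidate, tests)[0]
        hint = [f"slowest input {args!r} took {secs:.6f}s"]
        proposal = ask_llm(candidate, "make this faster", hint)
        if failures(proposal, tests):
            continue  # reject proposals that break correctness
        old = sum(t for t, _ in timings(candidate, tests))
        new = sum(t for t, _ in timings(proposal, tests))
        if new < old:
            candidate = proposal
    return candidate


# Toy run with a scripted "LLM" (hypothetical): sum of 1..n.
def buggy(n):
    return sum(range(n))          # off by one: misses n

def loop(n):
    return sum(range(n + 1))      # correct, O(n)

def formula(n):
    return n * (n + 1) // 2       # correct, O(1)

def scripted_llm(code, instruction, feedback):
    return loop if instruction.startswith("fix") else formula

TESTS = [((10,), 55), ((200_000,), 20_000_100_000)]
best = refine(buggy, TESTS, scripted_llm)
print(best.__name__)
```

Rejecting any proposal that fails a test keeps phase 2 from trading correctness for speed. A real harness would also sandbox execution and repeat timings to reduce noise.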

Technical Insights and Benefits

PerfCodeGen works with existing LLM workflows and requires no changes to the model. It starts by sampling multiple candidate solutions. In the first phase, each candidate is run against unit tests, and feedback from any failed tests is used to refine it. Once a candidate passes, the framework measures its runtime on the test cases to identify and address performance bottlenecks.
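The multi-candidate step can be illustrated in the same spirit. The sketch below assumes, hypothetically, that candidates are already loaded as Python functions: it discards any candidate that fails a unit test, times the survivors on each test input with `timeit.repeat` (keeping the best of a few runs), and reports each candidate's slowest test case, which is the kind of bottleneck signal described above. The selection rule (lowest total runtime) is an assumption, not necessarily the one PerfCodeGen uses.

```python
import timeit
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical test format for this sketch: (positional args, expected result).
TestCase = Tuple[tuple, object]
Profile = List[Tuple[float, tuple]]


def passes_all(func: Callable, tests: List[TestCase]) -> bool:
    """True if the candidate returns the expected value on every test."""
    try:
        return all(func(*args) == expected for args, expected in tests)
    except Exception:  # a crash counts as incorrect
        return False


def profile(func: Callable, tests: List[TestCase], repeat: int = 3) -> Profile:
    """Best-of-`repeat` wall time per test input, slowest (the bottleneck) first."""
    rows = []
    for args, _ in tests:
        best = min(timeit.repeat(lambda: func(*args), repeat=repeat, number=1))
        rows.append((best, args))
    return sorted(rows, key=lambda row: row[0], reverse=True)


def select_fastest(candidates: Dict[str, Callable],
                   tests: List[TestCase]) -> Tuple[Optional[str], Dict[str, Profile]]:
    """Drop incorrect candidates, profile the rest, return the fastest by total time."""
    correct = {name: f for name, f in candidates.items() if passes_all(f, tests)}
    report = {name: profile(f, tests) for name, f in correct.items()}
    if not report:
        return None, report
    fastest = min(report, key=lambda name: sum(t for t, _ in report[name]))
    return fastest, report


# Toy candidates for "does the list contain a duplicate?" (illustrative only).
def dup_quadratic(xs):
    return any(xs[i] == xs[j] for i in range(len(xs)) for j in range(i + 1, len(xs)))

def dup_set(xs):
    return len(set(xs)) != len(xs)

def dup_neighbours(xs):
    return any(a == b for a, b in zip(xs, xs[1:]))  # bug: only checks adjacent items

TESTS = [(([1, 2, 3, 1],), True), (([1, 2, 3],), False), ((list(range(1500)),), False)]
CANDIDATES = {"dup_quadratic": dup_quadratic, "dup_set": dup_set,
              "dup_neighbours": dup_neighbours}

fastest, report = select_fastest(CANDIDATES, TESTS)
print("fastest correct candidate:", fastest)
for name, rows in report.items():
    secs, args = rows[0]
    print(f"{name}: slowest test has input length {len(args[0])} ({secs:.6f}s)")
```

Taking the minimum of several timings is a common way to reduce measurement noise, since background activity can only make a run slower, never faster.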

Key Benefits of PerfCodeGen:

- Increases the likelihood of producing efficient programs.

- Mimics human debugging and optimization techniques.

- Scales across different LLMs and application domains.

- Consistently improves runtime efficiency and correctness.

Performance Results

PerfCodeGen has been tested on various benchmarks, demonstrating its effectiveness:

- Runtime Efficiency: On HumanEval, GPT-4’s optimization rate increased significantly.

- Correctness Improvement: GPT-3.5’s correctness rate rose substantially on MBPP.

- Outperforming Ground Truth: In many tasks, the refined LLM solutions ran faster than the benchmarks' ground-truth reference solutions.

- Scalability: Open models performed comparably to advanced closed models.
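The exact metric definitions are given in the paper; as a rough illustration only, the sketch below computes such rates under assumed definitions. It assumes a correctness rate is the share of tasks whose generated solution passes all tests, and an optimization rate is the share that is both correct and at least `min_speedup` times faster than the ground-truth solution. `TaskResult`, `min_speedup`, its 1.1 default, and the numbers in the example are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskResult:
    correct: bool        # generated solution passed all unit tests
    gen_runtime: float   # runtime of the generated solution (seconds)
    ref_runtime: float   # runtime of the benchmark's ground-truth solution (seconds)


def correctness_rate(results: List[TaskResult]) -> float:
    """Share of tasks whose generated solution is correct."""
    return sum(r.correct for r in results) / len(results)


def optimization_rate(results: List[TaskResult], min_speedup: float = 1.1) -> float:
    """Share of tasks whose solution is correct AND at least `min_speedup`
    times faster than the reference (the threshold is an assumption)."""
    hits = sum(1 for r in results
               if r.correct and r.ref_runtime / r.gen_runtime >= min_speedup)
    return hits / len(results)


# Made-up numbers, purely to show the arithmetic.
results = [
    TaskResult(correct=True, gen_runtime=0.50, ref_runtime=1.0),   # 2.0x faster
    TaskResult(correct=True, gen_runtime=1.00, ref_runtime=1.0),   # same speed
    TaskResult(correct=False, gen_runtime=0.10, ref_runtime=1.0),  # fast but wrong
    TaskResult(correct=True, gen_runtime=0.95, ref_runtime=1.0),   # ~1.05x faster
]
print(correctness_rate(results))         # 0.75
print(optimization_rate(results))        # 0.25
print(optimization_rate(results, 1.05))  # 0.5
```

The third task shows why the correctness check comes first: a solution that is fast but wrong never counts as optimized.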

Conclusion

PerfCodeGen addresses a major limitation of current LLMs by improving both the correctness and the runtime efficiency of the code they generate. Because its feedback-based refinement process requires no model retraining, developers can apply it to existing LLMs to produce higher-quality code. Its results across multiple benchmarks show its potential for reliable and efficient AI-driven programming.

For more information, check out the Paper and GitHub Page. Credit goes to the researchers behind this project.
