Advancements in AI with Salesforce’s APIGen-MT Framework and xLAM-2-fc-r Models

Introduction

Salesforce AI has introduced APIGen-MT, a framework for generating agent training data, and xLAM-2-fc-r, a family of models trained on that data. Together they improve how AI agents handle complex, multi-turn conversations. This matters most to businesses that depend on reliable communication and task execution in sectors such as finance, retail, and customer support.

The Challenge of Multi-Turn Conversations

Traditional chatbots often struggle with multi-turn interactions, which require maintaining context and executing tasks over several exchanges. Businesses face significant challenges in training AI agents to handle these complexities due to:

- The need for high-quality, realistic training datasets.

- The slow and costly process of manually collecting data.

- The limitations of existing models in tracking context and adapting strategies.

As a result, many AI systems fail to perform effectively in real-world scenarios, leading to errors and misalignment with user goals.

Innovative Solutions with APIGen-MT

The APIGen-MT framework addresses these challenges through a two-phase data generation pipeline:

Phase 1: Task Configuration

In this phase, a large language model (LLM) generates a structured task blueprint. Each proposed task is then checked for correctness and coherence, first by automated checks and then by a review committee of LLMs. Tasks that fail are sent back with feedback so they can be refined rather than simply discarded.
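The propose, check, review, and refine loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Salesforce's implementation: the `TaskBlueprint` fields, `automated_checks`, `committee_approves` (assumed here to be a strict majority vote), and `configure_task` are hypothetical names, and plain Python functions stand in for the generator LLM and the reviewer LLMs.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical blueprint fields; the real APIGen-MT schema may differ.
@dataclass
class TaskBlueprint:
    instruction: str       # what the simulated user wants to achieve
    actions: list[str]     # ground-truth API calls, e.g. "cancel_order(12)"
    expected_output: str   # final answer the agent should give

# A reviewer stands in for one LLM judge on the committee: True means "approve".
Reviewer = Callable[[TaskBlueprint], bool]

def automated_checks(task: TaskBlueprint, known_apis: set[str]) -> list[str]:
    """Rule-based validation; returns a list of problems (empty list = pass)."""
    problems = []
    if not task.instruction.strip():
        problems.append("empty instruction")
    for call in task.actions:
        name = call.split("(", 1)[0]
        if name not in known_apis:
            problems.append(f"unknown API: {name}")
    return problems

def committee_approves(task: TaskBlueprint, reviewers: list[Reviewer]) -> bool:
    """Assumed rule: a strict majority of reviewers must approve."""
    votes = sum(1 for review in reviewers if review(task))
    return votes > len(reviewers) / 2

def configure_task(
    propose: Callable[[list[str]], TaskBlueprint],
    reviewers: list[Reviewer],
    known_apis: set[str],
    max_rounds: int = 3,
) -> Optional[TaskBlueprint]:
    """Propose -> check -> review, feeding problems back until a task passes."""
    feedback: list[str] = []
    for _ in range(max_rounds):
        task = propose(feedback)  # generator conditioned on earlier feedback
        problems = automated_checks(task, known_apis)
        if problems:
            feedback = problems
            continue
        if committee_approves(task, reviewers):
            return task
        feedback = ["rejected by review committee"]
    return None  # no valid task after max_rounds; discard it

# --- Demo with stand-ins for the LLM calls ---
def fake_propose(feedback: list[str]) -> TaskBlueprint:
    # Uses a misspelled API on the first try, then corrects it after feedback.
    api = "get_order" if feedback else "fetch_ordr"
    return TaskBlueprint("Cancel order 12", [f"{api}(12)", "cancel_order(12)"],
                         "Order 12 has been cancelled.")

reviewers: list[Reviewer] = [
    lambda t: len(t.actions) > 0,
    lambda t: "cancel" in t.expected_output.lower(),
    lambda t: False,  # one skeptical reviewer; the majority still approves
]
task = configure_task(fake_propose, reviewers, {"get_order", "cancel_order"})
print(task)
```

In the demo, the first proposal fails the automated check because of the unknown API name, the feedback triggers a corrected proposal, and two of the three reviewers approve it. Separating cheap rule-based checks from the more expensive committee review means obviously broken tasks never reach the reviewers.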

Phase 2: Simulation of Conversations

In this phase, the validated tasks are turned into realistic dialogues between simulated users and AI agents. Only interactions whose outcomes match the task's expected results are added to the training dataset, which keeps both the dialogue flow and the executed function calls accurate.
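The simulate-then-filter idea can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `Blueprint` and `Trajectory` types, the toy order-cancellation environment in `execute`, and the acceptance rule in `matches_blueprint` (final state must equal the expected state and every ground-truth call must have been made) are hypothetical, and scripted functions replace the LLM-driven user and agent.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Call = tuple[str, int]  # (api_name, order_id)

@dataclass
class Blueprint:
    instruction: str
    expected_actions: list[Call]    # ground-truth calls the agent must make
    expected_state: dict[int, str]  # order statuses once the task is done

@dataclass
class Trajectory:
    turns: list[tuple[str, str]]    # (speaker, message)
    actions: list[Call]
    final_state: dict[int, str]

UserSim = Callable[[str, list[tuple[str, str]]], Optional[str]]
Agent = Callable[[str, dict[int, str]], tuple[str, list[Call]]]

def execute(call: Call, state: dict[int, str]) -> None:
    """Toy environment: only cancel_order changes state; get_order is read-only."""
    api, order_id = call
    if api == "cancel_order" and order_id in state:
        state[order_id] = "cancelled"

def simulate(bp: Blueprint, user: UserSim, agent: Agent,
             initial_state: dict[int, str], max_turns: int = 5) -> Trajectory:
    state = dict(initial_state)  # copy so each simulation starts fresh
    turns: list[tuple[str, str]] = []
    actions: list[Call] = []
    for _ in range(max_turns):
        user_msg = user(bp.instruction, turns)
        if user_msg is None:  # simulated user is satisfied and ends the chat
            break
        turns.append(("user", user_msg))
        reply, calls = agent(user_msg, state)
        for call in calls:
            execute(call, state)
            actions.append(call)
        turns.append(("agent", reply))
    return Trajectory(turns, actions, state)

def matches_blueprint(traj: Trajectory, bp: Blueprint) -> bool:
    """Keep a trajectory only if the end state and required actions match."""
    return (traj.final_state == bp.expected_state
            and all(call in traj.actions for call in bp.expected_actions))

# --- Demo with stand-ins for the LLM-driven user and agents ---
def scripted_user(instruction: str, turns: list[tuple[str, str]]) -> Optional[str]:
    return instruction if not turns else None  # state the goal once, then stop

def careful_agent(msg: str, state: dict[int, str]) -> tuple[str, list[Call]]:
    return "Order 12 is now cancelled.", [("get_order", 12), ("cancel_order", 12)]

def sloppy_agent(msg: str, state: dict[int, str]) -> tuple[str, list[Call]]:
    return "Done!", [("cancel_order", 21)]  # cancels the wrong order

bp = Blueprint("Please cancel order 12.",
               [("get_order", 12), ("cancel_order", 12)],
               {12: "cancelled", 21: "open"})
initial = {12: "open", 21: "open"}
candidates = [simulate(bp, scripted_user, a, initial) for a in (careful_agent, sloppy_agent)]
dataset = [t for t in candidates if matches_blueprint(t, bp)]
print(len(dataset), "of", len(candidates), "trajectories kept")
```

Both agents produce fluent replies, but only the careful agent leaves the environment in the expected state, so only its trajectory enters the dataset. Judging trajectories by their effects rather than by how plausible the text sounds is what filters out conversations that read well but do the wrong thing.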

Performance and Impact

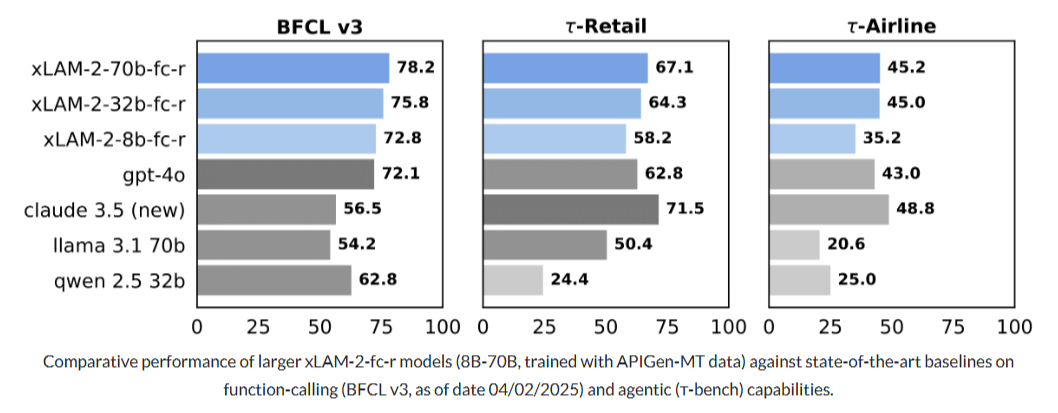

Models trained using the APIGen-MT framework, particularly the xLAM-2-fc-r series, have demonstrated superior performance in industry-standard evaluations:

- The xLAM-2-70b-fc-r model scored 78.2 in the retail domain, outperforming models such as Claude 3.5 and GPT-4o.

- In the airline domain, it scored 67.1, again surpassing GPT-4o.

- Smaller models such as xLAM-2-8b-fc-r also proved more efficient than larger models in complex interactions.

These results suggest that the quality of training data matters more than sheer model size, and they underline the value of structured feedback loops and task validation.

Scalability and Accessibility

Beyond raw performance, APIGen-MT is built for scale and broad access. Salesforce AI has released both the synthetic datasets and the models as open source, making advanced agent-training resources available to a wider community. Researchers and businesses can adapt the framework to their own domains while keeping the dialogue realism and execution checks that make the data reliable.

Conclusion

The introduction of APIGen-MT and the xLAM-2-fc-r models marks a significant step forward in training AI agents for multi-turn interactions. By focusing on realistic data generation, structured validation, and open-source access, Salesforce AI is setting a new standard for building effective AI agents across industries. Businesses adopting AI can use these advances to improve customer interactions, raise operational efficiency, and ultimately drive growth.