Practical Solutions and Value of SFR-Judge by Salesforce AI Research

Revolutionizing LLM Evaluation

SFR-Judge is a family of judge models from Salesforce AI Research that evaluate the outputs of other large language models (LLMs), with the goal of making automated evaluation more accurate and more scalable.

Bias Reduction and Consistent Judgments

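SFR-Judge is trained with Direct Preference Optimization (DPO), which teaches the model to prefer high-quality judgments over flawed ones. This training reduces common judge biases and makes its evaluations more consistent than those of traditional judge models.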
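For readers unfamiliar with DPO, here is a minimal sketch of the standard per-pair DPO loss from the original DPO formulation. It is a generic illustration, not SFR-Judge's training code: the function name `dpo_loss`, the default `beta`, and the framing of the "chosen" and "rejected" responses as a good and a flawed judgment are assumptions made for illustration.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Standard DPO loss for one preference pair (chosen vs. rejected).

    Each *_logp argument is the summed log-probability of a complete response
    (here, assumed to be a judgment) under the trainable policy or the frozen
    reference model. beta = 0.1 is an illustrative default, not SFR-Judge's value.
    """
    # How much more the policy favors each response than the reference does.
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    # Loss is -log(sigmoid(margin)) = log(1 + exp(-margin)), computed in a
    # numerically stable way for large positive or negative margins.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and the reference agree (a margin of zero), the loss is log 2; it falls toward zero as the policy shifts probability toward the chosen judgment relative to the reference, which is what pushes the model toward better judgments without training a separate reward model.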

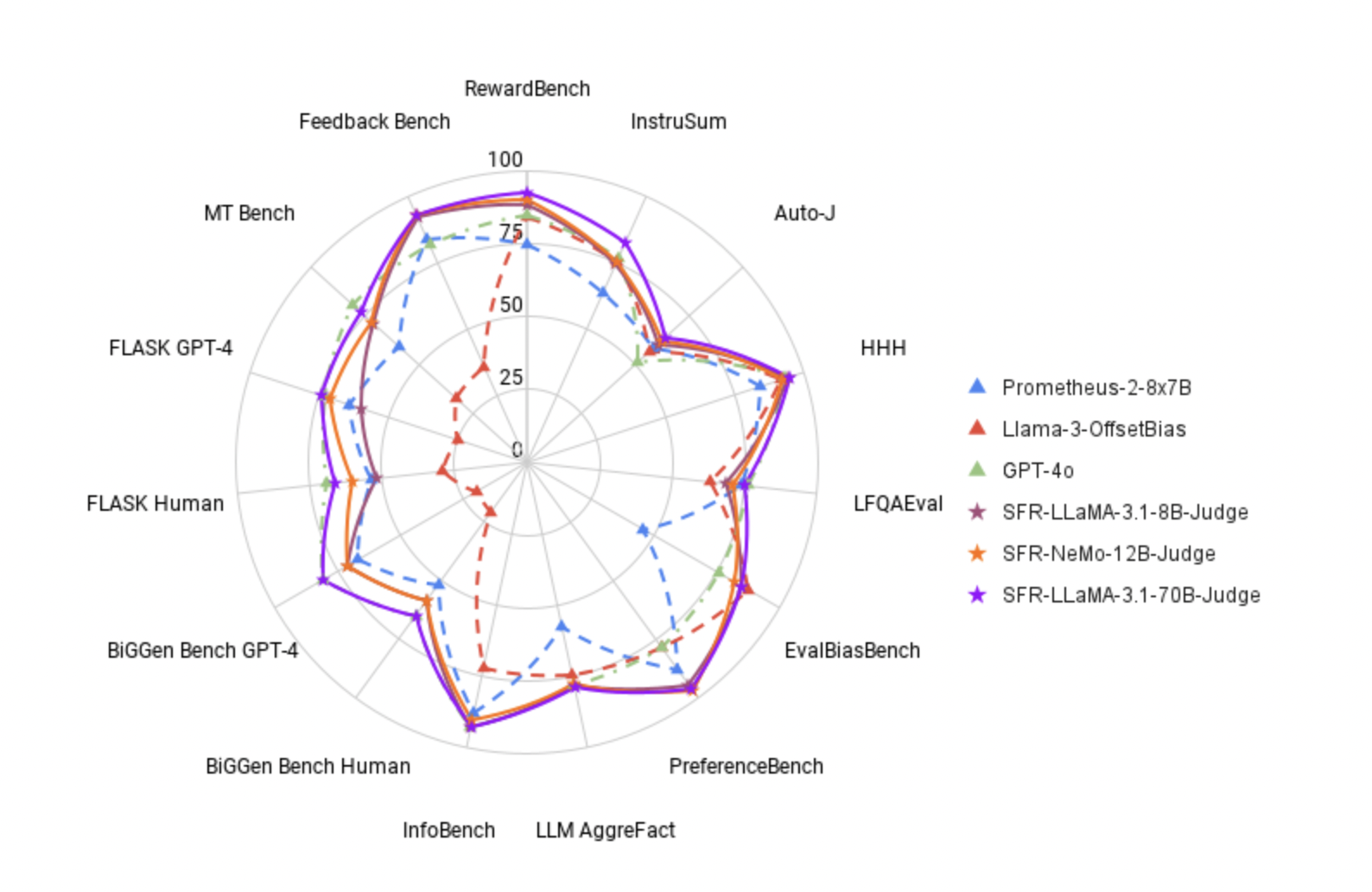

Superior Performance and Benchmark Setting

SFR-Judge outperforms existing judge models on a range of evaluation benchmarks, achieving top scores on several of them and raising the bar for automated LLM evaluation.
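Judge benchmarks of this kind typically score a model by pairwise accuracy: how often it prefers the response that human annotators marked as better. The sketch computes per-category accuracy and an unweighted average across categories. RewardBench does report results over several categories, but its exact aggregation may differ, so treat `pairwise_accuracy` and its input format as a simplified, hypothetical illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def pairwise_accuracy(
    records: Iterable[Tuple[str, bool]],
) -> Tuple[Dict[str, float], float]:
    """Per-category and macro-averaged pairwise accuracy.

    Each record is (category, picked_preferred): picked_preferred is True when
    the judge chose the response that annotators marked as better.
    """
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for category, picked_preferred in records:
        totals[category] += 1
        hits[category] += int(picked_preferred)
    if not totals:
        raise ValueError("no records to score")
    per_category = {cat: hits[cat] / totals[cat] for cat in totals}
    # Unweighted mean over categories, so small categories count as much as large ones.
    macro = sum(per_category.values()) / len(per_category)
    return per_category, macro
```

For example, three records, two in `chat` (one judged correctly) and one in `safety` (judged correctly), give per-category accuracies of 0.5 and 1.0 and a macro average of 0.75.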

Versatile Evaluation Tasks

SFR-Judge supports multiple evaluation tasks, including pairwise comparisons, single ratings, and binary classification, so it can adapt to diverse evaluation scenarios.
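Supporting several task types largely comes down to what the judge is asked to output and how that output is read. This sketch parses a final verdict for three task types: pairwise, single rating, and binary. The output convention (an explanation followed by a line such as `Verdict: A`), the 1 to 5 rating scale, and the function `parse_verdict` are hypothetical; SFR-Judge's actual prompt templates may use a different format.

```python
import re
from typing import Union

# Hypothetical output convention: explanation first, then a final line such as
# "Verdict: A" (pairwise), "Verdict: 4" (rating) or "Verdict: Yes" (binary).
VERDICT_RE = re.compile(r"^Verdict:\s*(\S+)\s*$", re.MULTILINE | re.IGNORECASE)


def parse_verdict(task: str, judge_output: str) -> Union[str, int, bool]:
    """Extract the final verdict for a 'pairwise', 'rating' or 'binary' task."""
    matches = VERDICT_RE.findall(judge_output)
    if not matches:
        raise ValueError("no verdict line found")
    raw = matches[-1]  # the last verdict line wins if the explanation quotes one
    if task == "pairwise":
        choice = raw.upper()
        if choice not in ("A", "B"):
            raise ValueError(f"invalid pairwise verdict: {raw!r}")
        return choice
    if task == "rating":
        score = int(raw)  # raises ValueError for non-integer text
        if not 1 <= score <= 5:  # assumed 1-5 scale
            raise ValueError(f"rating out of range: {score}")
        return score
    if task == "binary":
        answer = raw.lower()
        if answer not in ("yes", "no"):
            raise ValueError(f"invalid binary verdict: {raw!r}")
        return answer == "yes"
    raise ValueError(f"unknown task: {task!r}")
```

Keeping the free-text explanation separate from a machine-readable verdict line is what lets one model act both as a readable critic and as an automated scorer.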

Structured Explanations and Performance Boost

SFR-Judge produces detailed explanations alongside its judgments, and this feedback can be used to improve downstream models, for example as a reward signal in reinforcement learning.
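One common way to turn a judge into a training or selection signal is to score candidate responses and keep the best one (best-of-n sampling), or to rescale the score into a reward for reinforcement learning. This is a generic pattern, not necessarily how Salesforce applied SFR-Judge; `judge_score` is a hypothetical stand-in for any call to a judge model, and the 1 to 5 rating scale is an assumption.

```python
from typing import Callable, Sequence

# Hypothetical judge interface: returns a quality rating for (prompt, response).
JudgeScore = Callable[[str, str], float]


def best_of_n(prompt: str, candidates: Sequence[str], judge_score: JudgeScore) -> str:
    """Return the candidate the judge rates highest (the first one wins ties)."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda response: judge_score(prompt, response))


def rating_to_reward(rating: float, low: float = 1.0, high: float = 5.0) -> float:
    """Linearly rescale a rating on [low, high] to a reward in [0, 1] for RL."""
    return (rating - low) / (high - low)
```

In practice `judge_score` would prompt the judge and parse its rating; for a quick test, any toy scorer (such as one that returns the response length) is enough to exercise the selection logic.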

Reduced Bias and Scalable Automation

Because its judgments show less bias and remain stable, SFR-Judge offers a reliable way to automate LLM evaluation and reduce dependence on human annotators.
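A standard way to probe one kind of judge bias, position bias, is to ask a pairwise judge the same question twice with the responses swapped and check whether the same response wins. The sketch assumes a hypothetical `judge(prompt, first, second)` callable that returns `"A"` or `"B"`; it illustrates the check itself, not the exact bias benchmarks used to evaluate SFR-Judge.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical pairwise judge: (prompt, first_response, second_response) -> "A" or "B",
# where "A" means the response shown first is better.
PairwiseJudge = Callable[[str, str, str], str]


def _winner_index(verdict: str, swapped: bool) -> int:
    """Map a verdict back to the original response index (0 or 1)."""
    if verdict not in ("A", "B"):
        raise ValueError(f"unexpected verdict: {verdict!r}")
    shown_first = verdict == "A"
    if swapped:
        return 1 if shown_first else 0
    return 0 if shown_first else 1


def is_position_consistent(judge: PairwiseJudge, prompt: str, resp_0: str, resp_1: str) -> bool:
    """True if the same response wins regardless of presentation order."""
    winner_original = _winner_index(judge(prompt, resp_0, resp_1), swapped=False)
    winner_swapped = _winner_index(judge(prompt, resp_1, resp_0), swapped=True)
    return winner_original == winner_swapped


def consistency_rate(judge: PairwiseJudge, items: Iterable[Tuple[str, str, str]]) -> float:
    """Fraction of (prompt, resp_0, resp_1) items judged consistently."""
    results = [is_position_consistent(judge, *item) for item in items]
    if not results:
        raise ValueError("no items to check")
    return sum(results) / len(results)
```

A judge that always picks whichever response is shown first scores 0.0 on this check, while one driven by the responses' content stays consistent; a real evaluation would run the check over many prompt pairs.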

Key Takeaways

1. High Accuracy

SFR-Judge excels in accuracy, achieving top scores on benchmarks like RewardBench.

2. Bias Mitigation

Shows lower bias than other judge models, supporting fairer evaluations.

3. Versatile Applications

Supports various evaluation tasks, making it adaptable to different scenarios.

4. Structured Explanations

Trained to explain its judgments in detail, which makes evaluations less of a black box.

5. Performance Boost in Downstream Models

Enhances the outputs of downstream models, particularly useful in reinforcement learning scenarios.

Conclusion

SFR-Judge by Salesforce AI Research is a significant advance in automating the evaluation of large language models. It sets a new benchmark for LLM assessment and paves the way for further work on automated model evaluation.