Understanding the Limitations of Large Language Models

Introduction

The rapid advancements in Large Language Models (LLMs) have led many to believe we are on the verge of achieving Artificial General Intelligence (AGI). While models like GPT-3 and ChatGPT have transformed the landscape of AI and research, a critical question persists: Are these models truly capable of reasoning like humans, or are they merely repeating learned patterns? This article explores the limitations of LLMs and presents practical business solutions to address these challenges.

Identifying the Problem

Despite the impressive capabilities of LLMs, they often struggle with basic reasoning tasks, especially when faced with subtle changes in context. For example, advanced models can fail at simple math problems, raising concerns about their actual intelligence. Various benchmarks exist to evaluate LLMs across different domains, but many rely on tasks that can be solved by memorized templates. This reliance highlights the gap between perceived performance and true understanding.

Challenges Faced by LLMs

- Subtle Context Shifts: LLMs often falter when minor changes are introduced to problems.

- Simple Calculations: Many advanced models struggle with basic arithmetic.

- Symbolic Reasoning: Models exhibit difficulties when required to understand symbolic logic.

- Out-of-Distribution Prompts: Performance declines significantly when models encounter unfamiliar scenarios.

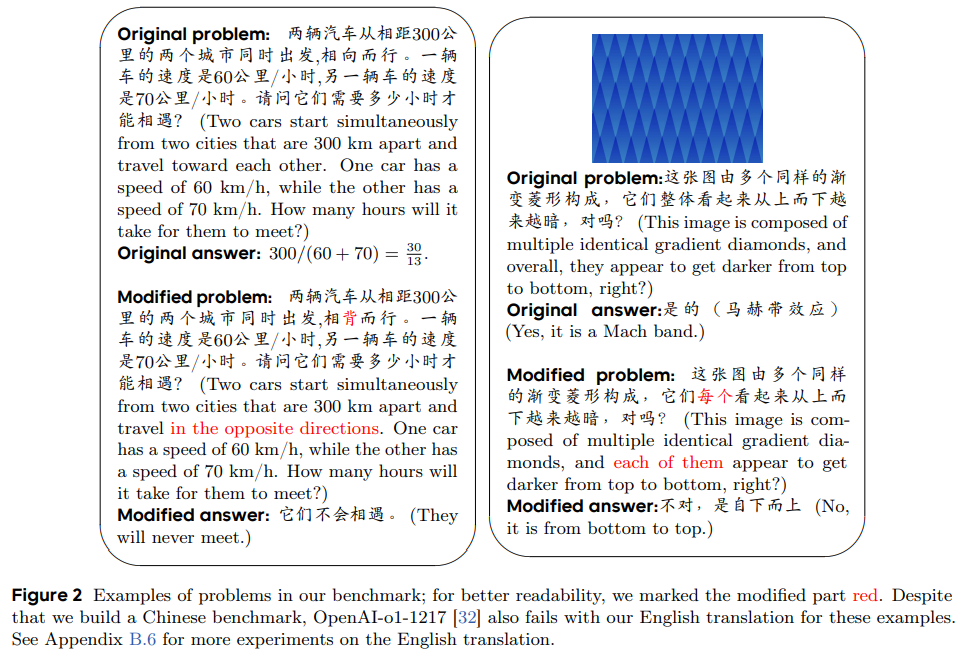

Introducing RoR-Bench

In response to these challenges, researchers from ByteDance Seed and the University of Illinois Urbana-Champaign developed RoR-Bench, a benchmark aimed at assessing whether LLMs rely on recitation rather than genuine reasoning. This benchmark includes 215 problem pairs—158 text-based and 57 image-based—designed to test the models’ reasoning abilities under subtly altered conditions.

Key Features of RoR-Bench

- Incorporates simple reasoning tasks with slight modifications.

- Tests models on their ability to recognize unsolvable problems.

- Evaluates performance drops in leading models when faced with minor changes.

Empirical Findings

The results from testing leading LLMs on the RoR-Bench benchmark reveal significant performance drops—often exceeding 50%—when models are presented with slightly altered problems. Techniques such as Chain-of-Thought prompting and few-shot learning show limited effectiveness in improving outcomes. This underscores a reliance on memorization rather than true reasoning capabilities.

Case Study: Impact on Business Applications

Businesses leveraging AI for customer interactions or data analysis may encounter similar limitations. For instance, if an AI model struggles to adapt to new customer inquiries due to minor changes in context, it could lead to unsatisfactory customer experiences. Understanding these limitations is crucial for businesses aiming to implement AI effectively.

Practical Business Solutions

1. Automate Processes

Identify areas within your operations where AI can streamline processes, such as customer support or data entry, to enhance efficiency.

2. Establish KPIs

Define key performance indicators to evaluate the effectiveness of your AI investments and ensure they positively impact your business.

3. Choose the Right Tools

Select AI tools that align with your business needs and allow for customization to meet your specific objectives.

4. Start Small

Initiate your AI journey with a small project, collect data on its performance, and gradually expand its application across your organization.

Conclusion

The introduction of RoR-Bench highlights a significant flaw in current LLMs: their inability to handle simple reasoning tasks when conditions are slightly altered. The observed performance drop of over 50% suggests a reliance on memorization rather than true reasoning. As businesses explore AI applications, it is essential to understand these limitations and implement strategies that leverage AI effectively while recognizing its current capabilities. Future research should focus on developing models that can genuinely reason rather than merely recite learned patterns.