Revolutionizing Vision-Language Tasks with Sparse Attention Vectors

Overview of Generative Large Multimodal Models (LMMs)

Generative LMMs, like LLaVA and Qwen-VL, excel at tasks that combine images and text, such as image captioning and visual question answering (VQA). However, they struggle with tasks that require predicting a specific label, such as image classification. The main issue is that it is hard to extract useful features from these models for such tasks.

Current Adaptation Methods

To adapt LMMs to these tasks, researchers often use prompt engineering, finetuning, or specialized model designs. While these methods show promise, they have limitations, including reliance on large training datasets and on task-specific features.

Introducing Sparse Attention Vectors (SAVs)

A research team from several universities and IBM has developed a new approach called Sparse Attention Vectors (SAVs). The method requires no finetuning and uses only a small subset of the model’s attention heads as features for classification. Inspired by how the brain works, SAVs use fewer than 1% of the attention heads and achieve strong classification results from just a few labeled examples.
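To put "fewer than 1%" in perspective, consider a hypothetical decoder with L = 32 layers and H = 32 heads per layer, the shape of a 7B LLaMA-style language backbone. These counts are illustrative assumptions, not figures reported for SAVs; the arithmetic only shows how small a head budget k stays under the 1% bound.

```latex
\begin{aligned}
N &= L \times H = 32 \times 32 = 1024 \text{ heads} \\
k &< 0.01\, N = 10.24 \;\Rightarrow\; k \le 10
\end{aligned}
```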

How SAVs Work

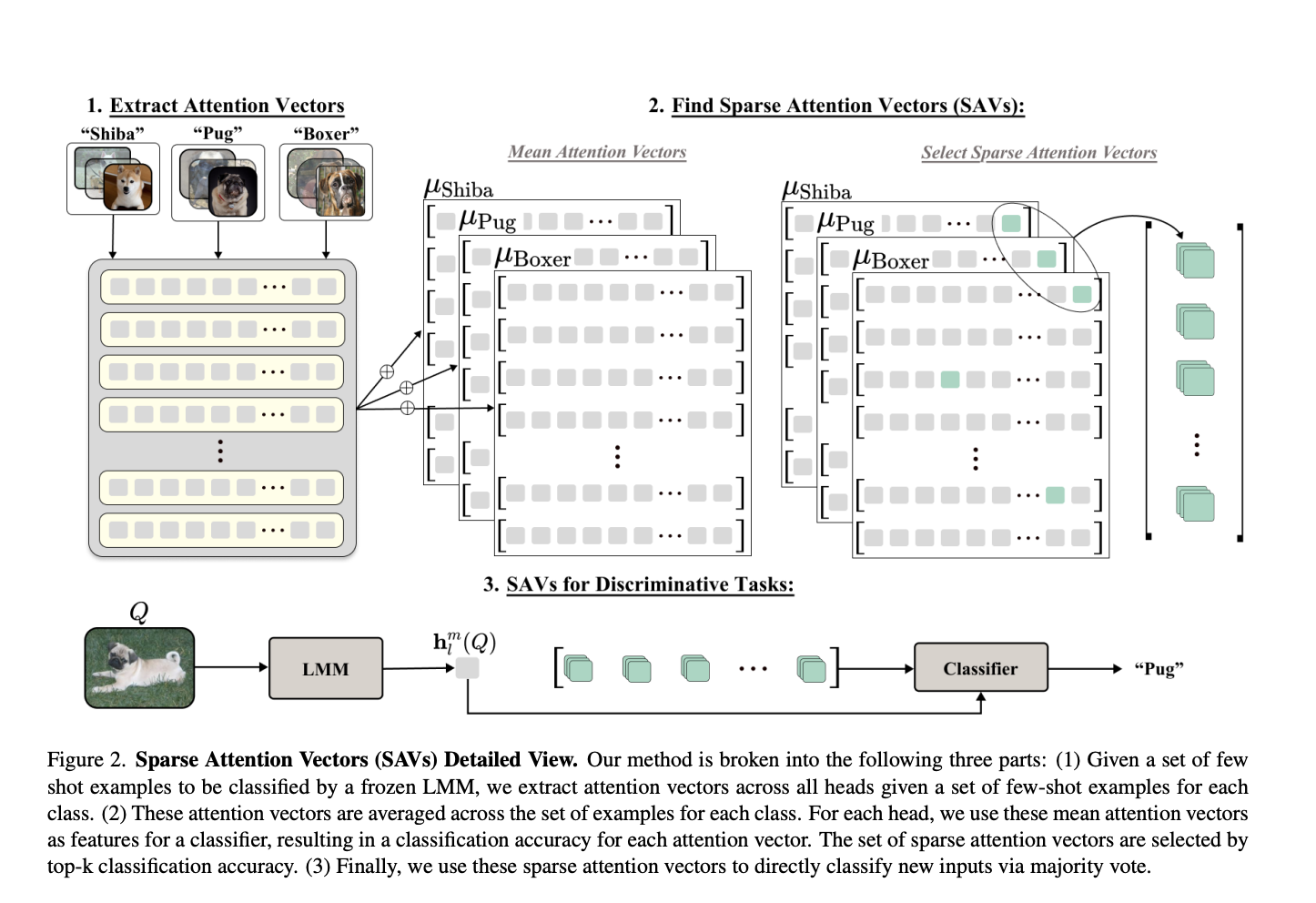

1. **Extracting Attention Vectors**: Using a small labeled dataset, attention vectors (the outputs of individual attention heads) are collected from a frozen LMM for each example.

2. **Identifying Relevant Vectors**: Each attention head is scored on how well its vectors classify the labeled examples, and only the best-performing heads are kept.

3. **Classification Using SAVs**: Predictions for new inputs are made using only the selected attention heads, which keeps classification efficient.
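The three steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the attention vectors have already been extracted (step 1) into a hypothetical layout `feats[i][h]`, scores each head by nearest-class-mean accuracy with cosine similarity on the labeled examples (step 2), and classifies a new input by majority vote over the selected heads (step 3). The function names `select_heads`, `fit`, and `predict` are hypothetical, and the paper's exact scoring rule may differ.

```python
import math
from collections import Counter

# Assumed layout (hypothetical, for illustration only):
# feats[i][h] is the attention vector that head h produced for labeled example i,
# already extracted from a frozen LMM.


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def class_means(feats, labels, head):
    """Mean attention vector of each class for a single head."""
    counts = Counter(labels)
    sums = {}
    for vecs, y in zip(feats, labels):
        acc = sums.setdefault(y, [0.0] * len(vecs[head]))
        for j, x in enumerate(vecs[head]):
            acc[j] += x
    return {y: [x / counts[y] for x in s] for y, s in sums.items()}


def nearest_class(vec, means):
    """Label whose class mean is most cosine-similar to vec."""
    return max(means, key=lambda y: cosine(vec, means[y]))


def select_heads(feats, labels, k):
    """Step 2: score each head by nearest-class-mean accuracy, keep the top k."""
    scores = []
    for h in range(len(feats[0])):
        means = class_means(feats, labels, h)
        correct = sum(nearest_class(v[h], means) == y for v, y in zip(feats, labels))
        scores.append((correct / len(labels), h))
    scores.sort(key=lambda s: (-s[0], s[1]))  # best accuracy first; ties go to the lower index
    return [h for _, h in scores[:k]]


def fit(feats, labels, k):
    """Keep only the selected heads and their class means."""
    return {h: class_means(feats, labels, h) for h in select_heads(feats, labels, k)}


def predict(model, vecs):
    """Step 3: each selected head votes for its nearest class; the majority wins."""
    votes = Counter(nearest_class(vecs[h], means) for h, means in model.items())
    return votes.most_common(1)[0][0]


# Toy data: head 0 separates the classes, heads 1 and 2 are mostly noise.
feats = [
    [[1.0, 0.0], [0.3, 0.7], [0.5, 0.5]],
    [[0.9, 0.1], [0.6, 0.4], [0.5, 0.5]],
    [[0.0, 1.0], [0.4, 0.6], [0.5, 0.5]],
    [[0.1, 0.9], [0.7, 0.3], [0.5, 0.5]],
]
labels = ["cat", "cat", "dog", "dog"]

model = fit(feats, labels, k=1)
print(list(model))  # [0]
print(predict(model, [[0.8, 0.2], [0.1, 0.9], [0.5, 0.5]]))  # cat
```

With an odd `k` on a binary task the vote cannot tie; with more classes, ties fall to whichever label `Counter.most_common` encountered first, so a real system would want an explicit tie-breaking rule. Scoring heads on the same examples used to build the class means is the simplest choice in a few-shot setting, but it can favor heads that overfit; holding out a few examples is a more robust alternative.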

Performance Evaluation

SAVs were tested on state-of-the-art LMMs and outperformed a range of baseline methods, especially at detecting inaccuracies and harmful content. They performed well on challenging datasets while requiring only a few labeled examples, which makes them practical for real-world applications.

Benefits of SAVs

– **Efficiency**: Uses fewer than 1% of the attention heads, which keeps the classifier lightweight.

– **Adaptability**: Works across different tasks with minimal labeled data.

– **Insights**: Shows which attention heads in the model contribute to classification.
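One way to use the "Insights" benefit in practice: once a handful of heads is selected, mapping them back to their layers shows where in the network the task-relevant signal sits. The sketch below assumes a hypothetical flat numbering `layer * heads_per_layer + head`; real models and toolkits may index heads differently, and the selected indices are made up for illustration.

```python
from collections import Counter

# Hypothetical indexing scheme (an assumption): heads are numbered
# flat_index = layer * heads_per_layer + head, starting from 0.


def locate_heads(selected, heads_per_layer):
    """Convert flat head indices into (layer, head) pairs."""
    return [divmod(idx, heads_per_layer) for idx in selected]


def count_heads_by_layer(selected, heads_per_layer):
    """Count how many selected heads fall in each layer."""
    return Counter(layer for layer, _ in locate_heads(selected, heads_per_layer))


selected = [70, 75, 400, 1023]  # made-up indices for illustration
print(locate_heads(selected, 32))  # [(2, 6), (2, 11), (12, 16), (31, 31)]
print(dict(count_heads_by_layer(selected, 32)))  # {2: 2, 12: 1, 31: 1}
```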

Future Directions

While SAVs are promising, they require access to the model’s internal attention activations, so they cannot be applied to models that are available only through a closed API. Future research could extend SAVs to tasks such as multimodal retrieval and data compression.

Get Involved

Check out the research paper and GitHub page for more details.