Transforming A/B Testing with AI: AgentA/B

Introduction

Effective web interface design is crucial for user engagement, especially on e-commerce and content-streaming platforms. A/B testing is a widely used method for evaluating design changes: users are split between different versions of a webpage, and their interactions are compared. However, traditional A/B testing faces significant challenges, including the need for large volumes of user traffic, slow feedback cycles, and resource constraints.

Challenges of Traditional A/B Testing

Despite its popularity, traditional A/B testing has several inefficiencies:

- High Traffic Requirements: Achieving statistically valid results often requires hundreds of thousands of user interactions, which can be infeasible for smaller websites.

- Slow Feedback Cycle: Tests often have to run for weeks or months to collect enough data, delaying decision-making.

- Resource Intensive: The number of variants that can be tested is limited by available time and staff, so many optimization opportunities go unexplored.
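To make the traffic requirement concrete, the sketch below applies the standard sample-size formula for a two-sided two-proportion z-test (normal approximation) to two conversion rates. The function name and the example rates (a 2% baseline conversion rate and a 5% relative lift) are illustrative assumptions, not figures from the AgentA/B work.

```python
from __future__ import annotations

import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_treatment: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in EACH group to detect p_control vs p_treatment with a
    two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_control + p_treatment) / 2          # average rate, used under H0
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control)
                             + p_treatment * (1 - p_treatment))
    ) ** 2
    return math.ceil(numerator / (p_control - p_treatment) ** 2)


if __name__ == "__main__":
    # Illustrative inputs: 2% baseline conversion, detecting a lift to 2.1%.
    n = sample_size_per_group(0.02, 0.021)
    print(f"{n:,} users per group, {2 * n:,} in total")
```

With these inputs the formula asks for roughly 315,000 users per group, which shows why small relative lifts on low conversion rates are hard to test on low-traffic sites.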

Innovative Solutions: AgentA/B

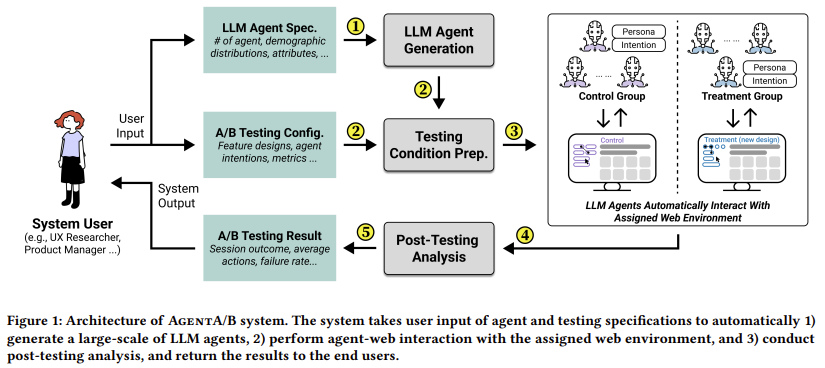

Researchers from Northeastern University, Pennsylvania State University, and Amazon have developed AgentA/B, a scalable AI system that leverages Large Language Model (LLM) agents to simulate real user behavior. This approach addresses the limitations of traditional A/B testing by enabling automated testing without the need for live user interactions.

How AgentA/B Works

The system consists of four main components:

- Persona Generation: Agent personas are generated to cover specified demographics and a diverse range of behaviors.

- Scenario Definition: Testing scenarios are defined, including the control and treatment groups and the webpage variant each group sees.

- Interaction Execution: Agents interact with real webpages through a controlled browser environment, performing actions such as searching, filtering, and making purchases.

- Result Analysis: Metrics such as clicks, purchases, and interaction durations are analyzed to evaluate design effectiveness.
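The four components can be wired together roughly as in the minimal Python sketch below. It is not the AgentA/B implementation: every class and function name is hypothetical, and the LLM agent that would browse a real page is replaced by a seeded random policy (`run_session`), so the metrics it produces say nothing about either layout. The pool of 100,000 personas and the sample of 1,000 mirror the case study below.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Persona:
    # Hypothetical attributes; a real system would generate richer profiles.
    persona_id: int
    age_group: str
    price_sensitivity: float  # 0.0 (indifferent to price) to 1.0 (very sensitive)


@dataclass
class Scenario:
    task: str                 # the goal every agent is given
    variants: dict[str, str]  # group name -> webpage variant identifier


@dataclass
class SessionLog:
    persona_id: int
    group: str
    actions: list[str] = field(default_factory=list)
    purchased: bool = False


def generate_personas(n: int, rng: random.Random) -> list[Persona]:
    """Persona generation: a pool with varied demographics and behavior."""
    ages = ["18-24", "25-34", "35-54", "55+"]
    return [Persona(i, rng.choice(ages), rng.random()) for i in range(n)]


def assign_groups(personas: list[Persona], scenario: Scenario,
                  rng: random.Random) -> dict[int, str]:
    """Scenario definition: randomly split personas evenly across the groups."""
    groups = list(scenario.variants)
    shuffled = personas[:]
    rng.shuffle(shuffled)
    return {p.persona_id: groups[i % len(groups)] for i, p in enumerate(shuffled)}


def run_session(persona: Persona, group: str, variant: str,
                rng: random.Random) -> SessionLog:
    """Interaction execution (STUB). A real agent would load `variant` and pick
    actions with an LLM; this placeholder ignores the page and acts at random."""
    log = SessionLog(persona.persona_id, group)
    for _ in range(rng.randint(2, 6)):
        log.actions.append(rng.choice(["search", "filter", "click_product"]))
    log.purchased = rng.random() > persona.price_sensitivity
    if log.purchased:
        log.actions.append("purchase")
    return log


def analyze(logs: list[SessionLog]) -> dict[str, dict[str, float]]:
    """Result analysis: per-group session count, purchase rate, mean actions."""
    summary = {}
    for group in sorted({log.group for log in logs}):
        rows = [log for log in logs if log.group == group]
        summary[group] = {
            "sessions": len(rows),
            "purchase_rate": sum(log.purchased for log in rows) / len(rows),
            "mean_actions": mean(len(log.actions) for log in rows),
        }
    return summary


def run_experiment(seed: int = 42) -> dict[str, dict[str, float]]:
    rng = random.Random(seed)
    pool = generate_personas(100_000, rng)
    sample = rng.sample(pool, 1_000)
    scenario = Scenario(
        task="find and buy a product that fits the persona's needs",
        # Illustrative variant identifiers, not real page names.
        variants={"control": "full_filter_panel", "treatment": "reduced_filters"},
    )
    assignment = assign_groups(sample, scenario, rng)
    logs = [
        run_session(p, assignment[p.persona_id],
                    scenario.variants[assignment[p.persona_id]], rng)
        for p in sample
    ]
    return analyze(logs)


if __name__ == "__main__":
    print(run_experiment())
```

Keeping each component a separate function mirrors the modular design described above: swapping the stub in `run_session` for a real browser-driving agent would leave the other three steps unchanged.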

Case Study: Practical Application

In a case study, 100,000 virtual customer personas were generated, and 1,000 of them were selected for simulation. The experiment compared two webpage layouts: one with the full filter panel and one with a reduced set of filters. The main findings were:

- Agents using the reduced-filter layout made more purchases and performed more filtering actions.

- Compared with a dataset of one million real user interactions, the LLM agents behaved more efficiently, completing tasks in fewer actions.
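One common way to check whether a gap in purchase rates, such as the one reported for the reduced-filter layout, exceeds what chance alone would produce is a two-sided two-proportion z-test. The article does not say which statistical test the researchers used, so treat this as an assumed analysis step; the function name is hypothetical, and the counts in the usage line are placeholders, not results from the study.

```python
from __future__ import annotations

import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test of H0: p_a == p_b, using the pooled standard error.

    Returns (z, p_value); a positive z means group b has the higher rate.
    Assumes independent groups and enough successes and failures in each
    for the normal approximation to hold.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all-success or all-failure data: the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Placeholder counts (purchases, sessions) for the two layouts.
    z, p = two_proportion_z_test(200, 500, 230, 500)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

Swapping the two groups flips the sign of `z` but leaves the p-value unchanged.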

Key Benefits of AgentA/B

AgentA/B offers several advantages over traditional A/B testing:

- Automated testing without the need for live user deployment.

- Ability to evaluate multiple interface changes quickly, potentially saving months of development time.

- Modular and extensible design, adaptable to various web platforms and testing goals.

- Helps address core challenges such as long testing cycles, high traffic requirements, and high experiment failure rates.

Conclusion

AgentA/B represents a significant advancement in web interface evaluation and serves as a complement to traditional A/B testing. By using AI agents to simulate user behavior, businesses can get rapid feedback, iterate on designs faster, and make data-informed decisions more efficiently. The approach also supports a more agile and responsive web design process.

For further insights on how artificial intelligence can transform your business processes, feel free to reach out to us at hello@itinai.ru or connect with us on social media.