The Power of Visual Language Models

Advancements in Language Models

Language models have progressed rapidly, driven by the transformer architecture and by scaling efforts. OpenAI’s GPT series, along with models such as Transformer-XL, Mistral, Falcon, Yi, DeepSeek, DBRX, and Gemini, has pushed their capabilities further.

Advancements in Visual Language Models

Visual language models (VLMs) have also advanced rapidly, with models like CLIP, BLIP, BLIP-2, LLaVA, InstructBLIP, Kosmos-2, and PaLI-X improving performance across a range of visual tasks.

Enhancing Visual Language Models

Recent VLM work has focused on aligning visual encoders with large language models. Researchers are also exploring VLM-based data augmentation, in which a VLM is used to enhance training datasets and thereby improve model performance.

Auto-regressive Visual Language Models

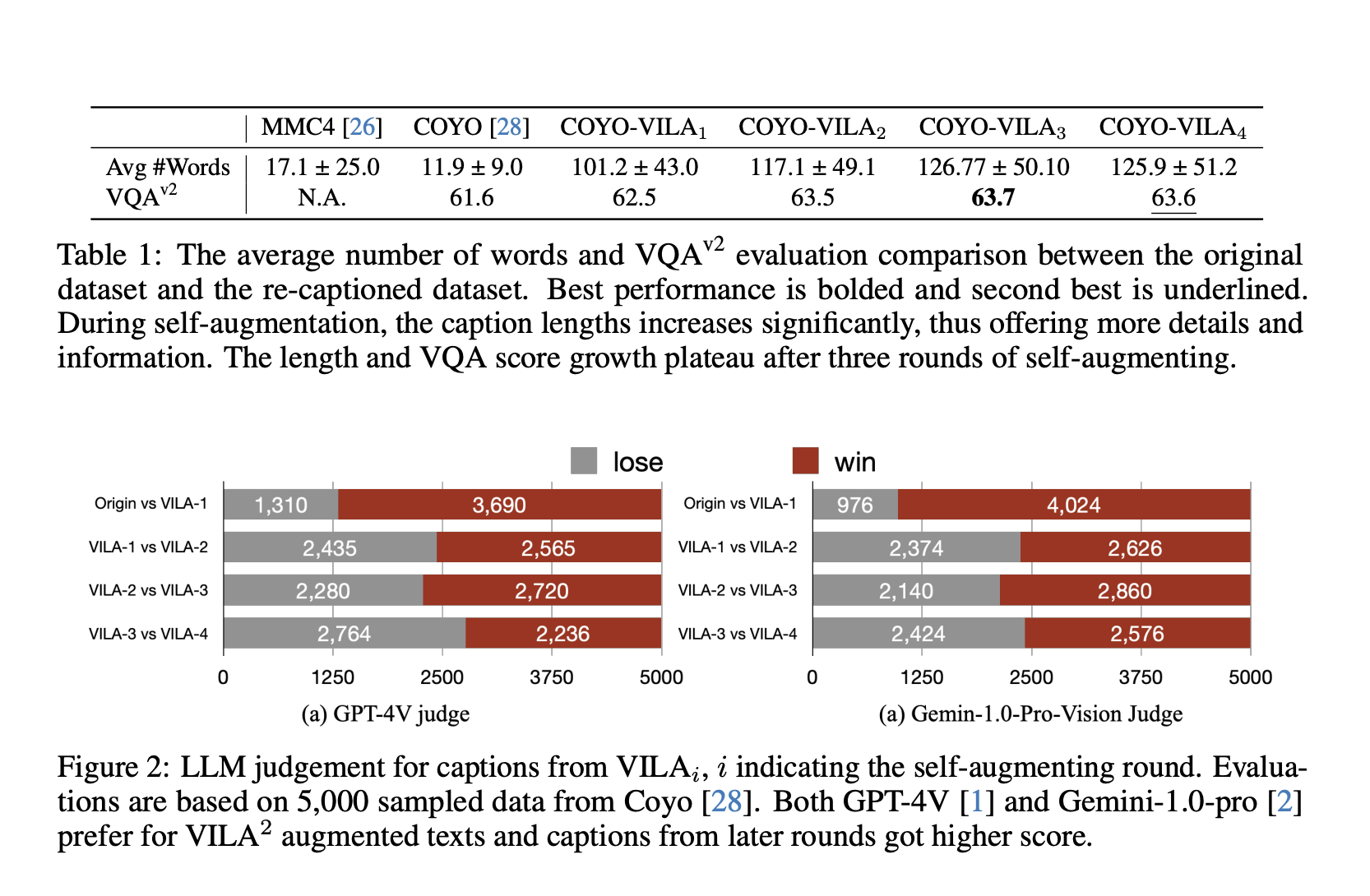

The VILA 2 research focuses on auto-regressive VLMs trained with a three-stage paradigm: align, pretrain, and supervised fine-tuning (SFT). On top of this paradigm, the methodology introduces a novel augmentation training regime that improves data quality and, in turn, VLM performance.
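To make the shape of such a regime concrete, here is a minimal Python sketch. It assumes one plausible reading of "augmentation training": a model trained with the three stages rewrites the captions of its own pretraining data, and the next round trains on the rewritten data. That reading, along with every name below (`Sample`, `train_three_stage`, `self_augment`) and the toy "recaptioning" itself, is a hypothetical stand-in for illustration and not the authors' implementation. Only the stage names come from the text.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

STAGES: Tuple[str, ...] = ("align", "pretrain", "sft")  # stage names from the text


@dataclass(frozen=True)
class Sample:
    image_id: str
    caption: str


Captioner = Callable[[Sample], str]


def train_three_stage(pretrain_data: List[Sample], round_idx: int,
                      log: List[str]) -> Captioner:
    """Hypothetical stand-in for one full align -> pretrain -> SFT run."""
    for stage in STAGES:
        # A real pipeline would update model weights here; we only record the stage.
        log.append(f"round {round_idx}: {stage} on {len(pretrain_data)} samples")

    def caption(sample: Sample) -> str:
        # Toy "recaptioning": tag the caption so the rewrite is visible.
        return f"{sample.caption} [recaptioned r{round_idx}]"

    return caption


def self_augment(data: List[Sample], rounds: int, log: List[str]) -> List[Sample]:
    """Alternate training and recaptioning for a fixed number of rounds (assumed loop)."""
    for r in range(1, rounds + 1):
        model = train_three_stage(data, r, log)
        # The freshly trained model rewrites the captions used in the next round.
        data = [Sample(s.image_id, model(s)) for s in data]
    return data


log: List[str] = []
refined = self_augment([Sample("img0", "a dog")], rounds=2, log=log)
print(refined[0].caption)
```

The point of the sketch is the loop structure: data quality and model quality are improved in alternation, so each round's training set is produced by the previous round's model.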

State-of-the-Art Performance

VILA 2 achieves state-of-the-art performance on various benchmarks, demonstrating the effectiveness of enhanced pre-training data quality and the combination of self-augmented and specialist-augmented training strategies.

Revolutionizing Visual-Language Understanding

By improving its own training data through self-augmentation and specialist augmentation, VILA 2 marks a notable step forward in visual-language understanding and reaches state-of-the-art results.
