Practical Solutions and Value of Minimal LSTMs and GRUs in AI

Enhancing Sequence Modeling Efficiency

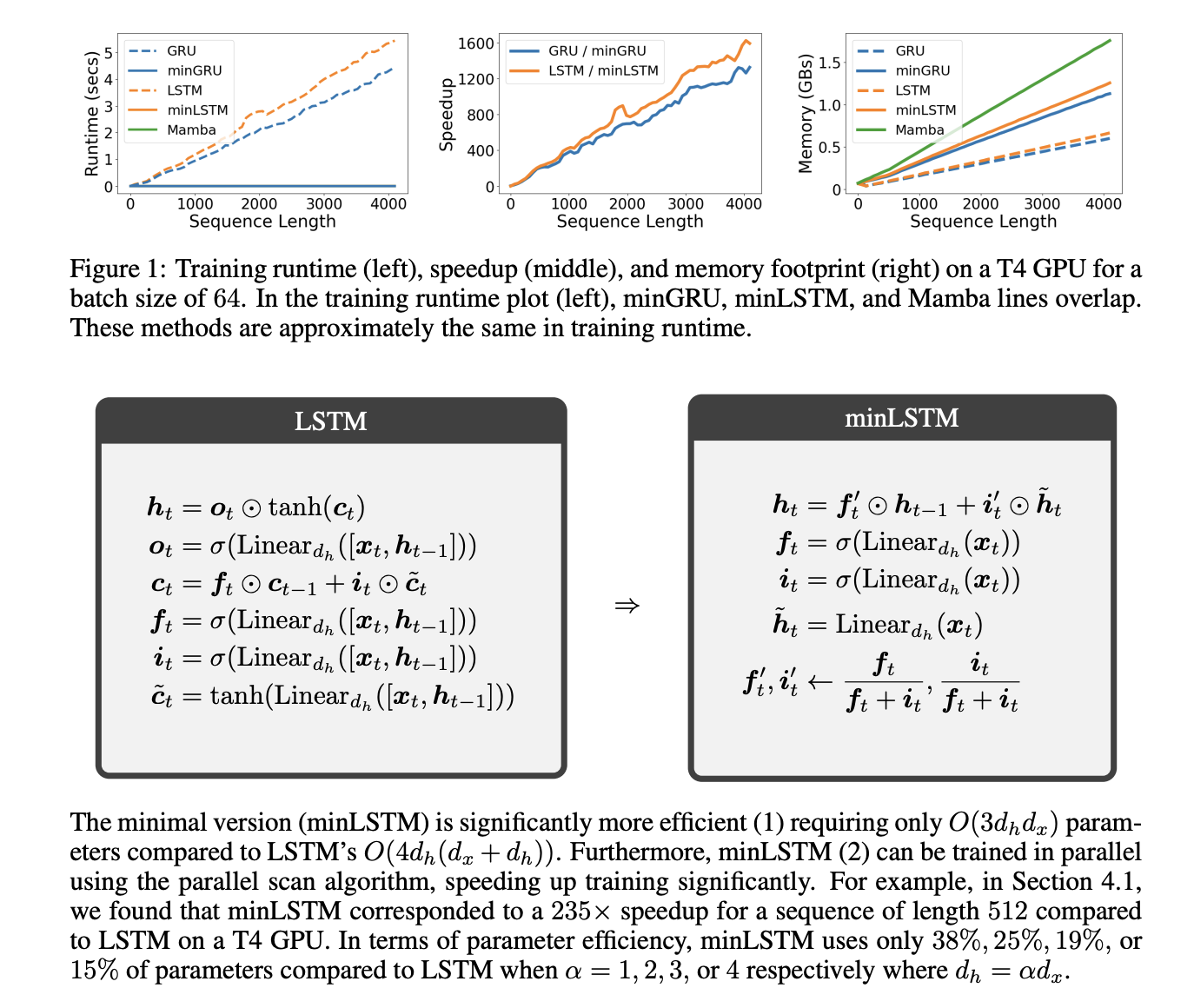

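Recurrent neural networks (RNNs) such as LSTM and GRU struggle with long sequences because each hidden state depends on the previous one, so training has to proceed step by step through the sequence (backpropagation through time).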

Transforming Sequences with Minimal Models

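Minimal versions of LSTM and GRU, named minLSTM and minGRU, simplify the gating rather than remove it: the gates are computed from the current input alone, without the previous hidden state, and the tanh range restrictions are dropped. This also shrinks the models considerably; with equal input and hidden sizes, minGRU has roughly 33% of the parameters of a standard GRU.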
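To make the simplification concrete, here is a minimal sketch of a single minGRU update in plain Python. It assumes the usual minGRU formulation (an update gate and a candidate state that are both linear functions of the input only); the function and variable names (`min_gru_step`, `Wz`, `Wh`, and so on) are hypothetical and chosen for illustration, not taken from any library.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def linear(W, b, x):
    """Affine map W @ x + b on plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def min_gru_step(x, h_prev, Wz, bz, Wh, bh):
    """One minGRU update (illustrative sketch).

    Both the gate z and the candidate h_tilde depend only on the input x,
    never on h_prev, and no tanh is applied to the candidate.
    """
    z = [sigmoid(v) for v in linear(Wz, bz, x)]   # update gate
    h_tilde = linear(Wh, bh, x)                   # candidate state
    # Convex blend of the previous state and the candidate.
    return [(1.0 - zi) * hp + zi * ht for zi, hp, ht in zip(z, h_prev, h_tilde)]

# Toy weights (assumed values): zero gate weights give z = 0.5 everywhere,
# and an identity candidate map gives h_tilde = x.
Wz, bz = [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]
Wh, bh = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]

h = min_gru_step([2.0, -4.0], [0.0, 0.0], Wz, bz, Wh, bh)
print(h)  # [1.0, -2.0]
```

Because `z` and `h_tilde` can be computed for every time step up front, the only sequential part left is the final blend with `h_prev`.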

Efficient Parallel Training

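Because the gates no longer depend on the previous hidden state, minimal RNNs can be trained in parallel across time steps (for example with a parallel scan) instead of one step at a time, resulting in up to 175 times faster training compared to the standard LSTM and GRU.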
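The idea behind parallel training can be sketched as follows. A recurrence of the form h_t = a_t * h_(t-1) + b_t, where a_t and b_t do not depend on h, is a chain of affine maps, and composing affine maps is associative, so all prefixes can be computed in a logarithmic number of rounds. The example below uses a generic Hillis-Steele scan on scalars as an illustration; it is not necessarily the scan variant used in the original work, and the function names are hypothetical.

```python
def combine(left, right):
    """Compose two affine maps h -> a*h + b, applying `left` first."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)

def scan_sequential(a, b, h0=0.0):
    """Reference implementation: one step at a time."""
    h, out = h0, []
    for at, bt in zip(a, b):
        h = at * h + bt
        out.append(h)
    return out

def scan_parallel(a, b, h0=0.0):
    """Hillis-Steele inclusive scan.

    Runs in O(log T) rounds; within a round every position is updated
    independently, so a GPU could process all positions at once.
    """
    elems = list(zip(a, b))
    step = 1
    while step < len(elems):
        elems = [
            combine(elems[t - step], elems[t]) if t >= step else elems[t]
            for t in range(len(elems))
        ]
        step *= 2
    # elems[t] now holds the composed map for steps 0..t; apply it to h0.
    return [A * h0 + B for A, B in elems]

# For minGRU one would set a_t = 1 - z_t and b_t = z_t * h_tilde_t.
a = [0.5, 0.25, 0.5, 0.75, 0.5]
b = [1.0, 2.0, -1.0, 0.5, 3.0]
seq = scan_sequential(a, b, h0=2.0)
par = scan_parallel(a, b, h0=2.0)
print(all(abs(s - p) < 1e-12 for s, p in zip(seq, par)))
```

In pure Python the list comprehension still runs serially; the point is only that each round has no dependency between positions, which is what allows the work to be spread across parallel hardware.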

Improved Performance and Speed

Since the savings come from removing the step-by-step dependency, the shorter training times matter most in real-world applications that have to handle long sequences.

Competitive Results and Robustness

The minimal RNNs perform competitively with modern sequence architectures on tasks such as reinforcement learning and language modeling, despite their much simpler design.

Future of AI with Minimal RNNs

Minimal LSTMs and GRUs offer a promising way to combine efficient parallel training with strong empirical performance in sequence-based applications.

Unlocking AI Potential

Identify automation opportunities, define KPIs, select suitable AI tools, implement gradually, and leverage AI to redefine work processes efficiently.
