Practical Solutions in Advancing AI Research

Challenges in Neural Network Flexibility

In practice, neural networks often fall short of the flexibility that theory attributes to them. This gap affects applications such as medical diagnosis, autonomous driving, and large-scale language models.

Current Methods and Limitations

Overparameterization, convolutional architectures, optimizers, and activation functions all influence how well a network fits its training data, yet each has notable limitations in achieving optimal practical performance.

Novel Approach for Understanding Flexibility

A team of researchers proposes a comprehensive empirical examination of neural networks’ data-fitting capacity using the Effective Model Complexity (EMC) metric, offering new insights beyond theoretical bounds.

Key Technical Aspects and Insights

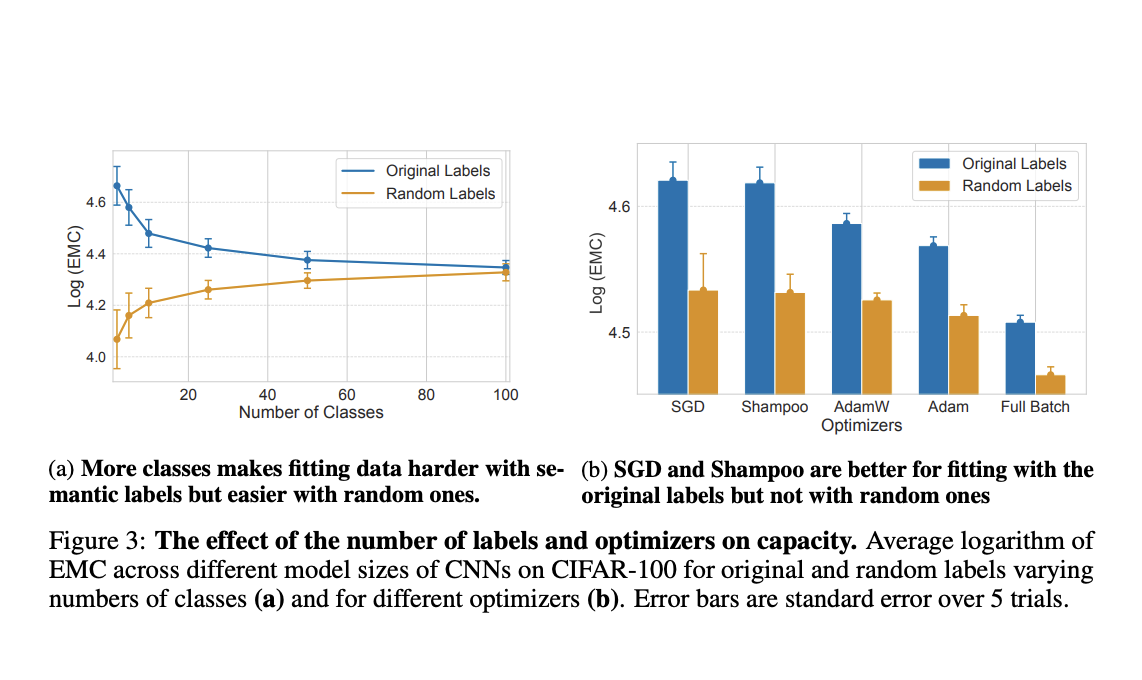

The EMC metric is calculated through an iterative approach involving various neural network architectures and optimizers. The study reveals that standard optimizers limit data-fitting capacity, while CNNs are more parameter-efficient even on random data.
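The iterative idea can be illustrated with a minimal sketch. It assumes that EMC is read as the largest number of training samples a model can fit perfectly, and that fitting is monotone (a model that fits n samples also fits fewer). The names `estimate_emc` and `make_linear_can_fit` are hypothetical, the doubling-then-bisection schedule is a choice made here rather than the study's procedure, and the toy linear least-squares model on random targets is only a stand-in for training a real network.

```python
import numpy as np


def estimate_emc(can_fit, start=1, max_samples=4096):
    """Return the largest n in [start, max_samples] for which can_fit(n) is True.

    Returns 0 if even `start` samples cannot be fit.
    Assumes monotonicity: if n samples can be fit, so can any smaller n.
    """
    if not can_fit(start):
        return 0
    lo = start          # largest sample count known to be fit
    hi = start * 2
    # Phase 1: double the sample count until a fit fails or the cap is passed.
    while hi <= max_samples and can_fit(hi):
        lo, hi = hi, hi * 2
    hi = min(hi, max_samples + 1)  # smallest count known (or capped) to fail
    # Phase 2: bisect between the last success and the first failure.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if can_fit(mid):
            lo = mid
        else:
            hi = mid
    return lo


def make_linear_can_fit(n_params, seed=0, tol=1e-6):
    """Toy stand-in for 'train a fresh model on n samples and check the fit'.

    Uses a linear model with n_params weights and random real-valued targets,
    so there is no structure in the data to exploit.
    """
    rng = np.random.default_rng(seed)

    def can_fit(n):
        X = rng.standard_normal((n, n_params))
        y = rng.standard_normal(n)
        w, *_ = np.linalg.lstsq(X, y, rcond=None)
        # "Perfect fit" here means every residual is below the tolerance.
        return bool(np.max(np.abs(X @ w - y)) < tol)

    return can_fit


emc = estimate_emc(make_linear_can_fit(n_params=20))
print(emc)  # 20: this toy linear model fits exactly as many samples as it has weights
```

For a real network, `can_fit` would train a freshly initialized model on `n` samples with a chosen optimizer and report whether it reaches perfect training accuracy. Swapping the architecture or optimizer inside that callable is what would allow comparisons of the kind the study describes.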

Implications for AI Research

The study challenges conventional wisdom on neural network data-fitting capacity, revealing the influence of optimizers and activation functions. These insights have substantial implications for improving neural network training and architecture design.
