Transforming Language Model Training with Critique Fine-Tuning

Limitations of Traditional Training Methods

Traditional fine-tuning of language models typically relies on imitation: the model is trained to reproduce reference answers. While this works for simple tasks, it limits the model's ability to think critically and reason deeply. As AI applications become more demanding, we need models that can not only generate responses but also evaluate their accuracy and logic.

The Need for Improved Reasoning

Imitation-based training has serious drawbacks. Because models are never asked to analyze outputs, they can produce responses that sound correct but lack genuine reasoning. Simply adding more training data does not guarantee better-quality responses, which points to the need for methods that strengthen reasoning skills rather than just scaling up data.

Current Solutions and Their Challenges

Some existing methods, such as reinforcement learning and self-critique, aim to address these issues. However, they often require extensive computational resources and can produce inconsistent results. Most techniques still focus on data volume rather than on improving reasoning, which limits their effectiveness in complex problem-solving.

Introducing Critique Fine-Tuning (CFT)

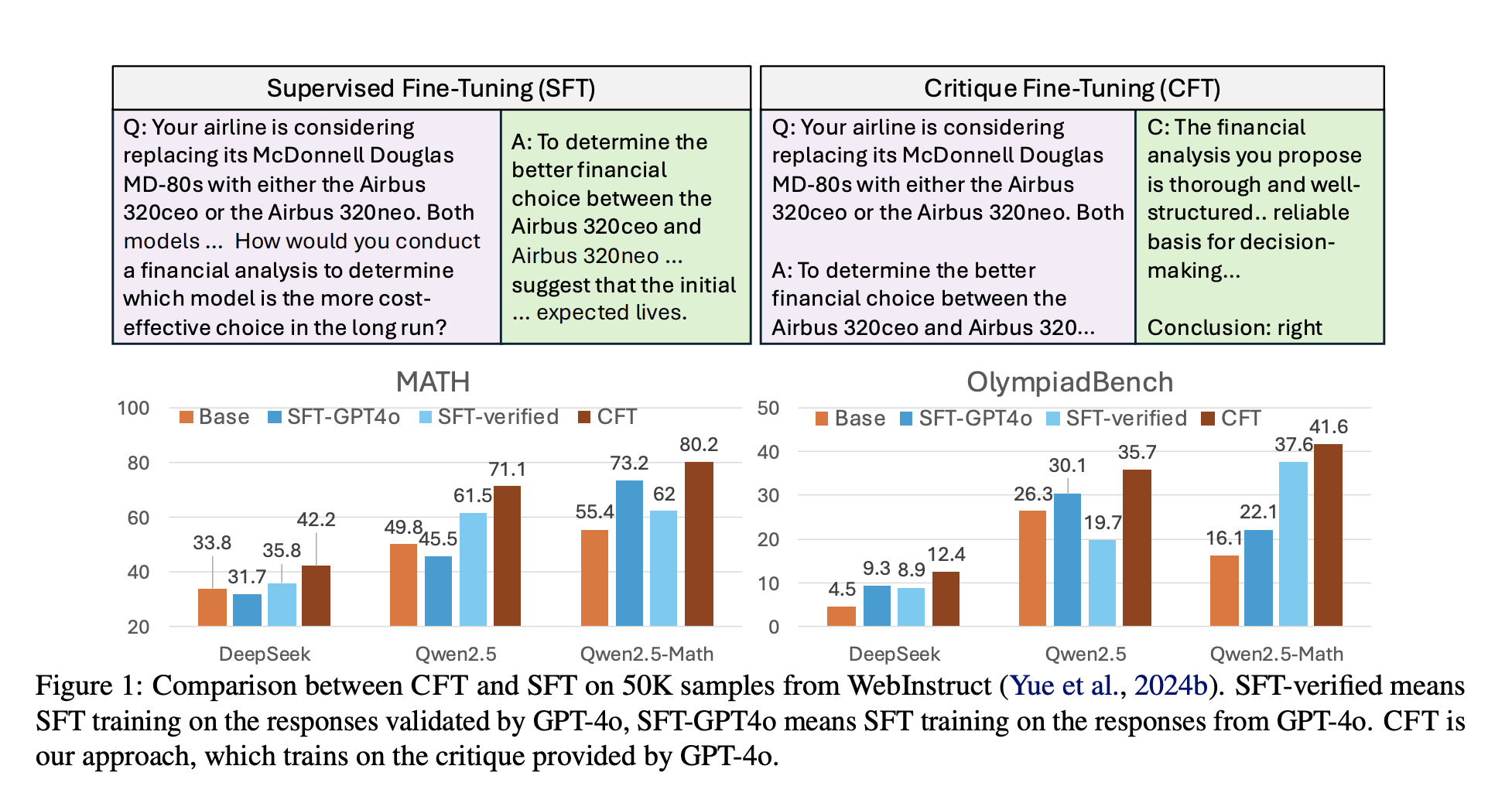

A research team from the University of Waterloo, Carnegie Mellon University, and the Vector Institute has developed a new method called Critique Fine-Tuning (CFT). Instead of training models to imitate correct answers, CFT trains them to critique responses. The researchers built a dataset of 50,000 samples with critiques generated by GPT-4o, teaching models to identify flaws and suggest improvements, particularly in structured reasoning tasks such as math.
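The article does not describe the researchers' data pipeline in detail. As a rough illustration only, the sketch below shows one way critique samples could be collected from a teacher model: each (question, candidate answer) pair is wrapped in a chat-style message list, passed to a pluggable `teacher` callable (a stand-in for a GPT-4o call), and stored together with a parsed verdict. The instruction text, the `Conclusion:` verdict format, and every function name here are hypothetical assumptions, not the researchers' prompt or code.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]

# Hypothetical instruction: the researchers' actual GPT-4o prompt is not given here.
CRITIQUE_INSTRUCTION = (
    "You are reviewing a solution to a math problem. Check each step, point out "
    "any errors, and finish with 'Conclusion: correct' or 'Conclusion: incorrect'."
)

# Matches the (hypothetical) verdict line requested above.
VERDICT_RE = re.compile(r"conclusion:\s*(correct|incorrect)", re.IGNORECASE)


@dataclass
class CritiqueRecord:
    question: str
    answer: str
    critique: str
    judged_correct: Optional[bool]  # None if the teacher gave no parsable verdict


def make_critique_request(question: str, answer: str) -> List[Message]:
    """Wrap a (question, answer) pair as a chat-style message list."""
    return [
        {"role": "system", "content": CRITIQUE_INSTRUCTION},
        {"role": "user", "content": f"Question:\n{question}\n\nSolution:\n{answer}"},
    ]


def parse_verdict(critique: str) -> Optional[bool]:
    """Return the last 'Conclusion: ...' verdict, or None if absent."""
    matches = VERDICT_RE.findall(critique)
    if not matches:
        return None
    return matches[-1].lower() == "correct"


def collect_critiques(
    pairs: List[Tuple[str, str]], teacher: Callable[[List[Message]], str]
) -> List[CritiqueRecord]:
    """Ask the teacher model to critique each candidate answer."""
    records = []
    for question, answer in pairs:
        critique = teacher(make_critique_request(question, answer))
        records.append(CritiqueRecord(question, answer, critique, parse_verdict(critique)))
    return records


def stub_teacher(messages: List[Message]) -> str:
    # Offline stand-in for a real GPT-4o call, so the sketch runs without an API key.
    if "= 180" in messages[-1]["content"]:
        return "The multiplication is right. Conclusion: correct"
    return "12 * 15 is 180, not 170. Conclusion: incorrect"


records = collect_critiques(
    [("What is 12 * 15?", "12 * 15 = 170"), ("What is 12 * 15?", "12 * 15 = 180")],
    stub_teacher,
)
```

Swapping `stub_teacher` for a function that calls a real chat-completion endpoint would turn this into an actual collection loop. The parsed verdict is an optional extra that makes samples easier to filter or audit.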

How CFT Works

CFT replaces traditional question-response pairs with structured critique data. During training, the model receives a question together with a candidate answer and learns to generate a critique that evaluates whether that answer is accurate. This encourages models to sharpen their analytical skills, leading to more reliable and explainable outputs.
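A minimal sketch of how a single CFT training example might be encoded, assuming a causal language model trained with next-token cross-entropy. The question and candidate answer form the prompt, the critique is the target, and prompt positions receive the label `-100` (the default `ignore_index` of PyTorch's `CrossEntropyLoss`), so only critique tokens contribute to the loss. A standard imitation (SFT) encoder is included for contrast. The prompt templates, the toy whitespace tokenizer, and all function names are illustrative assumptions rather than the paper's code.

```python
from dataclasses import dataclass
from typing import Dict, List

# Label value skipped by the loss; matches PyTorch CrossEntropyLoss's default ignore_index.
IGNORE_INDEX = -100


@dataclass
class CritiqueSample:
    question: str
    candidate_answer: str  # a possibly flawed response to be evaluated
    critique: str          # teacher-written evaluation of that response


def tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Toy whitespace tokenizer standing in for a real subword tokenizer.

    Unseen tokens are added to `vocab` on the fly.
    """
    ids = []
    for tok in text.split():
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return ids


def encode(prompt: str, target: str, vocab: Dict[str, int]) -> Dict[str, List[int]]:
    """Concatenate prompt and target; mask prompt positions out of the loss."""
    prompt_ids = tokenize(prompt, vocab)
    target_ids = tokenize(target, vocab)
    return {
        "input_ids": prompt_ids + target_ids,
        "labels": [IGNORE_INDEX] * len(prompt_ids) + target_ids,
    }


def encode_sft_example(question: str, reference_answer: str, vocab: Dict[str, int]):
    # Imitation (SFT): the model learns to reproduce the reference answer.
    prompt = f"Question:\n{question}\n\nAnswer:\n"
    return encode(prompt, reference_answer, vocab)


def encode_cft_example(sample: CritiqueSample, vocab: Dict[str, int]):
    # CFT: the model sees the question plus a candidate answer and learns to
    # generate the critique. The template wording is a hypothetical choice.
    prompt = (
        f"Question:\n{sample.question}\n\n"
        f"Candidate answer:\n{sample.candidate_answer}\n\n"
        "Critique:\n"
    )
    return encode(prompt, sample.critique, vocab)


vocab: Dict[str, int] = {}
sample = CritiqueSample(
    question="What is 12 * 15?",
    candidate_answer="12 * 15 = 170",
    critique="Incorrect. 12 * 15 = 180, so the final answer 170 is wrong.",
)
cft = encode_cft_example(sample, vocab)
sft = encode_sft_example("What is 12 * 15?", "12 * 15 = 180", vocab)
```

The two encoders differ only in what the model is asked to produce: SFT supervises a reference answer, while CFT supervises a critique of a possibly flawed answer. In a real setup, `tokenize` would be replaced by the model's own tokenizer.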

Proven Effectiveness of CFT

Experimental results show that models trained with CFT consistently outperform those trained with traditional imitation-based methods. For example, Qwen2.5-Math-CFT, trained on just 50,000 examples, performs competitively with models trained on over 2 million samples, more than 40 times as much data. CFT models showed a 7.0% improvement in accuracy on the MATH benchmark and 16.6% on Minerva-Math compared with standard methods, evidence that critique-based learning can be both data-efficient and effective.

The Future of AI Training

This research highlights the benefits of critique-based learning for training language models. By focusing on critique generation rather than imitation, models can improve both their accuracy and their reasoning. Because it reaches strong results with a comparatively small dataset, the approach also reduces computational costs. Future research may incorporate additional critique mechanisms to further improve model reliability across a wider range of problem-solving domains.

Learn More

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.