Current Limitations of Multimodal Retrieval-Augmented Generation (RAG)

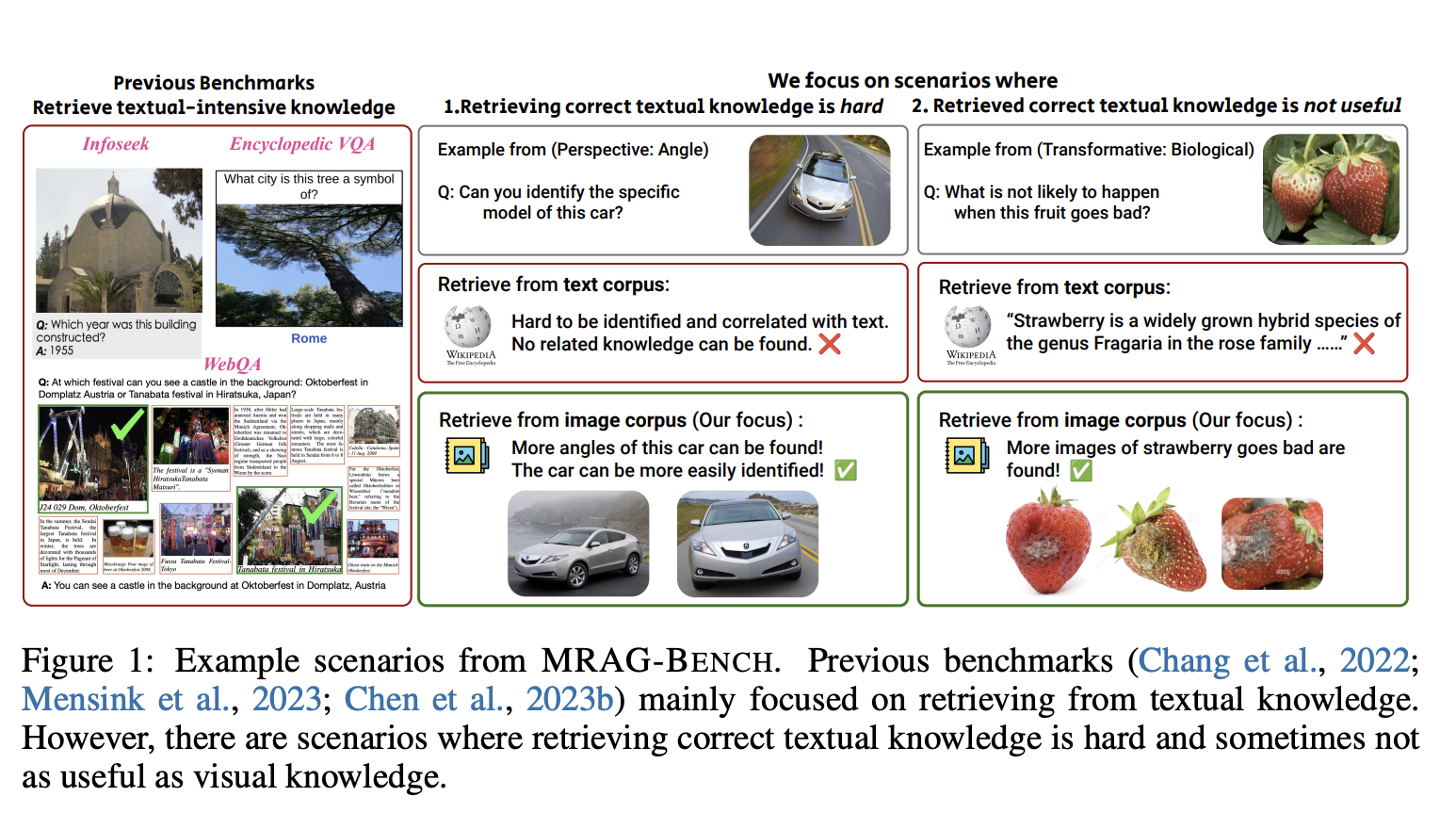

Most existing RAG benchmarks focus on retrieving text to answer questions. That is limiting, because in many cases retrieving visual information is easier and more useful than retrieving text. The gap holds back progress on large vision-language models (LVLMs), which need to draw on several types of information effectively.

Introducing MRAG-Bench

Researchers from UCLA and Stanford have developed MRAG-Bench, a benchmark built around scenarios where retrieved visual knowledge is more useful than text. It evaluates how well LVLMs perform in those scenarios and includes:

- 16,130 images

- 1,353 human-annotated multiple-choice questions

- Nine distinct scenarios focused on visual knowledge advantages

Benchmark Structure

MRAG-Bench is organized into two main areas:

- Perspective Changes: Challenges models with different angles, visibility, and resolution.

- Transformative Changes: Focuses on how visual entities change over time or undergo physical change.

It includes a carefully curated set of 9,673 ground-truth images to ensure the benchmark reflects real-world visual understanding.
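To make the structure concrete, here is a minimal sketch of how a single benchmark item could be represented and grouped by area. The field names, scenario labels, and file names below are hypothetical and chosen only for illustration; they are not taken from the released MRAG-Bench dataset.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class BenchmarkItem:
    """Hypothetical record layout for one multiple-choice question."""
    question: str
    choices: list[str]
    answer_index: int          # index into `choices` of the correct option
    aspect: str                # "perspective" or "transformative"
    scenario: str              # illustrative label, e.g. "angle"
    query_image: str           # path to the image the question is about
    ground_truth_images: list[str] = field(default_factory=list)


def count_by_aspect(items: list[BenchmarkItem]) -> Counter:
    """Count how many items fall under each of the two main areas."""
    return Counter(item.aspect for item in items)


# Toy items, invented for this example only.
items = [
    BenchmarkItem("Which car model is this?", ["A", "B", "C", "D"], 1,
                  "perspective", "angle", "q1.png", ["gt1.png", "gt2.png"]),
    BenchmarkItem("Which landmark is this?", ["A", "B", "C", "D"], 0,
                  "perspective", "resolution", "q2.png", ["gt3.png"]),
    BenchmarkItem("What will this plant grow into?", ["A", "B", "C", "D"], 3,
                  "transformative", "temporal", "q3.png", ["gt4.png"]),
]

counts = count_by_aspect(items)
print(counts)
```

Keeping the ground-truth images on the item itself makes it straightforward to compare a model's answers with and without retrieved visual knowledge.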

Evaluation Results

The results show that augmenting models with retrieved images improves performance more than augmenting them with text. Even so, models make far less use of that visual knowledge than humans do:

- The best proprietary model, GPT-4o, improved by only 5.82% with visual augmentation.

- In contrast, human participants saw a 33.16% improvement, showcasing the gap in performance.

Proprietary models are also better than open-source models at telling high-quality retrieved images from poor ones; open-source models often struggle with this.
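The improvement figures above can be read as the change in multiple-choice accuracy between an unaugmented run and an image-augmented run. The sketch below shows that calculation, assuming the gain is the difference in accuracy measured in percentage points. The function names and the toy answers are hypothetical and are not results from the benchmark.

```python
def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Return the percentage of predictions that match the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("answers must not be empty")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


def augmentation_gain(baseline: list[str], augmented: list[str],
                      answers: list[str]) -> float:
    """Accuracy with retrieved images minus accuracy without them."""
    return accuracy(augmented, answers) - accuracy(baseline, answers)


# Toy data, invented for this example only.
answers = ["A", "C", "B", "D"]
baseline = ["A", "B", "B", "A"]    # 2 of 4 correct without retrieval
augmented = ["A", "C", "B", "A"]   # 3 of 4 correct with retrieved images

gain = augmentation_gain(baseline, augmented, answers)
print(f"gain: {gain:.2f} percentage points")
```

Running the same calculation for a model and for human participants on the same questions gives two gains that can be compared directly.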

Conclusion

MRAG-Bench is an evaluation benchmark for LVLMs that targets scenarios where visual information is more beneficial than text. The research highlights a significant gap between humans and models in how effectively they use retrieved visual data.
