Enhancing Multi-Step Reasoning in Large Language Models

Practical Solutions and Value

Large language models (LLMs) have shown impressive capabilities in content generation and problem-solving. However, they still struggle with multi-step deductive reasoning, where a conclusion can only be reached by chaining several rules together. Weaknesses in keeping a consistent logical chain of thought and in deep contextual understanding limit their performance on such complex reasoning tasks.

Existing methods to enhance LLMs’ reasoning capabilities include integrating external memory databases and using techniques such as the Recurrent Memory Transformer (RMT). However, these approaches bring their own challenges, such as potential biases and difficulty handling long sequences in multi-turn dialogues.

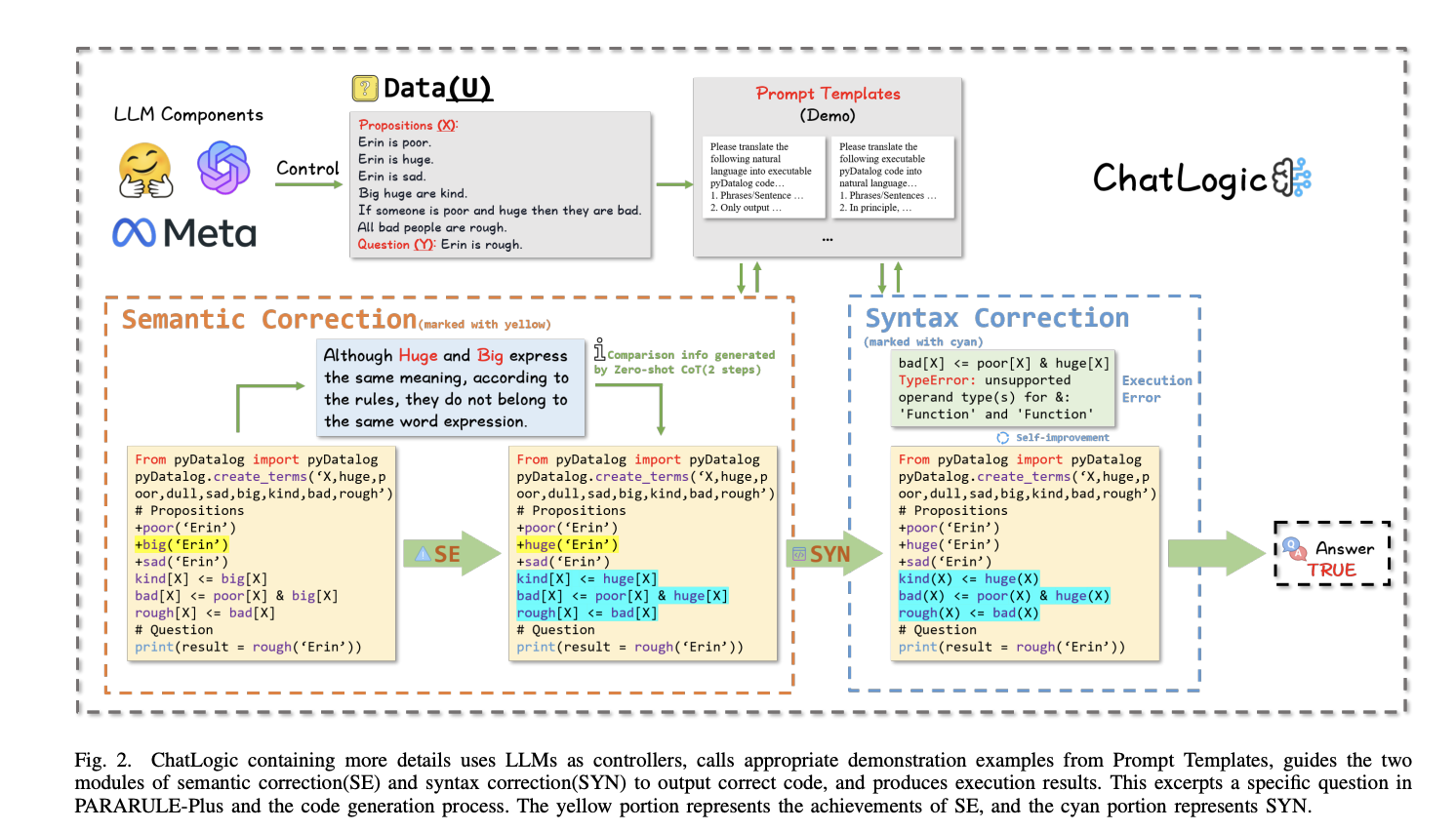

Researchers from the University of Auckland have introduced ChatLogic, a framework that augments LLMs with a logical reasoning engine. ChatLogic aims to improve multi-step deductive reasoning by having the LLM convert logic problems written in natural language into symbolic representations that the reasoning engine can execute. To guide the LLM through the reasoning steps, it uses a prompting technique the authors call ‘Mix-shot Chain of Thought’ (CoT).
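To make this division of labor concrete, here is a minimal sketch of the symbolic side only. It assumes that a problem reduces to simple facts such as ("strong", "anne") and if-then rules over them. The forward-chaining engine is a hand-written stand-in, not ChatLogic's actual engine. The step in which the LLM translates natural-language text into facts and rules is hard-coded as hypothetical output. The names `Rule`, `forward_chain`, and `entails` are illustrative.

```python
from dataclasses import dataclass

# A fact pairs an attribute with an entity, e.g. ("strong", "anne").
Fact = tuple[str, str]


@dataclass(frozen=True)
class Rule:
    """If an entity has every attribute in `premises`, it also has `conclusion`."""
    premises: tuple[str, ...]
    conclusion: str


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply every rule repeatedly until no new fact is derived (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        # Rules in this sketch never introduce new entities, so collect them once per pass.
        entities = {entity for _, entity in known}
        for rule in rules:
            for entity in entities:
                if all((p, entity) in known for p in rule.premises):
                    derived = (rule.conclusion, entity)
                    if derived not in known:
                        known.add(derived)
                        changed = True
    return known


def entails(facts: set[Fact], rules: list[Rule], query: Fact) -> bool:
    """Return True if the query follows from the facts and rules."""
    return query in forward_chain(facts, rules)


# Hypothetical output of the LLM translation step for the text:
# "Anne is strong. Strong people are heavy. Heavy people are big. Is Anne big?"
facts = {("strong", "anne")}
rules = [Rule(("strong",), "heavy"), Rule(("heavy",), "big")]

print(entails(facts, rules, ("big", "anne")))  # True, via two inference steps
```

The engine applies rules exhaustively, so adding more reasoning steps does not make the deduction itself harder. The LLM only has to produce a correct translation. The sketch ignores negation and multi-argument relations, which real logic problems often involve.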

Experimental results show that LLMs integrated with ChatLogic substantially outperform the same models used without it on multi-step reasoning tasks. For instance, on the PARARULE-Plus dataset, GPT-3.5 with ChatLogic achieved an accuracy of 0.5275, compared to 0.344 for the base model. Similarly, GPT-4 with ChatLogic reached an accuracy of 0.73, while the base model reached only 0.555.
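A few lines of arithmetic turn the reported accuracies into absolute and relative gains. The sketch below uses only the four PARARULE-Plus numbers quoted above. `gains` is an illustrative helper.

```python
# PARARULE-Plus accuracies as reported in the text.
results = {
    "GPT-3.5": {"base": 0.344, "chatlogic": 0.5275},
    "GPT-4": {"base": 0.555, "chatlogic": 0.73},
}


def gains(base: float, improved: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = (improved - base) * 100
    relative = (improved - base) / base * 100
    return absolute, relative


for model, acc in results.items():
    abs_pts, rel_pct = gains(acc["base"], acc["chatlogic"])
    print(f"{model}: +{abs_pts:.2f} points ({rel_pct:.1f}% relative)")
```

This prints +18.35 points (53.3% relative) for GPT-3.5 and +17.50 points (31.5% relative) for GPT-4. The absolute gains are similar, but the weaker base model benefits more in relative terms.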

In conclusion, ChatLogic offers a promising way to address the multi-step reasoning limitations of current LLMs. By pairing the model with a logical reasoning engine and guiding it with Mix-shot CoT prompting, the researchers substantially improved the accuracy of GPT-3.5 and GPT-4 on multi-step reasoning benchmarks such as PARARULE-Plus.