Understanding Vision-Language Models (VLMs)

Vision-language models (VLMs) underpin tasks such as image retrieval, captioning, and medical diagnostics by connecting visual data with language. However, they struggle to understand negation, which matters whenever a query specifies what should be absent, for example when distinguishing “a room without windows” from “a room with windows.” This limitation restricts their use in high-stakes fields such as safety monitoring and healthcare.

The Challenge of Negation

Current VLMs such as CLIP align images and text in a shared embedding space but falter on negated statements. They often treat a negated caption and its affirmative counterpart as equivalent, a tendency attributed to biases in their training data. Existing benchmarks also fail to reflect the complexity of negation in natural language, so the weakness is hard to measure. As a result, VLMs handle precise queries poorly, especially in medical imaging.

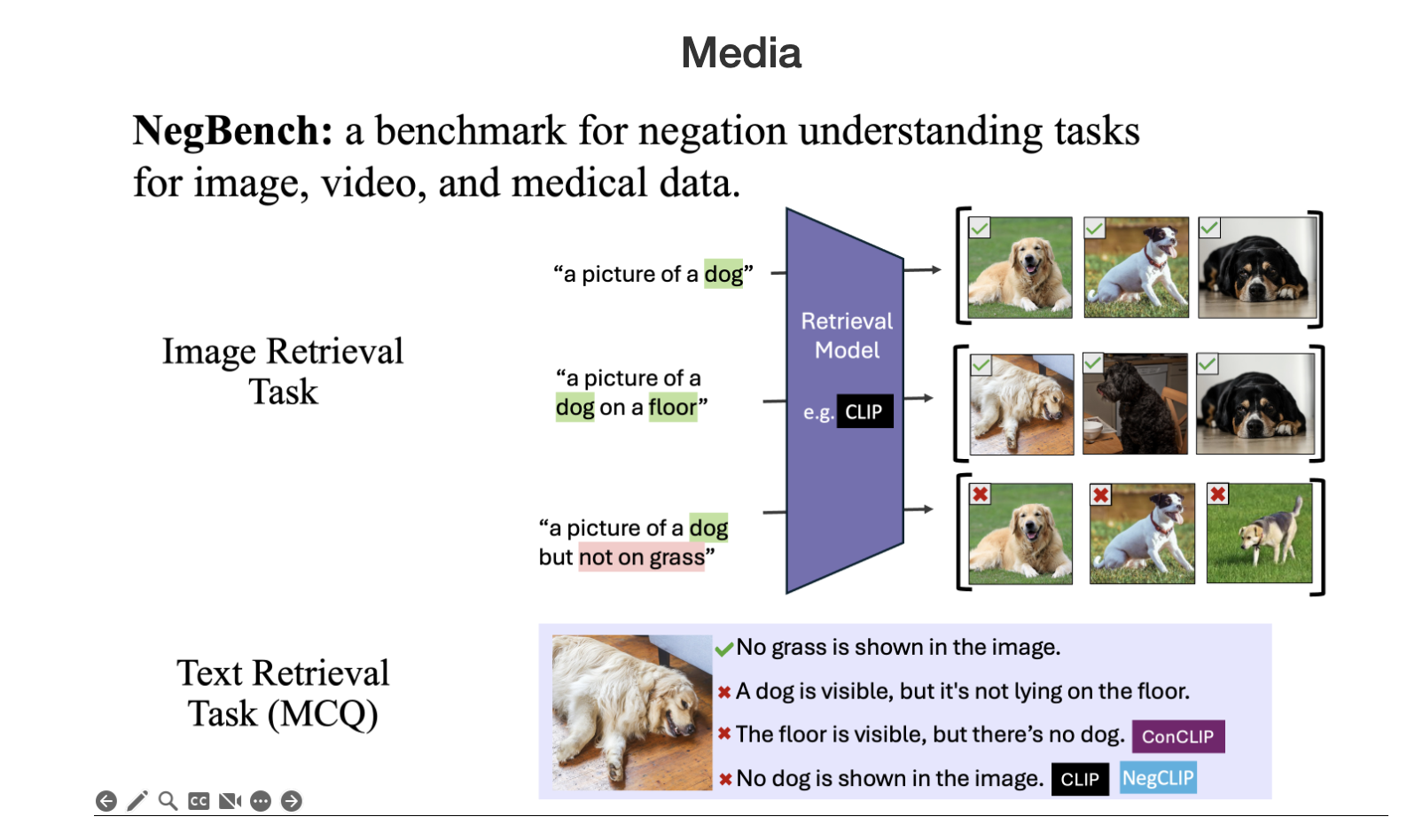

Introducing NegBench

To tackle these issues, researchers from MIT, Google DeepMind, and the University of Oxford developed NegBench, a framework for evaluating and improving how VLMs understand negation. It is built around two tasks:

- Retrieval with Negation (Retrieval-Neg): Tests the model’s ability to find images based on both affirmative and negated descriptions.

- Multiple Choice Questions with Negation (MCQ-Neg): Challenges models to choose the correct caption from a set of options that differ only subtly.
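The two tasks above reduce to familiar metrics: recall at k for retrieval and accuracy for multiple choice. The following is a minimal sketch of how each could be scored from a model's similarity scores. The function names `recall_at_k` and `mcq_accuracy` and all numbers are illustrative assumptions, not NegBench's actual code or results.

```python
from typing import Sequence


def recall_at_k(similarity: Sequence[Sequence[float]],
                correct_image: Sequence[int],
                k: int) -> float:
    """Fraction of queries whose correct image is among the top-k scored images.

    similarity[q][i] is the score of query q against image i (higher is a
    better match); correct_image[q] is the ground-truth image index for q.
    """
    hits = 0
    for scores, target in zip(similarity, correct_image):
        # Rank image indices from highest to lowest score.
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        if target in ranked[:k]:
            hits += 1
    return hits / len(correct_image)


def mcq_accuracy(option_scores: Sequence[Sequence[float]],
                 correct_option: Sequence[int]) -> float:
    """Fraction of questions where the highest-scored caption is the correct one."""
    correct = 0
    for scores, answer in zip(option_scores, correct_option):
        predicted = max(range(len(scores)), key=lambda i: scores[i])
        if predicted == answer:
            correct += 1
    return correct / len(correct_option)


# Made-up scores for illustration only (not output from any real model).
retrieval_scores = [
    [0.9, 0.2, 0.1],  # query 0: correct image (0) is ranked first
    [0.3, 0.4, 0.8],  # query 1: correct image (1) is ranked second
    [0.5, 0.6, 0.1],  # query 2: correct image (2) is ranked last
]
retrieval_targets = [0, 1, 2]

mcq_scores = [
    [0.7, 0.2, 0.1, 0.0],  # model picks option 0
    [0.3, 0.3, 0.1, 0.6],  # model picks option 3
]
mcq_answers = [0, 1]

print(recall_at_k(retrieval_scores, retrieval_targets, k=1))
print(recall_at_k(retrieval_scores, retrieval_targets, k=2))
print(mcq_accuracy(mcq_scores, mcq_answers))
```

Running the same retrieval metric separately on affirmative and negated queries is one way to expose a gap between the two.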

NegBench draws on large synthetic datasets such as CC12M-NegCap, which contains millions of captions covering a range of negation scenarios. It also adapts standard datasets to include negated captions, which broadens the linguistic variety of the evaluation.
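To make the idea of a negated caption concrete, here is a small template-based sketch that turns a list of present and absent objects into an affirmative and a negated caption. It is a simplified stand-in and should not be read as the pipeline behind CC12M-NegCap; the templates, the `caption_pair` function, and the example objects are all hypothetical.

```python
import random

# Hypothetical templates chosen for illustration; not NegBench's wording.
AFFIRMATIVE_TEMPLATES = [
    "A photo that shows {present}.",
    "An image containing {present}.",
]
# The {absent} slot is assumed to be filled with a plural noun.
NEGATED_TEMPLATES = [
    "A photo that shows {present} but no {absent}.",
    "An image containing {present} without any {absent}.",
    "No {absent} appear in this image, only {present}.",
]


def caption_pair(present: str, absent: str, rng: random.Random) -> dict:
    """Build one affirmative and one negated caption for the same image."""
    return {
        "affirmative": rng.choice(AFFIRMATIVE_TEMPLATES).format(present=present),
        "negated": rng.choice(NEGATED_TEMPLATES).format(
            present=present, absent=absent
        ),
    }


rng = random.Random(0)  # fixed seed so the sketch is reproducible
pair = caption_pair("a bed", "windows", rng)
print(pair["affirmative"])
print(pair["negated"])
```

Varying the templates is a cheap way to avoid a model latching onto a single phrasing of "not".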

Testing and Improving Models

NegBench uses both real and synthetic datasets to assess negation understanding. For example, it modifies datasets such as COCO and CheXpert to include negation scenarios, and it builds multiple-choice questions from a variety of templates so that the questions are diverse. Fine-tuning targets two objectives: improving the alignment of image-caption pairs and sharpening the model’s ability to make fine-grained negation judgments.
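One plausible way to combine the two fine-tuning objectives is to add a CLIP-style contrastive loss over image-caption pairs to a cross-entropy loss over multiple-choice options. The sketch below works on plain Python lists and assumes the logits are already scaled by any temperature. The weighting parameter `mcq_weight` and all function names are assumptions for illustration, not the authors' exact formulation.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def cross_entropy(logits, target):
    """Negative log-probability assigned to the target index."""
    return -log_softmax(logits)[target]


def contrastive_loss(sim):
    """Symmetric image-text contrastive loss.

    sim[i][j] is the similarity of image i and caption j; matched pairs
    lie on the diagonal of this square matrix.
    """
    n = len(sim)
    # Each image should pick out its own caption (rows).
    image_to_text = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # Each caption should pick out its own image (columns).
    columns = [[sim[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return 0.5 * (image_to_text + text_to_image)


def mcq_loss(option_logits, answers):
    """Mean cross-entropy over multiple-choice questions."""
    total = sum(cross_entropy(l, a) for l, a in zip(option_logits, answers))
    return total / len(answers)


def combined_loss(sim, option_logits, answers, mcq_weight=1.0):
    """Contrastive loss plus a weighted MCQ loss (the weight is an assumption)."""
    return contrastive_loss(sim) + mcq_weight * mcq_loss(option_logits, answers)


# Illustrative values only: a well-aligned batch and one confident MCQ answer.
aligned = [[5.0, 0.0], [0.0, 5.0]]
uniform = [[0.0, 0.0], [0.0, 0.0]]
print(combined_loss(aligned, [[4.0, 0.0, 0.0, 0.0]], [0]))
print(combined_loss(uniform, [[0.0, 0.0, 0.0, 0.0]], [0]))
```

The contrastive term rewards overall image-caption alignment, while the multiple-choice term penalizes the model specifically for confusing a caption with its near-identical negated variants.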

Results and Impact

Models fine-tuned on this data show significant improvements:

- A 10% increase in recall on negated queries, bringing it in line with recall on standard retrieval tasks.

- Up to a 40% improvement in accuracy on multiple-choice tasks, with models distinguishing affirmative from negated captions more reliably.

These gains suggest that training on diverse negation examples reduces affirmation bias, the tendency to read a negated statement as if it were affirmative.

Conclusion

NegBench addresses a significant gap in VLMs by measuring and improving their understanding of negation. The result is better performance on retrieval and comprehension tasks and a step toward more robust AI systems capable of nuanced language understanding, with direct relevance to fields such as medical diagnostics and semantic content retrieval.
