Advancements in Neural Networks

The development of neural networks has transformed fields like natural language processing, computer vision, and scientific computing. However, training these models is computationally expensive. Higher-order tensor weights help capture complex, multidimensional relationships, but the optimizer states that accompany them can quickly exhaust available memory.

Challenges in Scientific Computing

In scientific computing, tensor-based layers are used to model complex systems, for example when solving partial differential equations (PDEs), and the optimizer states for these layers require a large amount of memory. Flattening the tensors into matrices so that matrix-based memory-saving methods can be applied may discard important multidimensional structure, hurting both efficiency and performance. What is needed are methods that cut memory costs without sacrificing model accuracy.
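For a sense of scale, the short Python calculation below estimates the optimizer-state memory of a single tensor weight under Adam, which keeps two moment estimates (m and v) per parameter. The weight shape, read as (in_channels, out_channels, modes_x, modes_y), and 4-byte float32 storage are assumptions chosen for illustration, and the helper name `adam_state_bytes` is hypothetical.

```python
from math import prod


def adam_state_bytes(shape, bytes_per_elem=4):
    """Adam stores two moment tensors (m and v), each the same shape as the weight.

    bytes_per_elem=4 assumes float32 storage (an illustrative assumption).
    """
    return 2 * prod(shape) * bytes_per_elem


# Hypothetical 4th-order weight: (in_channels, out_channels, modes_x, modes_y)
weight_shape = (64, 64, 16, 16)
n_params = prod(weight_shape)
state_mib = adam_state_bytes(weight_shape) / 2**20
print(f"{n_params:,} parameters -> {state_mib:.1f} MiB of Adam state")
# prints "1,048,576 parameters -> 8.0 MiB of Adam state"
```

Every such layer adds its own optimizer state on top of its weights and gradients, so these costs grow quickly with model size.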

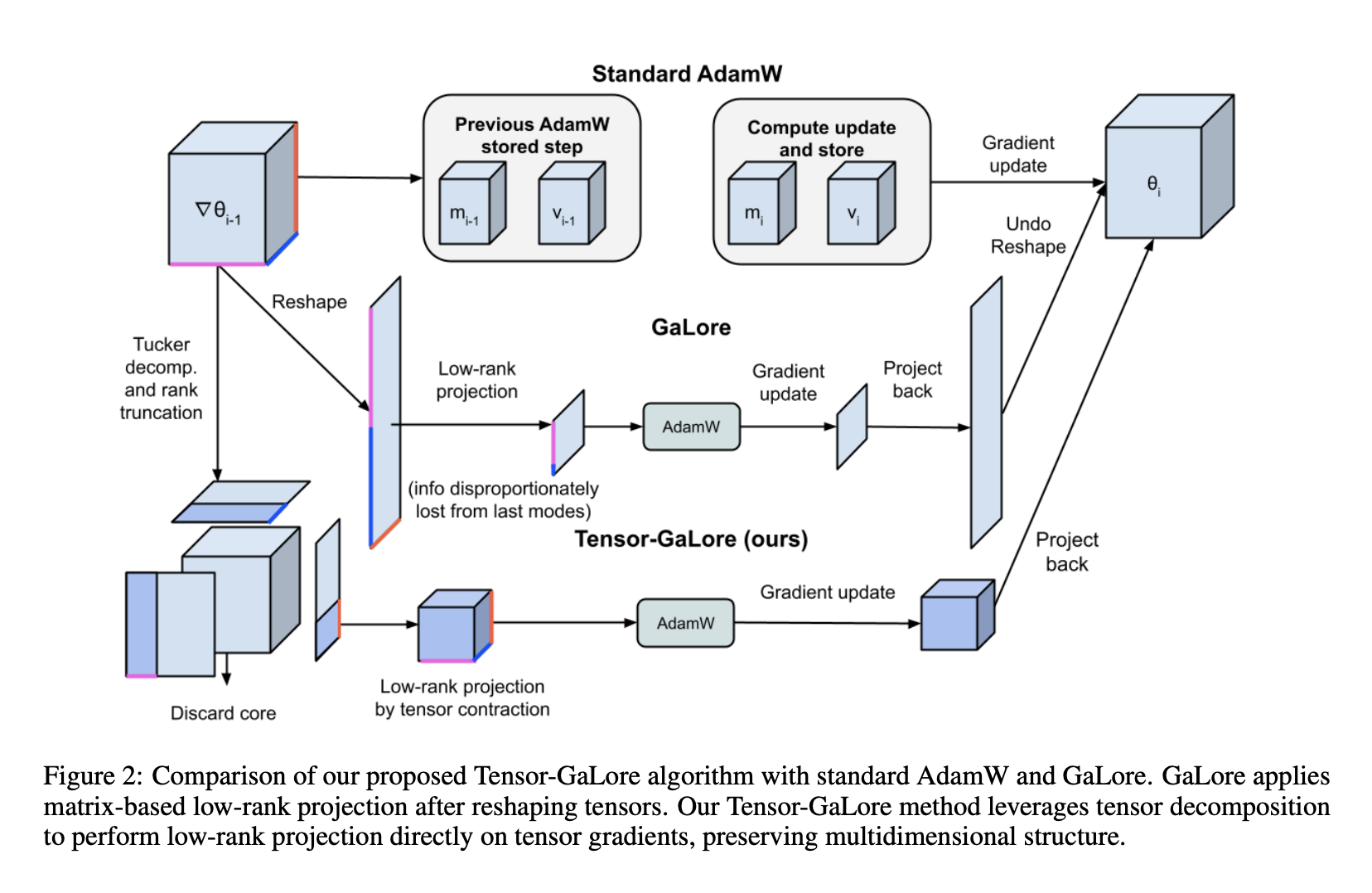

Introducing Tensor-GaLore

Researchers from Caltech, Meta FAIR, and NVIDIA AI have developed Tensor-GaLore, a method for efficiently training neural networks with higher-order tensor weights. It extends GaLore (Gradient Low-Rank Projection) from matrices to tensors: it operates directly in the high-order tensor space and uses tensor factorization to project gradients onto a low-rank subspace during training.

Benefits of Tensor-GaLore

- Memory Efficiency: Reduces memory usage for optimizer states by up to 75%.

- Preservation of Structure: Works on gradients in their native tensor form instead of flattening them into matrices, so essential multidimensional information stays intact.

- Implicit Regularization: Helps avoid overfitting and supports smoother optimization.

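- Scalability: Techniques such as per-layer weight updates, which apply each layer's update during backpropagation instead of holding every layer's gradient at once, further reduce peak memory usage.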

Technical Insights

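Tensor-GaLore applies Tucker decomposition to gradient tensors, factoring each one into a small core tensor and a set of orthogonal factor matrices, one per tensor mode. The optimizer then works in the compact core space. The authors support the approach with convergence and stability analysis and report that it often outperforms standard baselines.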
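To make the Tucker step concrete, here is a minimal NumPy sketch that projects a gradient tensor onto a Tucker core, runs an Adam-style update in the smaller core space, and projects the update back to the weight's original shape. It is an illustration under stated assumptions, not the authors' implementation: the factor matrices come from a truncated higher-order SVD (HOSVD) as a simple stand-in for whatever decomposition routine the paper uses, and the helper names (`tucker_factors`, `project`, `project_back`, `CoreAdam`), the tensor shape, and the ranks are all hypothetical. For brevity the factors are computed once; GaLore-style methods refresh the projection only periodically rather than at every step.

```python
import numpy as np


def unfold(t, mode):
    """Mode-n unfolding: move axis `mode` to the front and flatten the rest."""
    return np.moveaxis(t, mode, 0).reshape(t.shape[mode], -1)


def mode_product(t, m, mode):
    """n-mode product t x_mode m: contract axis `mode` of t with the columns of m."""
    return np.moveaxis(np.tensordot(m, t, axes=(1, mode)), 0, mode)


def tucker_factors(grad, ranks):
    """Truncated HOSVD (assumed stand-in): top-r left singular vectors per mode."""
    factors = []
    for mode, r in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(grad, mode), full_matrices=False)
        factors.append(u[:, :r])  # orthonormal columns, shape (I_mode, r)
    return factors


def project(grad, factors):
    """Core tensor: G x_1 U1^T x_2 U2^T ... (compresses every mode)."""
    core = grad
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)
    return core


def project_back(core, factors):
    """Full-size tensor: C x_1 U1 x_2 U2 ... (expands every mode)."""
    full = core
    for mode, u in enumerate(factors):
        full = mode_product(full, u, mode)
    return full


class CoreAdam:
    """Adam whose moment estimates live in the small Tucker core space."""

    def __init__(self, core_shape, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.m = np.zeros(core_shape)
        self.v = np.zeros(core_shape)
        self.t = 0
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps

    def step(self, core_grad):
        """Return the bias-corrected Adam update for one step, in core space."""
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * core_grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * core_grad**2
        m_hat = self.m / (1 - self.beta1**self.t)
        v_hat = self.v / (1 - self.beta2**self.t)
        return self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Toy 4th-order weight (hypothetical shape and ranks).
rng = np.random.default_rng(0)
shape, ranks = (16, 16, 8, 8), (8, 8, 4, 4)
weight = rng.standard_normal(shape)
grad = rng.standard_normal(shape)  # stand-in for a real backprop gradient

factors = tucker_factors(grad, ranks)
opt = CoreAdam(ranks)
core_grad = project(grad, factors)
weight -= project_back(opt.step(core_grad), factors)  # update in original shape

print(grad.size, opt.m.size)  # prints "16384 1024"
```

Only the core-sized moments `m` and `v` are stored, 1,024 entries each instead of 16,384 for this toy shape; the orthogonal factor matrices add a small overhead of 16×8 + 16×8 + 8×4 + 8×4 = 320 entries. Because the decomposition acts on each mode separately, the gradient is never flattened into a matrix.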

Results and Performance

In the authors' experiments on several PDE tasks, Tensor-GaLore achieved the following results:

- Navier-Stokes Equations: Reduced optimizer memory usage by 76% while maintaining performance.

- Darcy Flow Problem: Achieved a 48% improvement in test loss with significant memory savings.

- Electromagnetic Wave Propagation: Improved test accuracy by 11% and reduced memory consumption.

Conclusion

Tensor-GaLore presents a practical solution for memory-efficient neural network training using higher-order tensor weights. Its ability to retain multidimensional relationships makes it a valuable tool for scientific computing and beyond. With demonstrated success in various applications, it paves the way for more efficient AI-driven research.

Explore More

Check out the Paper for detailed insights.