The Challenge of LLMs in Handling Long-context Inputs

Large language models (LLMs) such as GPT-3.5 Turbo and Mistral 7B struggle to retrieve information accurately and to sustain their reasoning over long inputs. This limits their usefulness in tasks that require processing and reasoning over long passages, such as multi-document question answering (MDQA) and flexible-length question answering (FLenQA).

Enhancing LLMs’ Performance in Long-context Settings

Current methods for improving LLM performance in long-context settings typically involve finetuning on real-world datasets. However, these datasets often include outdated or irrelevant information, which can lead to inaccuracies. LLMs also tend to exhibit “lost-in-the-middle” behavior: performance is best when the relevant information appears at the beginning or end of the input context and deteriorates when it appears in the middle.
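
One way to make this positional effect measurable is to group evaluation results by where the relevant document sat in the context and compute accuracy per position. The sketch below is illustrative only: the function name `accuracy_by_position` and the record format are assumptions, and the toy records are made up rather than taken from the study.

```python
from collections import defaultdict


def accuracy_by_position(records):
    """Return accuracy per gold-document position.

    `records` is an iterable of (gold_position, is_correct) pairs, a
    hypothetical format chosen for this sketch.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for position, is_correct in records:
        totals[position] += 1
        hits[position] += int(is_correct)
    # Sort positions so the result reads from the start of the context to the end.
    return {pos: hits[pos] / totals[pos] for pos in sorted(totals)}


# Made-up toy records for illustration only, not results from the study.
toy_records = [(1, True), (1, True), (10, False), (10, True), (20, True), (20, True)]
print(accuracy_by_position(toy_records))
```

A dip in the middle positions relative to the first and last ones is the pattern that “lost-in-the-middle” refers to.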

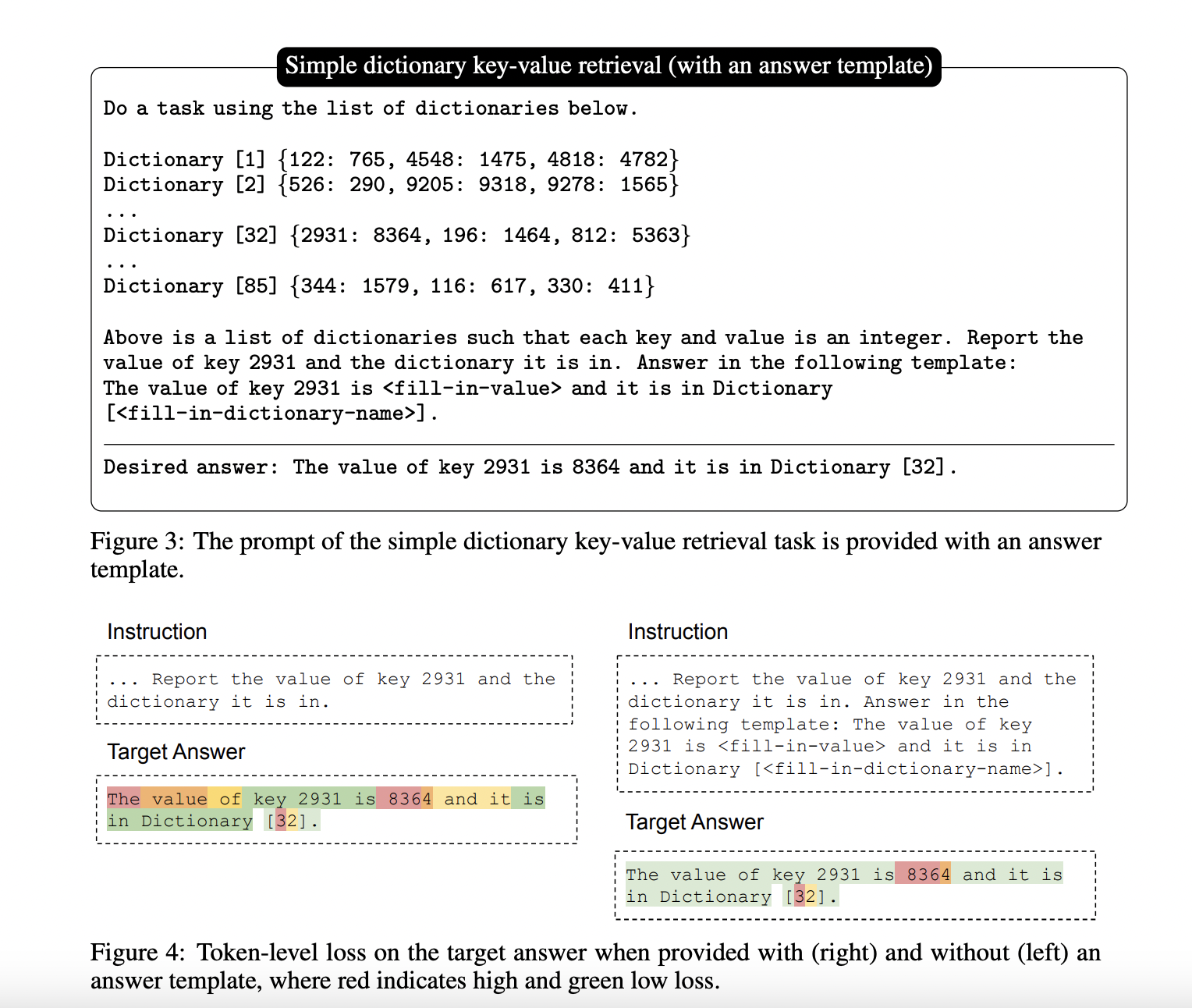

The Proposed Solution: Synthetic Dataset Finetuning

A team of researchers from the University of Wisconsin-Madison proposes a finetuning approach built on a carefully designed synthetic dataset to address these challenges. The dataset consists of numerical key-value retrieval tasks designed to improve how LLMs handle long contexts. Because synthetic data avoids the pitfalls of outdated or irrelevant information, the researchers aim to improve LLMs’ information retrieval and reasoning capabilities without introducing hallucinations.
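
To give a rough sense of what a numerical key-value retrieval task can look like, here is a minimal sketch that builds one synthetic example. The prompt wording, the function name `make_kv_example`, and the parameters `num_pairs` and `key_digits` are all assumptions made for illustration; the article does not specify the researchers' exact data format.

```python
import random


def make_kv_example(num_pairs=20, key_digits=5, seed=None):
    """Build one synthetic key-value retrieval prompt and its answer.

    Hypothetical format for illustration; not the researchers' exact template.
    """
    rng = random.Random(seed)
    low, high = 10 ** (key_digits - 1), 10 ** key_digits - 1
    # Sample distinct keys so the target key matches exactly one value.
    keys = rng.sample(range(low, high + 1), num_pairs)
    values = [rng.randint(low, high) for _ in keys]
    target_index = rng.randrange(num_pairs)
    target_key = keys[target_index]
    pair_lines = [f"{k}: {v}" for k, v in zip(keys, values)]
    prompt = (
        "Below is a list of key-value pairs, one per line.\n"
        + "\n".join(pair_lines)
        + f"\nWhat is the value associated with key {target_key}?"
    )
    return {
        "prompt": prompt,
        "answer": str(values[target_index]),
        "position": target_index,  # where the target pair sits in the list
    }


example = make_kv_example(num_pairs=5, seed=0)
print(example["prompt"])
print("Expected answer:", example["answer"])
```

Because the keys and values are random integers, an example like this carries no factual content that could go out of date, and the target's position can be varied freely across the context.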

Impact and Results

Experiments demonstrate that this approach significantly improves LLM performance on long-context tasks. For example, finetuning GPT-3.5 Turbo on the synthetic data yielded a 10.5% improvement on the 20-document MDQA benchmark when the relevant document was at the tenth position. The method also mitigates the “lost-in-the-middle” phenomenon and reduces primacy bias, leading to more accurate information retrieval across the entire input context.

The Potential of Synthetic Datasets in Overcoming Limitations

The study shows that finetuning LLMs on synthetic data can significantly improve their performance in long-context settings. By addressing the “lost-in-the-middle” phenomenon and reducing primacy bias, the proposed method improves on traditional finetuning techniques. This research highlights the potential of synthetic datasets to overcome the limitations of real-world data, paving the way for more effective and reliable LLMs for extensive textual information.
