The Value of HW-GPT-Bench: Optimizing Language Model Efficiency

Practical Solutions and Benefits

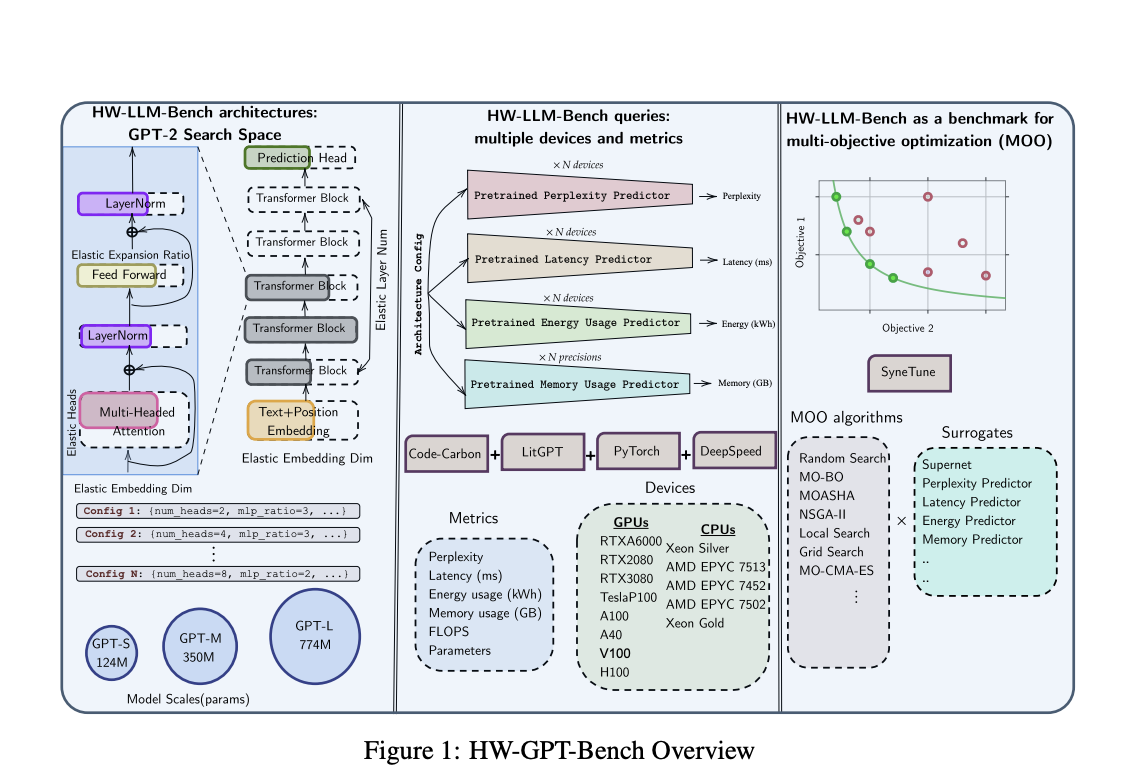

Large language models (LLMs) excel at complex reasoning and language understanding, but they are expensive to train and to run. HW-GPT-Bench addresses this by benchmarking LLM architectures against hardware metrics. This helps practitioners pick models that use their hardware efficiently, which brings practical benefits for businesses.

Optimizing Inference Efficiency

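Efficient inference is essential for using LLMs more widely. Two strategies improve inference efficiency. Pruning removes redundant weights, attention heads, or layers. KV-cache optimization reduces the memory spent storing attention keys and values during text generation. Together they lower serving costs and make a wider range of applications feasible.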
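As a rough illustration of why the KV cache matters, the sketch below estimates how much memory a decoder-only transformer spends on cached keys and values. It assumes the standard layout: one key tensor and one value tensor per layer, each of shape batch × heads × sequence length × head dimension, stored in fp16. The GPT-2-small-like shape is only an example, and `kv_cache_bytes` is a hypothetical helper, not part of HW-GPT-Bench.

```python
def kv_cache_bytes(n_layers: int, n_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bytes_per_elem: int = 2) -> int:
    """Estimate KV-cache memory for a decoder-only transformer.

    Assumes one key and one value tensor per layer, each of shape
    (batch_size, n_heads, seq_len, head_dim); bytes_per_elem=2 models fp16.
    """
    per_tensor = batch_size * n_heads * seq_len * head_dim * bytes_per_elem
    return 2 * n_layers * per_tensor  # factor 2: one key and one value tensor


# Illustrative GPT-2-small-like shape: 12 layers, 12 heads, head_dim 64.
full = kv_cache_bytes(n_layers=12, n_heads=12, head_dim=64, seq_len=1024)
print(full / 2**20, "MiB")  # 36.0 MiB

# Keeping only 6 of the 12 heads per layer (e.g. after head pruning)
# halves the cache under this model.
pruned = kv_cache_bytes(n_layers=12, n_heads=6, head_dim=64, seq_len=1024)
print(pruned / 2**20, "MiB")  # 18.0 MiB
```

The cache grows linearly with sequence length, batch size, and the number of heads and layers. Structural pruning of heads or layers therefore shrinks it proportionally, which is one reason the two techniques are often discussed together.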

Hardware-Aware Benchmarking

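HW-GPT-Bench evaluates LLM architectures on hardware metrics such as latency, energy use, and memory, in addition to model quality. It does not train every architecture from scratch. Instead, it relies on weight sharing: one large network is trained once, and smaller architectures reuse subsets of its weights. This makes it affordable to cover many model configurations. The benchmark helps identify good configurations for each target device and saves time and compute when developing and comparing search algorithms.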
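Choosing the "best" configuration usually means trading model quality against a hardware cost. No single model wins on both. The sketch below shows one standard way to frame that choice. It keeps only the Pareto-optimal candidates with respect to perplexity and latency on a single device. The candidate names and numbers are invented for illustration, and `Candidate`, `dominates`, and `pareto_front` are hypothetical helpers, not the HW-GPT-Bench API. In practice the metrics would come from measurements or predictors for each device.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    perplexity: float  # lower is better
    latency_ms: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.perplexity <= b.perplexity and a.latency_ms <= b.latency_ms
    strictly_better = a.perplexity < b.perplexity or a.latency_ms < b.latency_ms
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Return the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(o, c) for o in candidates if o is not c)]


# Hypothetical numbers for one device; not measurements from HW-GPT-Bench.
candidates = [
    Candidate("small", perplexity=30.0, latency_ms=5.0),
    Candidate("medium", perplexity=24.0, latency_ms=9.0),
    Candidate("wide-shallow", perplexity=26.0, latency_ms=12.0),
    Candidate("large", perplexity=20.0, latency_ms=15.0),
]
for c in pareto_front(candidates):
    print(c.name)  # prints small, medium, large (one per line)
```

Here "wide-shallow" is dropped because "medium" is both more accurate and faster. The same filter extends to more objectives, such as energy or memory, by adding fields to the dominance check. Running it per device can yield a different front on each device, which is why hardware-aware comparisons matter.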

Environmental and Economic Impact

HW-GPT-Bench makes energy consumption an explicit criterion when comparing LLM configurations. This helps identify models that use less energy and supports more environmentally friendly AI. Lower energy use and hardware requirements also reduce operating costs for organizations deploying AI at scale, particularly in data-intensive industries.

Long-Term Goals

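As future work, the team plans three directions aimed at further improving hardware efficiency. First, they will explore quantization methods. Second, they will build surrogate models for larger LLMs; these are cheap predictors of a model's quality and hardware metrics. Third, they will combine neural architecture search (NAS) with pruning strategies.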
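The text names quantization only as future work, without a specific scheme. As a rough illustration of the idea, the sketch below implements plain symmetric per-tensor int8 quantization, which is one common scheme among many. It is an assumption chosen for illustration, not the method the HW-GPT-Bench team plans to use, and `quantize_int8` and `dequantize` are hypothetical helpers.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 2, 100]
```

Dequantizing returns `scale * q`. Each value differs from the original weight by at most half a scale step, here about 0.005. Storage drops from 32-bit floats to 8-bit integers plus one scale factor.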