Understanding Vision Models and Their Importance

Vision models let machines understand and analyze visual data. They are central to tasks such as image classification, object detection, and image segmentation. Models such as convolutional neural networks (CNNs) and vision transformers learn, through training, to turn raw image pixels into meaningful features. Training the first layer well matters in particular, because it maps raw pixels to the features that every deeper layer builds on.

Challenges in Training Vision Models

A significant challenge during training is that image properties such as brightness and contrast affect learning unequally. In the first layer, the weight gradient is the product of the gradient on the layer's activations and the input pixel values. As a result, bright or high-contrast images cause large changes in the weights, while low-contrast images have much less effect. This imbalance can slow down training. Ideally, every image should contribute fairly to the learning process, regardless of its brightness or contrast.
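The dependence on pixel values follows directly from differentiating a linear first layer. The NumPy sketch below is an illustration, not code from the TrAct work: it models the first layer as z = W x acting on one flattened image patch, and the patch size, channel count, and upstream gradient `grad_z` are made-up assumptions. Holding `grad_z` fixed isolates the direct effect of the input: multiplying every pixel by 4 multiplies the weight gradient by exactly 4.

```python
import numpy as np

# Hypothetical sizes: a flattened 3x3 RGB patch (27 values) mapped to 16 channels.
D_IN, D_OUT = 27, 16

rng = np.random.default_rng(0)
patch = rng.uniform(0.0, 1.0, size=(D_IN, 1))   # pixel values in [0, 1]
grad_z = rng.normal(size=(D_OUT, 1))            # assumed upstream gradient dL/dz, held fixed

def first_layer_weight_grad(grad_z, x):
    """dL/dW for a linear first layer z = W @ x: the outer product dL/dz x^T."""
    return grad_z @ x.T

# Same image content at two intensity levels: scaling all pixels by 4
# raises both brightness and contrast.
dim_patch = 0.25 * patch
bright_patch = 1.0 * patch

g_dim = first_layer_weight_grad(grad_z, dim_patch)
g_bright = first_layer_weight_grad(grad_z, bright_patch)

ratio = np.linalg.norm(g_bright) / np.linalg.norm(g_dim)
print(f"bright/dim gradient norm ratio: {ratio:.2f}")  # 4.00
```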

Current Solutions and Their Limitations

Traditional solutions include preprocessing the inputs or changing the model design, for example with batch normalization or weight normalization. These methods help, but they do not address the core problem: the uneven effect of different inputs on first-layer gradients. They can also complicate the model and make it less compatible with existing systems.

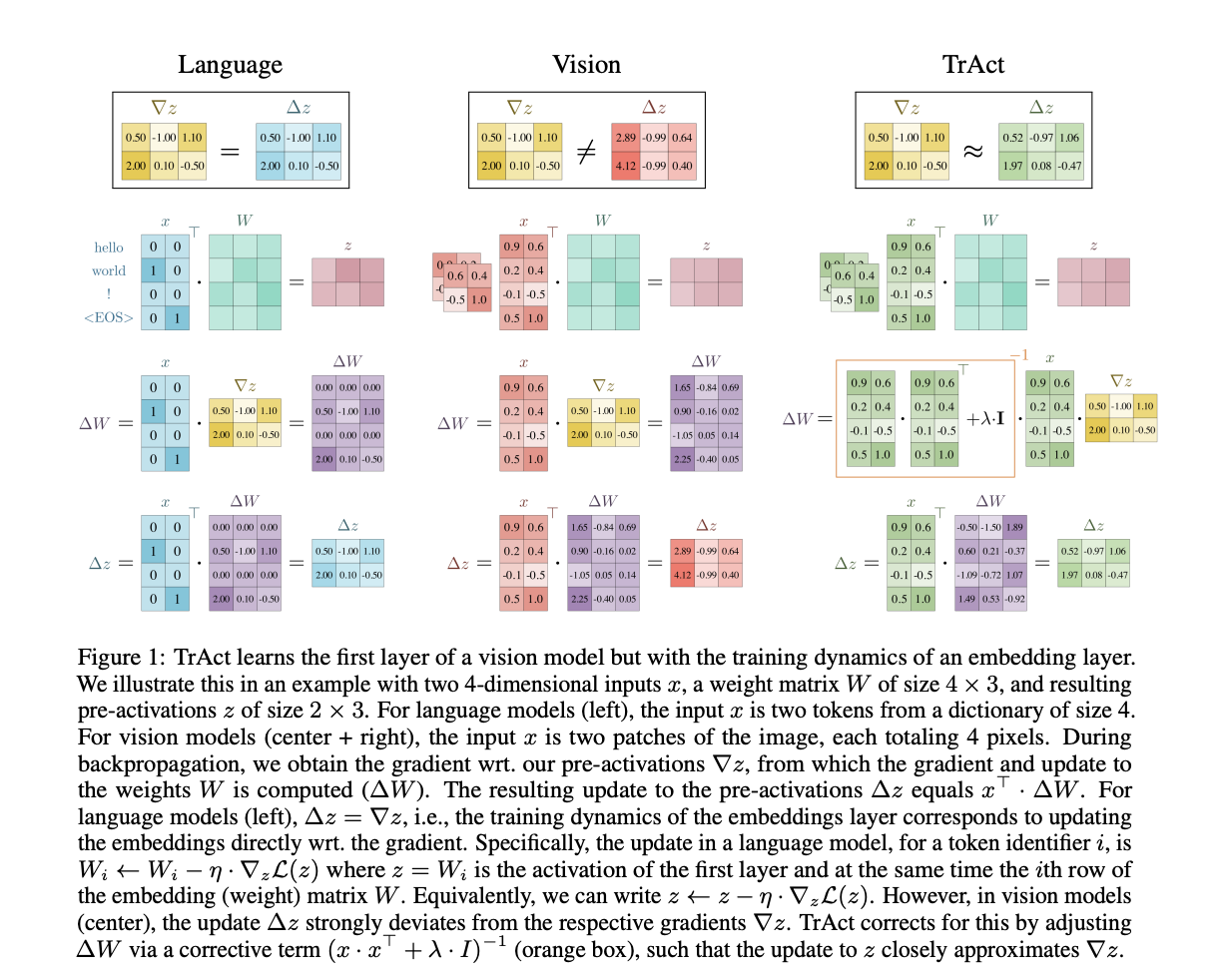

Introducing TrAct: An Innovative Approach

Researchers from Stanford University and the University of Salzburg have developed TrAct (Training Activations), a technique that improves how the first layer of a vision model is trained. Unlike the approaches above, TrAct leaves the model architecture unchanged and modifies only the training procedure. Its goal is to make first-layer updates much less dependent on input properties such as brightness and contrast.

How TrAct Works

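TrAct replaces the usual first-layer weight update with a simple two-step process:

- Gradient Descent: It first computes the gradient of the loss with respect to the first-layer activations and takes a gradient step on those activations, producing an activation proposal.

- Weight Update: It then updates the first-layer weights so that the layer's output moves as close as possible to this proposal.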
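The two steps can be written down concretely for a linear first layer. The NumPy sketch below is not the authors' implementation. It assumes the first layer is a matrix multiply over flattened input patches (a convolution can be rewritten this way), and it assumes the weight update is a least-squares fit to the activation proposal plus a penalty, weighted by λ (`lam` in the code), that keeps the new weights close to the old ones. The function name `tract_first_layer_update`, the objective's normalization, and all sizes and values are illustrative assumptions.

```python
import numpy as np

def tract_first_layer_update(W, X, grad_Z, lr, lam):
    """Sketch of a TrAct-style update for a linear first layer Z = W @ X.

    W:      (d_out, d_in) current first-layer weights
    X:      (d_in, b) flattened input patches, one per column
    grad_Z: (d_out, b) gradient of the loss w.r.t. the activations Z
    lr:     step size for the activation proposal
    lam:    weight of the assumed penalty keeping W_new close to W (lam > 0)
    """
    d_in, b = X.shape
    # Step 1: gradient descent on the activations gives the activation proposal.
    Z_target = W @ X - lr * grad_Z
    # Step 2 (assumed objective): choose W_new to minimize
    #   (1/b) * ||W_new @ X - Z_target||^2 + lam * ||W_new - W||^2.
    # Setting its gradient to zero gives W_new @ A = C with:
    A = X @ X.T / b + lam * np.eye(d_in)   # (d_in, d_in), symmetric positive definite
    C = Z_target @ X.T / b + lam * W       # (d_out, d_in)
    # A is symmetric, so W_new = C @ inv(A) = solve(A, C.T).T (no explicit inverse).
    return np.linalg.solve(A, C.T).T


# Usage with hypothetical sizes: 3x3 RGB patches (27 values), 8 output channels.
rng = np.random.default_rng(0)
d_in, d_out, b = 27, 8, 64
W = rng.normal(scale=0.1, size=(d_out, d_in))
X = rng.uniform(0.0, 1.0, size=(d_in, b))
grad_Z = rng.normal(size=(d_out, b))       # stand-in for the backpropagated gradient

W_new = tract_first_layer_update(W, X, grad_Z, lr=0.1, lam=0.1)

# The new weights bring the layer's output closer to the proposal.
Z_target = W @ X - 0.1 * grad_Z
before = np.linalg.norm(W @ X - Z_target)
after = np.linalg.norm(W_new @ X - Z_target)
print(after < before)  # True
```

The only extra work compared with an ordinary gradient step is forming and solving a d_in × d_in system (27 × 27 here), which is typically small next to the cost of the rest of the network. In a real training loop, `grad_Z` would come from backpropagation through the remaining layers.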

This approach is computationally efficient and introduces a single hyperparameter, λ, that balances how strongly the update depends on the input against the size of the gradient step. According to the researchers, the default value works well across many models, so TrAct can be adopted without major changes to existing training setups.
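The role of λ is easiest to see in closed form. The derivation below is a sketch under stated assumptions, not the paper's exact formulation. It treats the first layer as Z = WX with the b input patches of a batch as the columns of X, writes G = ∂L/∂Z for the gradient on the activations and η for the step size, and assumes the weight update minimizes a least-squares fit to the proposal plus a penalty λ‖W − W_t‖² that keeps the weights near their current value. Normalization constants may differ from the authors' version.

```latex
\begin{aligned}
Z^\ast &= W_t X - \eta\, G
  && \text{step 1: activation proposal} \\
W_{t+1} &= \operatorname*{arg\,min}_{W}\ \tfrac{1}{b}\,\lVert W X - Z^\ast \rVert_F^2
           + \lambda\, \lVert W - W_t \rVert_F^2
  && \text{step 2: fit the proposal} \\
        &= W_t - \tfrac{\eta}{b}\, G X^\top \bigl(\tfrac{1}{b}\, X X^\top + \lambda I\bigr)^{-1}
  && \text{closed form}
\end{aligned}
```

For large λ the bracketed matrix is dominated by λI, so W_{t+1} ≈ W_t − (η/(λb)) G Xᵀ. Since G Xᵀ is the ordinary first-layer weight gradient, this is plain gradient descent, whose update size grows with pixel intensity. As λ → 0, and assuming X Xᵀ is invertible, the change in activations becomes (W_{t+1} − W_t) X = −η G Xᵀ(X Xᵀ)⁻¹X. For a fixed G, replacing X by cX for any nonzero constant c leaves this expression unchanged, so the update no longer grows with image intensity. Intermediate values of λ interpolate between these two regimes.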

Results of Using TrAct

In the researchers' experiments, TrAct showed several benefits:

- Faster Training: On CIFAR-10, for instance, a ResNet-18 trained with TrAct for 100 epochs matched the accuracy of standard training for 400 epochs.

- Improved Accuracy: On CIFAR-100, TrAct improved accuracy by 0.49% (top-1) and 0.23% (top-5) on average across many model architectures.

- Efficiency on Large Models: Even for larger models such as vision transformers, TrAct added only minimal runtime overhead.
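The top-1 and top-5 results refer to standard classification metrics: under top-k accuracy, a prediction counts as correct if the true label is among the k highest-scoring classes. The minimal NumPy sketch below shows how such a metric can be computed; the helper name `top_k_accuracy` and the toy scores are hypothetical and not taken from the TrAct experiments.

```python
import numpy as np

def top_k_accuracy(logits, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    logits: (n_samples, n_classes) array of class scores
    labels: (n_samples,) array of integer class indices
    """
    # Indices of the k largest scores per row (order within the top k does not matter).
    top_k = np.argpartition(logits, -k, axis=1)[:, -k:]
    hits = (top_k == labels[:, None]).any(axis=1)
    return hits.mean()

# Toy example: 3 samples, 4 classes.
logits = np.array([
    [0.1, 0.7, 0.1, 0.1],    # true class 1 is ranked first
    [0.4, 0.3, 0.2, 0.1],    # true class 1 is ranked second
    [0.5, 0.3, 0.15, 0.05],  # true class 3 is ranked last
])
labels = np.array([1, 1, 3])

print(top_k_accuracy(logits, labels, k=1))  # 1 of 3 samples is a top-1 hit
print(top_k_accuracy(logits, labels, k=2))  # 2 of 3 samples are top-2 hits
```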

Benefits of Adopting TrAct

TrAct speeds up training and improves accuracy without requiring changes to the model architecture. In the reported experiments, it worked across different datasets, model types, and training setups.

Take Action with AI Solutions

If you want to transform your company with AI and stay ahead of the competition:

- Identify Automation Opportunities: Find customer interactions that can benefit from AI.

- Define KPIs: Make sure your AI initiatives have measurable outcomes.

- Select AI Solutions: Choose tools that meet your needs and can be customized.

- Implement Gradually: Start small, gather data, and expand wisely.
