Introduction to ReasonFlux

Large language models (LLMs) handle many problem-solving tasks well, but they still struggle with complex tasks such as advanced math and coding, which require careful planning and many detailed steps. Current methods for improving accuracy on these tasks are often computationally costly and inflexible. ReasonFlux, a new framework, addresses these challenges by changing how LLMs plan and execute reasoning steps.

Current Approaches and Their Limitations

Recent methods to enhance LLM reasoning can be divided into two types: deliberate search and reward-guided methods. Techniques like Tree of Thoughts (ToT) help LLMs explore different reasoning paths, while Monte Carlo Tree Search (MCTS) breaks problems into manageable steps. However, these methods can be inefficient: they demand heavy computation and depend on manually designed search procedures. For example, MCTS can need to evaluate thousands of steps, which makes it impractical for real-world use.
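To give a rough sense of why step-level search reaches "thousands of steps" so quickly, the sketch below counts the nodes in a fully expanded search tree. The branching factor of 4 and the depths are illustrative assumptions, not figures from the ReasonFlux paper, and a real MCTS run samples only part of such a tree; the point is the growth rate.

```python
def tree_nodes(branching: int, depth: int) -> int:
    """Count the non-root nodes of a full tree with the given branching factor and depth."""
    return sum(branching ** level for level in range(1, depth + 1))


# Hypothetical setting: 4 candidate next steps at every point in the solution.
for depth in (2, 4, 6):
    print(f"depth={depth}: {tree_nodes(branching=4, depth=depth)} candidate steps")
```

Under these assumptions, a six-step solution already implies several thousand candidate steps, which is the scaling problem that planning over whole strategies is meant to avoid.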

Additionally, methods like Buffer of Thoughts (BoT) reuse stored problem-solving templates but struggle to combine them adaptively, which limits their effectiveness on complex problems.

What is ReasonFlux?

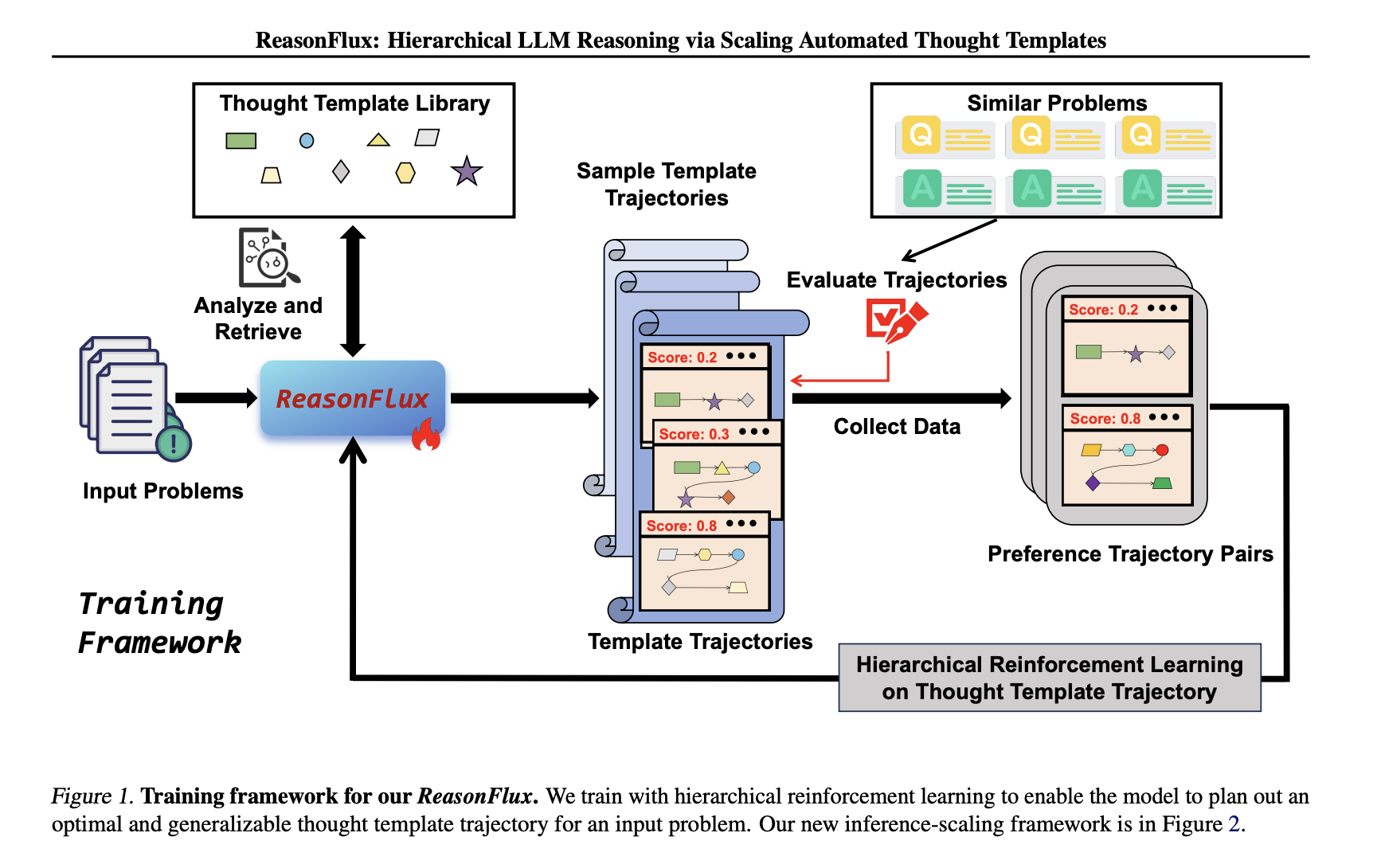

ReasonFlux combines a library of problem-solving templates with hierarchical reinforcement learning (HRL) to improve reasoning. Instead of focusing on individual steps, it optimizes sequences of strategies from a structured knowledge base, making it easier to adapt to different problems.

Main Components of ReasonFlux

- Structured Template Library: A collection of 500 templates that outline problem-solving strategies, making it easy to retrieve relevant information.

- Hierarchical Reinforcement Learning:

  - Structure-Based Fine-Tuning: The base LLM is trained to understand when and how to use each template.

  - Template Trajectory Optimization: The model learns to rank template sequences based on their effectiveness, improving its planning skills.

- Adaptive Inference Scaling: ReasonFlux acts as a navigator, adjusting its approach based on the problem’s progress, similar to human problem-solving.
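The three components above can be pictured with a small toy sketch. Everything in it is hypothetical: the `Template` fields, the three sample templates, and the tag-overlap scoring are stand-ins chosen for illustration, whereas ReasonFlux itself relies on a trained LLM and a learned ranking rather than keyword matching.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    """One entry in a hypothetical template library."""
    name: str
    tags: frozenset[str]      # keywords describing when the strategy applies
    steps: tuple[str, ...]    # high-level instructions, not a worked solution


LIBRARY = [
    Template("substitution", frozenset({"equation", "radical", "simplify"}),
             ("introduce a new variable", "rewrite the equation", "solve", "back-substitute")),
    Template("case_analysis", frozenset({"absolute", "inequality", "piecewise"}),
             ("split into cases", "solve each case", "merge the solutions")),
    Template("induction", frozenset({"sequence", "prove", "integer"}),
             ("check the base case", "assume the claim for n", "prove it for n + 1")),
]


def retrieve(problem_tags: set[str], library: list[Template]) -> Template:
    """Return the template whose tags overlap most with the problem's tags."""
    return max(library, key=lambda t: len(t.tags & problem_tags))


def rank_trajectories(candidates: list[tuple[Template, ...]],
                      problem_tags: set[str]) -> list[tuple[Template, ...]]:
    """Order template sequences by a toy score (total tag overlap), best first."""
    def score(trajectory: tuple[Template, ...]) -> int:
        return sum(len(t.tags & problem_tags) for t in trajectory)
    return sorted(candidates, key=score, reverse=True)


def navigate(problem_tags: set[str], library: list[Template],
             max_templates: int = 3) -> list[str]:
    """Pick templates one at a time, re-planning against what is still unaddressed."""
    remaining = set(problem_tags)
    plan: list[str] = []
    while remaining and len(plan) < max_templates:
        best = retrieve(remaining, library)
        covered = best.tags & remaining
        if not covered:          # no template helps with what is left
            break
        plan.append(best.name)
        remaining -= covered     # "progress": these aspects are now handled
    return plan


problem = {"equation", "radical", "absolute"}
print(navigate(problem, LIBRARY))
```

The design point the sketch tries to capture is that planning happens over a short sequence of named strategies rather than over individual reasoning steps, so the space being searched stays small.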

Performance and Results

ReasonFlux was tested on challenging benchmarks like MATH, AIME, and OlympiadBench, outperforming leading models. Key results include:

- 91.2% accuracy on MATH, exceeding OpenAI’s o1-preview by 6.7%.

- 56.7% on AIME 2024, outperforming DeepSeek-V3 by 45%.

- 63.3% on OlympiadBench, a 14% improvement over earlier methods.

Additionally, the template library showed strong adaptability, allowing smaller models to perform better than larger ones. ReasonFlux also required 40% fewer computational steps than MCTS for complex tasks.
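The text above does not say whether the reported gains (6.7%, 45%, 14%) are percentage points or relative improvements, and the two readings imply very different baselines. The following sketch works out the baseline implied by each reading. The function name `implied_baseline` is made up for this example, and the outputs are derived arithmetic, not reported baseline scores.

```python
from __future__ import annotations


def implied_baseline(score: float, gain_pct: float) -> dict[str, float]:
    """Baseline implied by `score` under two readings of 'better by gain_pct%'."""
    return {
        # Reading 1: the gain is a difference in percentage points.
        "absolute_points": round(score - gain_pct, 1),
        # Reading 2: the gain is relative to the baseline score.
        "relative": round(score / (1 + gain_pct / 100), 1),
    }


# Scores and gains as quoted in the results list above.
for name, score, gain in [("MATH", 91.2, 6.7),
                          ("AIME 2024", 56.7, 45.0),
                          ("OlympiadBench", 63.3, 14.0)]:
    print(name, implied_baseline(score, gain))
```

For AIME 2024, for instance, the percentage-point reading implies a baseline of 11.7% while the relative reading implies 39.1%, so the original paper is worth checking before quoting these margins.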

Conclusion

ReasonFlux changes how LLMs tackle complex reasoning by separating high-level strategies from execution. Its structured approach reduces computational costs while improving accuracy and flexibility, and its results suggest that smaller, well-guided models can compete with larger systems. This opens up new possibilities for applying advanced reasoning in fields ranging from education to automated coding.
