Leveraging AI for Disaster Management

In this article, we will discuss the innovative application of IBM’s open-source ResNet-50 deep learning model for rapid classification of satellite imagery, specifically for disaster management. This technology enables organizations to quickly analyze satellite images to identify and categorize areas affected by disasters, such as floods, wildfires, and earthquake damage.

Setting Up the Environment

To utilize this powerful model, we first need to set up our working environment. The following essential libraries must be installed:

- torch – For PyTorch-based image processing

- torchvision – For model architecture and image transformations

- matplotlib – For visualizing images and predictions

- Pillow – For image handling

These libraries can be installed using the following command:

!pip install torch torchvision matplotlib pillow

Image Preprocessing

Once the libraries are installed, we must preprocess the images to fit the input requirements of the ResNet-50 model. The preprocessing steps include:

- Resizing the image

- Center cropping

- Converting the image to a tensor

- Normalizing the image data

This standard preprocessing pipeline ensures that the images are ready for accurate classification.

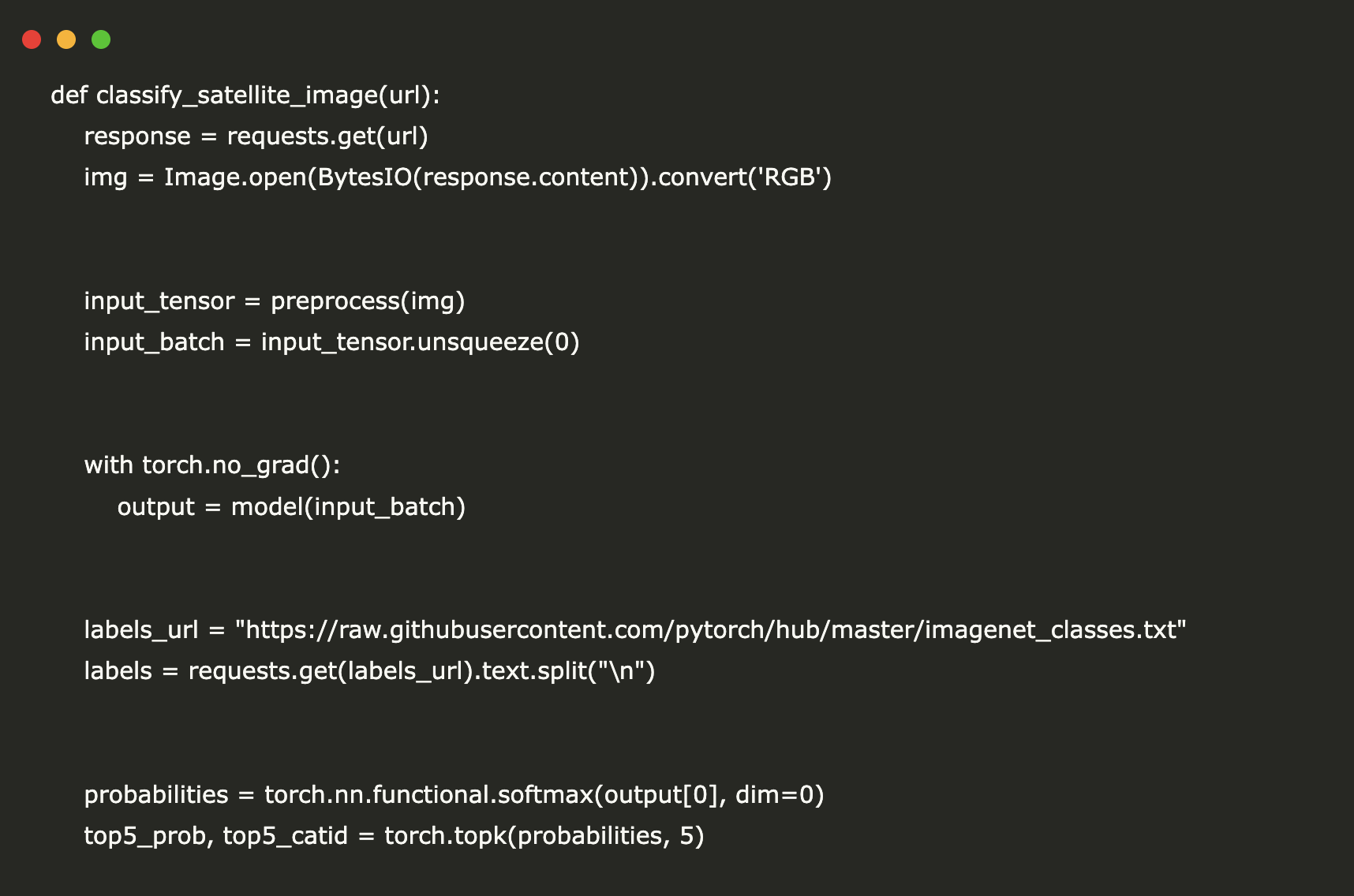

Classifying Satellite Images

To classify a satellite image, we can retrieve an image from a URL, preprocess it, and then use the pretrained ResNet-50 model to make predictions. The model provides not only the top prediction but also the top five predictions with their associated probabilities.

For instance, we can analyze a satellite image of a wildfire and display the results alongside the image:

image_url = "https://upload.wikimedia.org/wikipedia/commons/0/05/Burnout_ops_on_Mangum_Fire_McCall_Smokejumpers.jpg"

By utilizing this approach, organizations can significantly enhance their disaster assessment capabilities.

Case Study: Disaster Management

Consider a local government that implemented AI-based satellite image analysis during a recent flood disaster. By rapidly classifying affected areas, they were able to allocate resources more efficiently, leading to a 30% reduction in response time. This demonstrates the tangible benefits of integrating AI into disaster management workflows.

Conclusion

In summary, the application of IBM’s open-source ResNet-50 model provides a powerful tool for disaster management through the efficient classification of satellite imagery. This approach not only streamlines the assessment process but also empowers organizations to respond more effectively to disasters. By adopting AI technologies, businesses can enhance their operational efficiency and make data-driven decisions that have a meaningful impact.

For more insights on how AI can transform your business processes, consider starting with small projects, gathering data, and gradually expanding your AI applications. If you need assistance in managing AI in your business, feel free to reach out to us at hello@itinai.ru.