The Value of RAGate: Enhancing Conversational AI with Adaptive Knowledge Retrieval

Practical Solutions and Value

The rapid advancement of Large Language Models (LLMs) has significantly improved conversational systems, which can now generate natural, high-quality responses. However, recent studies have identified limitations in using LLMs for conversational tasks, such as a lack of up-to-date knowledge and limited domain adaptability. To address these issues, RAGate takes an adaptive approach: it decides, from the conversation context and relevant inputs, whether a given response needs external knowledge augmentation.

Adaptive Knowledge Retrieval

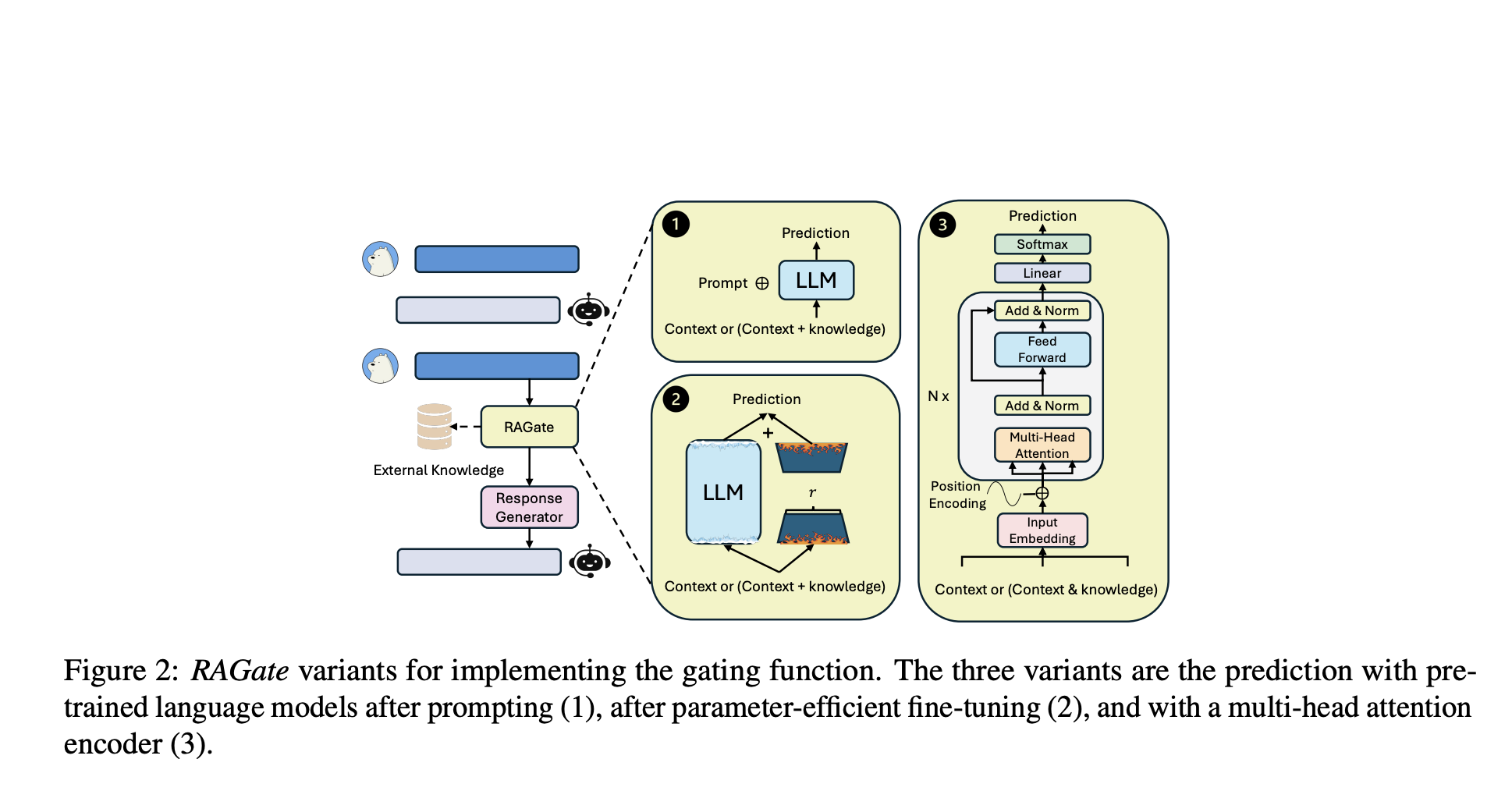

RAGate uses a gating model to determine when external knowledge augmentation is necessary, improving the efficiency and effectiveness of conversational systems. The gate is binary: for each turn, it decides whether external knowledge is passed to the response generator, so that knowledge is added only where it helps keep responses natural, relevant, and contextually appropriate.
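The gating idea can be illustrated with a minimal Python sketch. This is not RAGate's actual implementation: the names `Turn`, `keyword_gate`, `respond`, `fake_retrieve`, and `fake_generate` are hypothetical, and the keyword rule merely stands in for a trained gating model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Turn:
    speaker: str  # "user" or "system"
    text: str


def keyword_gate(context: List[Turn]) -> bool:
    """Hypothetical stand-in for a learned gate.

    Returns True when the latest user turn looks like it needs external
    knowledge. A real gate would be a trained classifier over the context.
    """
    cues = ("recommend", "address", "price", "open", "book")
    last_user = next(
        (t.text.lower() for t in reversed(context) if t.speaker == "user"), ""
    )
    return any(cue in last_user for cue in cues)


def respond(
    context: List[Turn],
    gate: Callable[[List[Turn]], bool],
    retrieve: Callable[[List[Turn]], List[str]],
    generate: Callable[[List[Turn], List[str]], str],
) -> str:
    # Binary decision: call the retriever only when the gate fires.
    knowledge = retrieve(context) if gate(context) else []
    return generate(context, knowledge)


# Toy retriever and generator, assumed purely for illustration.
def fake_retrieve(context: List[Turn]) -> List[str]:
    return ["Cafe Uno, 12 Bridge St, moderate price range"]


def fake_generate(context: List[Turn], knowledge: List[str]) -> str:
    if knowledge:
        return "Based on what I found: " + knowledge[0]
    return "Happy to help!"


if __name__ == "__main__":
    ask = [Turn("user", "Can you recommend a restaurant?")]
    bye = [Turn("user", "Thanks, that's all.")]
    print(respond(ask, keyword_gate, fake_retrieve, fake_generate))
    print(respond(bye, keyword_gate, fake_retrieve, fake_generate))
```

Keeping the gate as a separate callable means the retriever and generator stay unchanged when the gate is swapped for a different model.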

Experimental Results

Extensive experiments on an annotated Task-Oriented Dialogue (TOD) system dataset show that RAGate enables conversational systems to use external knowledge efficiently, at the appropriate conversational turns, and to produce high-quality system responses. The gate steers the system toward confident, informative responses, reducing the likelihood of hallucinated outputs.

Enhancing User Experience

RAGate decides dynamically whether augmentation is needed based on confidence levels, which leads to more accurate and relevant responses and a better overall user experience. By identifying the conversation turns that require augmentation, it keeps responses natural and contextually appropriate.
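One way to picture a confidence-based decision is the Python sketch below. It rests on assumptions that this article does not confirm: confidence is estimated as the geometric-mean token probability of a draft response, and low confidence triggers augmentation. The function names and the `threshold` value are hypothetical.

```python
import math
from typing import Sequence


def mean_token_confidence(token_logprobs: Sequence[float]) -> float:
    """Geometric-mean token probability of a draft response (assumed proxy)."""
    if not token_logprobs:
        raise ValueError("token_logprobs must not be empty")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def needs_augmentation(token_logprobs: Sequence[float], threshold: float = 0.5) -> bool:
    # Assumption: a draft with confidence below the threshold should be
    # regenerated with external knowledge.
    return mean_token_confidence(token_logprobs) < threshold


if __name__ == "__main__":
    confident_draft = [math.log(0.9), math.log(0.8)]
    uncertain_draft = [math.log(0.2), math.log(0.3)]
    print(needs_augmentation(confident_draft))
    print(needs_augmentation(uncertain_draft))
```

The threshold would need tuning on annotated turns; 0.5 here is only a placeholder.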
