Enhancing Efficiency of Large Language Models (LLMs) with Q-Sparse

Practical Solutions and Value

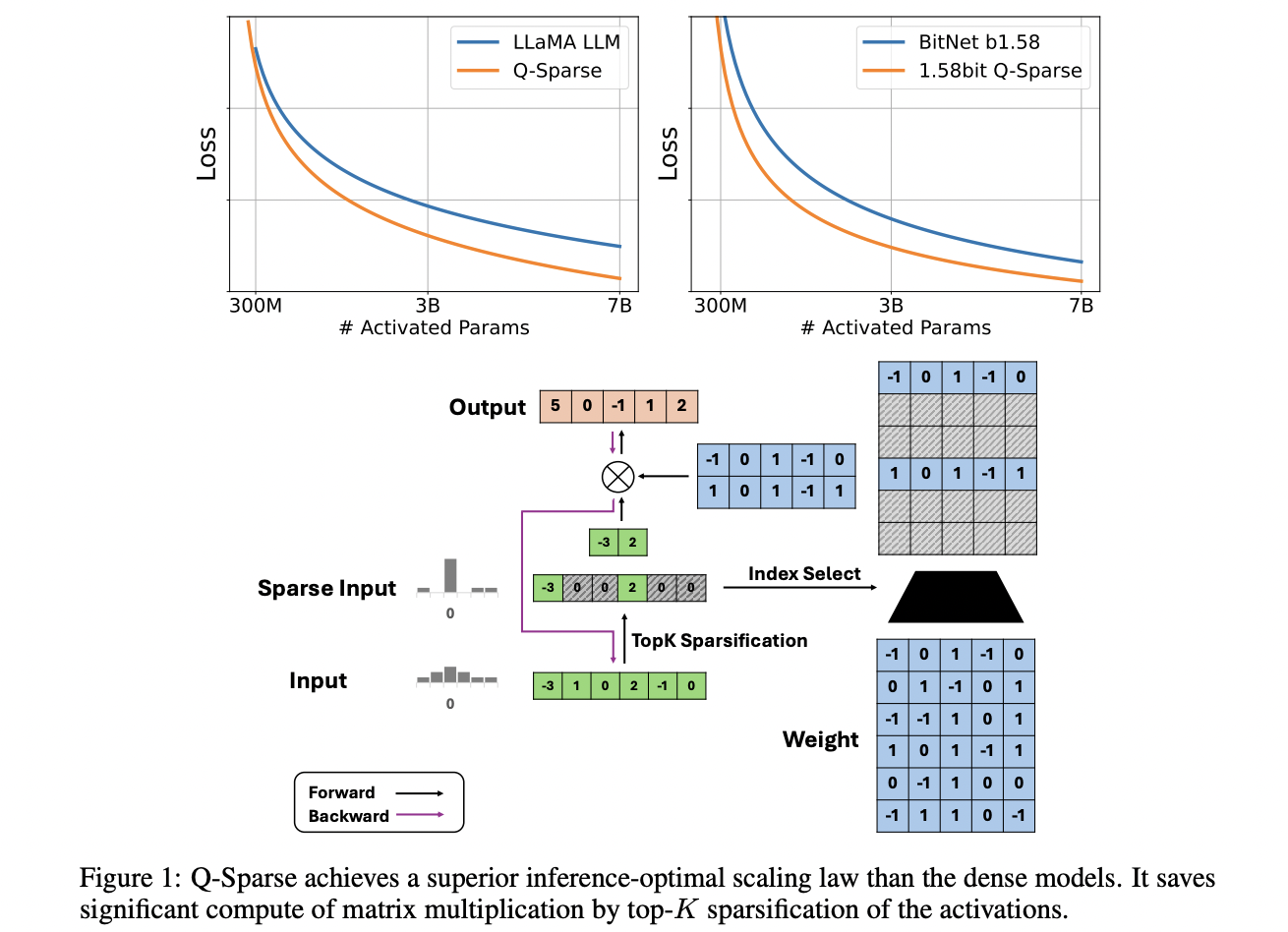

Recent research aims to improve the efficiency of large language models (LLMs) through quantization, pruning, distillation, and improved decoding. Q-Sparse makes activations fully sparse, which significantly improves inference efficiency. It matches the performance of dense baseline LLMs at a lower inference cost, offering a path toward more efficient, cost-effective, and energy-saving LLMs.

Q-Sparse modifies the Transformer architecture to make activations fully sparse, combining top-K sparsification with the straight-through estimator (STE). It supports both full-precision and quantized models, including 1-bit models. A top-K function is applied to the activations that feed each matrix multiplication, keeping only the K largest-magnitude entries and zeroing the rest, which reduces computational cost and memory footprint. Because top-K is not differentiable, the STE passes gradients through it unchanged during training.
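To make the mechanism concrete, here is a minimal pure-Python sketch of a top-K sparsified linear layer with an STE backward pass. It assumes a single token's activation vector and a dense weight matrix of shape (out_features, in_features); the function names are hypothetical illustrations, not the Q-Sparse authors' code, and real implementations operate on batched tensors on GPU.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def topk_mask(x: List[float], k: int) -> List[int]:
    """Return a 0/1 mask keeping the k entries of x with the largest absolute value."""
    # Sort indices by |x_i|, largest first; sorted() is stable, so ties keep index order.
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    keep = set(order[:k])
    return [1 if i in keep else 0 for i in range(len(x))]


def sparse_linear_forward(x: List[float], w: Matrix, k: int) -> Tuple[List[float], List[int], int]:
    """Compute y = (x * mask) @ W^T, touching only the surviving activations.

    Returns the output y, the top-K mask, and the number of multiply-accumulates
    actually performed (a dense layer would need len(w) * len(x)).
    """
    mask = topk_mask(x, k)
    active = [i for i, m in enumerate(mask) if m]
    y: List[float] = []
    macs = 0
    for row in w:
        acc = 0.0
        for i in active:  # zeroed activations are skipped, so their weights are never read
            acc += row[i] * x[i]
            macs += 1
        y.append(acc)
    return y, mask, macs


def sparse_linear_backward_ste(grad_y: List[float], w: Matrix) -> List[float]:
    """Straight-through estimator: treat the top-K mask as identity when backpropagating.

    dL/dx_i = sum_j grad_y[j] * W[j][i] for every i, including masked-out entries,
    so activations pruned in the forward pass still receive a gradient.
    """
    in_features = len(w[0])
    return [sum(g * row[i] for g, row in zip(grad_y, w)) for i in range(in_features)]


if __name__ == "__main__":
    x = [0.1, -2.0, 0.5, 3.0]
    w = [[1.0, 1.0, 1.0, 1.0],
         [0.5, -1.0, 2.0, 0.0]]
    y, mask, macs = sparse_linear_forward(x, w, k=2)
    print(mask, y, macs)  # [0, 1, 0, 1] [1.0, 2.0] 4
    print(sparse_linear_backward_ste([1.0, 1.0], w))  # [1.5, 0.0, 3.0, 1.0]
```

With K = 2 of 4 activations kept, the forward pass performs 4 multiply-accumulates instead of the dense 8, while the STE backward still produces a gradient for every input entry. That is what lets the model learn which activations should survive the top-K selection.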

Studies show that LLM performance scales with model size and training data according to a power law. Q-Sparse is complementary to Mixture-of-Experts (MoE), and adapting it to batch processing is planned to make it more practical. It is effective across various settings and compatible with both full-precision and 1-bit models, making it a promising approach for improving LLM efficiency and sustainability.
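For illustration only, one widely used parametric form of such a power law is the Chinchilla-style fit (Hoffmann et al.), shown below. It is an assumption chosen to show the general shape, not the specific scaling law fitted for Q-Sparse. Here $N$ is the number of model parameters, $D$ the number of training tokens, $E$ the irreducible loss, and $A$, $B$, $\alpha$, $\beta$ constants fitted to experiments:

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
```

Loss falls as either $N$ or $D$ grows, but with diminishing returns governed by the exponents $\alpha$ and $\beta$.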

Q-Sparse works for training from scratch, continued training, and fine-tuning, maintaining efficiency and performance in each setting. The performance gap between sparse and dense models narrows as model size grows, suggesting that, with an appropriate sparsity ratio, sparse models can match or even outperform dense models while using less inference compute.

Join our AI Evolution

If you want to evolve your company with AI, stay competitive, and use Q-Sparse to redefine your work processes, consider connecting with us. We offer AI KPI management advice, ongoing insights into leveraging AI, and solutions for reshaping your sales processes and customer engagement.

Discover how AI can transform the way you work: identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. To get in touch, email us at hello@itinai.com or follow us on Telegram and Twitter.