Value of Q-GaLore in Practical AI Solutions

Efficiently Training Large Language Models (LLMs)

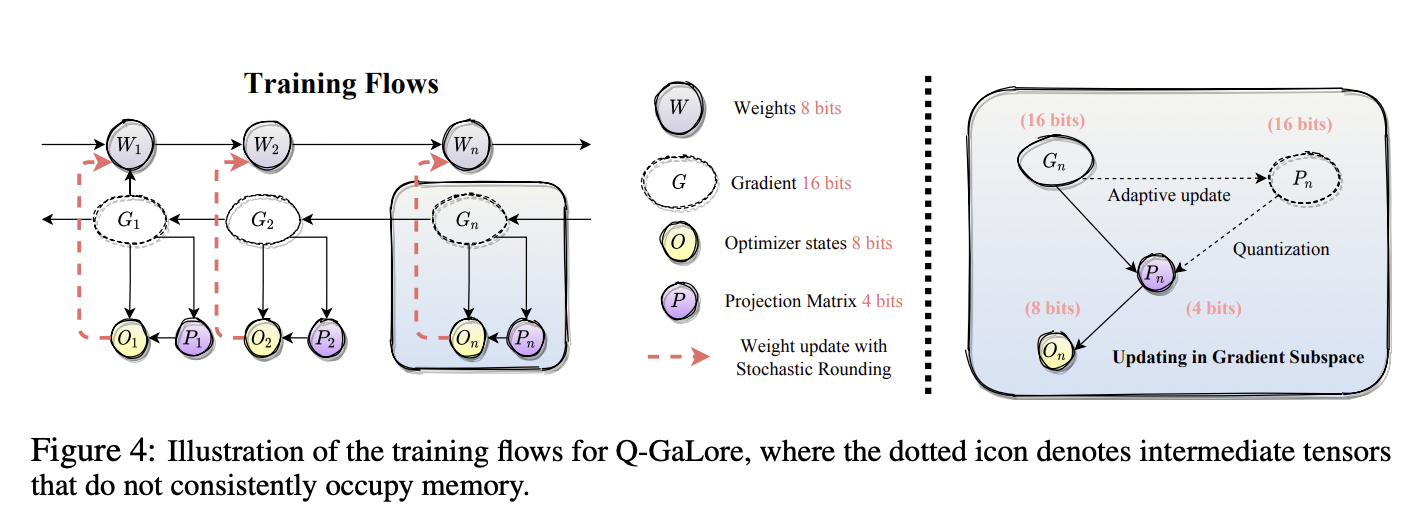

Q-GaLore offers a practical answer to the memory constraints of training large language models. By combining quantization with low-rank gradient projection, it reduces memory consumption during training while achieving competitive performance, which broadens access to powerful language models.
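
To make the combination of low-rank projection and quantization concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the Q-GaLore implementation: the function names, the synthetic gradient, the rank, and the choice of a 4-bit projection matrix are all picked for the example, and the 4-bit codes are stored in an int8 array rather than packed two per byte.

```python
import numpy as np

def quantize_symmetric(x, bits):
    """Uniform symmetric quantization: returns integer codes and a float scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4 bits, 127 for 8 bits
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = np.clip(np.round(x / scale), -qmax, qmax).astype(np.int8)
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return codes.astype(np.float32) * scale

def low_rank_projection(grad, rank):
    """Top-`rank` left singular vectors of the gradient, shape (m, rank)."""
    u, _, _ = np.linalg.svd(grad, full_matrices=False)
    return u[:, :rank]

# Synthetic, approximately low-rank gradient (an assumption for the demo).
rng = np.random.default_rng(0)
m, n, rank = 64, 32, 4
grad = rng.standard_normal((m, rank)) @ rng.standard_normal((rank, n))
grad = (grad + 0.01 * rng.standard_normal((m, n))).astype(np.float32)

proj = low_rank_projection(grad, rank)                      # full-precision projection
proj_codes, proj_scale = quantize_symmetric(proj, bits=4)   # low-bit copy to store
proj_q = dequantize(proj_codes, proj_scale)

low_rank_grad = proj_q.T @ grad        # (rank, n): the size optimizer state would use
approx_grad = proj_q @ low_rank_grad   # projected back to (m, n) for the weight update

rel_error = np.linalg.norm(grad - approx_grad) / np.linalg.norm(grad)
print(f"optimizer-state shape: {low_rank_grad.shape}, relative error: {rel_error:.3f}")
```

The memory saving in this sketch comes from two places: the optimizer would track a `rank x n` matrix instead of an `m x n` one, and the projection matrix itself is kept as low-bit integer codes plus a single scale.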

Practical Implementation and Performance

Q-GaLore has been evaluated in both pre-training and fine-tuning scenarios. In pre-training, it enables a 7B model to be trained from scratch on hardware with only 16 GB of memory. In fine-tuning, it reduces memory consumption by up to 50% compared to LoRA and GaLore while consistently outperforming QLoRA at the same memory cost.
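
A rough back-of-the-envelope calculation shows why a 16 GB budget is tight for a 7B model. The sketch below is only an estimate under simplifying assumptions: "7B" is taken as exactly 7e9 parameters, a gigabyte is 10^9 bytes, the optimizer is assumed to keep two full-size states per parameter (as Adam does), and activations, gradients, and framework overhead are ignored.

```python
def tensor_memory_gb(num_params, bits_per_param):
    """Memory in gigabytes (10**9 bytes) for num_params values of the given bit width."""
    return num_params * bits_per_param / 8 / 1e9

params = 7_000_000_000  # "7B", rounded for the estimate

weights_16bit = tensor_memory_gb(params, 16)   # weights stored in a 16-bit format
adam_states_16bit = 2 * weights_16bit          # two full-rank optimizer states
weights_8bit = tensor_memory_gb(params, 8)     # weights stored as 8-bit integers

print(f"16-bit weights:          {weights_16bit:.1f} GB")
print(f"full-rank Adam states:   {adam_states_16bit:.1f} GB")
print(f"8-bit weights:           {weights_8bit:.1f} GB")
print(f"fits in 16 GB unmodified: {weights_16bit + adam_states_16bit <= 16}")
```

Under these assumptions the 16-bit weights plus full-rank optimizer states alone far exceed 16 GB, which is why shrinking both the weight precision and the optimizer state matters for training on such hardware.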

Application Across Model Sizes

Q-GaLore’s efficiency has been evaluated across model sizes from 60 million to 7 billion parameters, showing significant memory savings while keeping pre-training performance comparable to baseline methods.

Access to Cutting-Edge Language Processing Technologies

Q-GaLore’s practical approach highlights the potential for optimizing large-scale models for more commonly available hardware configurations, making cutting-edge language processing technologies more accessible to a wider audience.
