Challenges in Video Data for Machine Learning

The growing use of video data in machine learning has exposed practical problems with video decoding. Efficiently extracting the frames or sequences a model needs for training is harder than it looks. Traditional decoding pipelines are often slow and resource-intensive, and they are hard to integrate into machine learning systems. The lack of streamlined APIs makes this work harder still for researchers and developers. These problems point to a need for tools that simplify video data handling for tasks such as temporal segmentation, action recognition, and video synthesis.

Introducing torchcodec

PyTorch has launched torchcodec, a library designed to decode videos into PyTorch tensors. This tool connects video processing with deep learning workflows, allowing users to decode, load, and prepare video data directly within PyTorch. By integrating into the PyTorch ecosystem, torchcodec minimizes the need for extra tools and additional processing steps, making video-based machine learning projects easier and faster.
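
A minimal sketch of the basic workflow follows. It assumes torchcodec is installed and that `video.mp4` is a placeholder path. `VideoDecoder`, integer indexing and the `metadata` attribute come from torchcodec's public API, but some method names changed between early releases, so check the documentation for your installed version. The function name `load_first_frame` is illustrative only.

```python
def load_first_frame(path, as_float=True):
    """Decode the first frame of a video file into a PyTorch tensor.

    Returns a (C, H, W) tensor: uint8 in [0, 255], or float32 in [0, 1]
    when as_float=True.
    """
    # Imported lazily so this module can be imported without torchcodec present.
    from torchcodec.decoders import VideoDecoder

    decoder = VideoDecoder(path)  # decodes on CPU by default
    meta = decoder.metadata
    print(f"{meta.num_frames} frames at ~{meta.average_fps} fps")

    frame = decoder[0]  # uint8 tensor, channels-first (C, H, W) by default
    return frame.float() / 255.0 if as_float else frame
```

Calling `load_first_frame("video.mp4")` yields a tensor that can go straight into a model, with no intermediate image files or conversion libraries.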

User-Friendly APIs

torchcodec aims to keep its APIs simple enough for beginners while still serving experts. The same decoding interface applies whether you are working with a single video or loading a large dataset for training.
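
As an illustration of the dataset case, here is a sketch of a map-style dataset that opens one decoder per video and returns a fixed number of evenly spaced frames. `VideoClipDataset` and `evenly_spaced_indices` are hypothetical names, not part of torchcodec; the only torchcodec calls are `VideoDecoder`, `len()` on a decoder, and integer indexing. PyTorch's `DataLoader` accepts any object that implements `__len__` and `__getitem__`, so no base class is needed here.

```python
def evenly_spaced_indices(num_frames, num_samples):
    """Pick num_samples frame indices spread evenly over [0, num_frames - 1]."""
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    if num_samples == 1:
        return [num_frames // 2]  # a single sample: take the middle frame
    step = (num_frames - 1) / (num_samples - 1)
    return [round(i * step) for i in range(num_samples)]


class VideoClipDataset:
    """Hypothetical map-style dataset: item i is a (T, C, H, W) tensor from paths[i]."""

    def __init__(self, paths, frames_per_video=8):
        self.paths = list(paths)
        self.frames_per_video = frames_per_video

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, idx):
        # Lazy imports keep the index helper usable without torch installed.
        import torch
        from torchcodec.decoders import VideoDecoder

        decoder = VideoDecoder(self.paths[idx])
        indices = evenly_spaced_indices(len(decoder), self.frames_per_video)
        # Decode only the selected frames and stack them along a new time axis.
        return torch.stack([decoder[i] for i in indices])
```

Wrapped in `torch.utils.data.DataLoader(VideoClipDataset(paths), batch_size=4)`, each batch has shape (batch, frames, C, H, W), provided all videos share one resolution; otherwise the default collate function cannot stack them.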

Technical Advantages

torchcodec provides sampling methods that make video decoding better suited to machine learning training. It can decode specific frames, sub-sample sequences, and return the results directly as PyTorch tensors. Requesting only the frames a model needs, instead of decoding the whole video up front, speeds up data loading and reduces compute requirements.
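
The index arithmetic behind sub-sampling can be sketched as follows. With a torchcodec `VideoDecoder`, `decoder[i]` returns one frame and a Python slice such as `decoder[start:stop:step]` returns the selected frames as a single batched tensor. The helper `subsample_indices` is hypothetical: it assumes the slice follows standard Python slice semantics and shows which frame indices such a slice would cover, so clip lengths can be checked before any decoding happens.

```python
def subsample_indices(num_frames, start=0, stop=None, step=1):
    """Frame indices covered by decoder[start:stop:step] for a video of num_frames frames."""
    if step <= 0:
        raise ValueError("step must be positive")
    # slice.indices() applies Python's own clamping rules for out-of-range bounds.
    return list(range(*slice(start, stop, step).indices(num_frames)))


def decode_subsampled(path, start=0, stop=None, step=2):
    """Decode every step-th frame in [start, stop) as an (N, C, H, W) uint8 tensor."""
    from torchcodec.decoders import VideoDecoder  # lazy import

    decoder = VideoDecoder(path)
    return decoder[start:stop:step]  # one batched tensor, not a list of frames
```

For a 10-frame video, `subsample_indices(10, step=3)` gives `[0, 3, 6, 9]`, so `decode_subsampled(path, step=3)` would return a tensor with 4 frames along its first dimension.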

Performance Optimization

The library is optimized for decoding on both CPUs and CUDA-enabled GPUs, delivering fast decoding without degrading frame quality. This combination of speed and accuracy is essential when training complex models that need high-quality video inputs.
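
Where decoding runs is chosen through the `device` argument of `VideoDecoder`. The sketch below assumes a torchcodec build with CUDA support and an NVIDIA GPU for the GPU path; note that `torch.cuda.is_available()` only checks PyTorch's view of the GPU, not whether your torchcodec build supports CUDA decoding. The helper `pick_decode_device` is a hypothetical policy that falls back to CPU when no GPU is available.

```python
def pick_decode_device(cuda_available, prefer_gpu=True):
    """Hypothetical policy: the device string to pass to VideoDecoder."""
    return "cuda" if (prefer_gpu and cuda_available) else "cpu"


def open_decoder(path, prefer_gpu=True):
    """Open a torchcodec decoder on the GPU when possible, else on the CPU."""
    import torch
    from torchcodec.decoders import VideoDecoder

    device = pick_decode_device(torch.cuda.is_available(), prefer_gpu)
    # With device="cuda", decoded frames come back as CUDA tensors,
    # so no separate host-to-device copy is needed before the model.
    return VideoDecoder(path, device=device)
```

Keeping the policy in a small pure function makes it easy to force CPU decoding (for example, `prefer_gpu=False` on a shared GPU) without touching the rest of the pipeline.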

Customizable APIs

Users can adjust frame rates, resolution, and sampling intervals, making torchcodec adaptable for various applications like video classification, object tracking, and generative modeling.
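
One way to hit a target frame rate and resolution is sketched below under stated assumptions. The hypothetical helper `timestamps_for_target_fps` computes the times at which to sample, assuming the stream starts at t = 0. Frames are then fetched with `get_frames_played_at`, the timestamp-based batch method in recent torchcodec releases (earlier releases used different names, so check your version), whose result exposes an (N, C, H, W) tensor as `.data`. In this sketch, resizing is done afterwards with `torch.nn.functional.interpolate`, not by torchcodec.

```python
def timestamps_for_target_fps(duration_seconds, target_fps):
    """Timestamps (seconds) that sample [0, duration_seconds) at target_fps."""
    if duration_seconds <= 0 or target_fps <= 0:
        raise ValueError("duration and fps must be positive")
    count = int(duration_seconds * target_fps)
    # Compute each timestamp directly instead of accumulating, to avoid drift.
    return [i / target_fps for i in range(count)]


def decode_at_fps_and_size(path, target_fps, height, width):
    """Decode frames at target_fps and resize them to (height, width)."""
    import torch.nn.functional as F
    from torchcodec.decoders import VideoDecoder

    decoder = VideoDecoder(path)
    # Assumes the container reports a duration and the stream starts at t = 0.
    seconds = timestamps_for_target_fps(decoder.metadata.duration_seconds, target_fps)
    frames = decoder.get_frames_played_at(seconds).data  # (N, C, H, W) uint8
    # interpolate needs floating-point input, so scale to [0, 1] first.
    return F.interpolate(frames.float() / 255.0, size=(height, width),
                         mode="bilinear", align_corners=False)
```

Sampling by timestamp rather than by frame index means the same `target_fps` gives comparable temporal spacing across videos recorded at different native frame rates.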

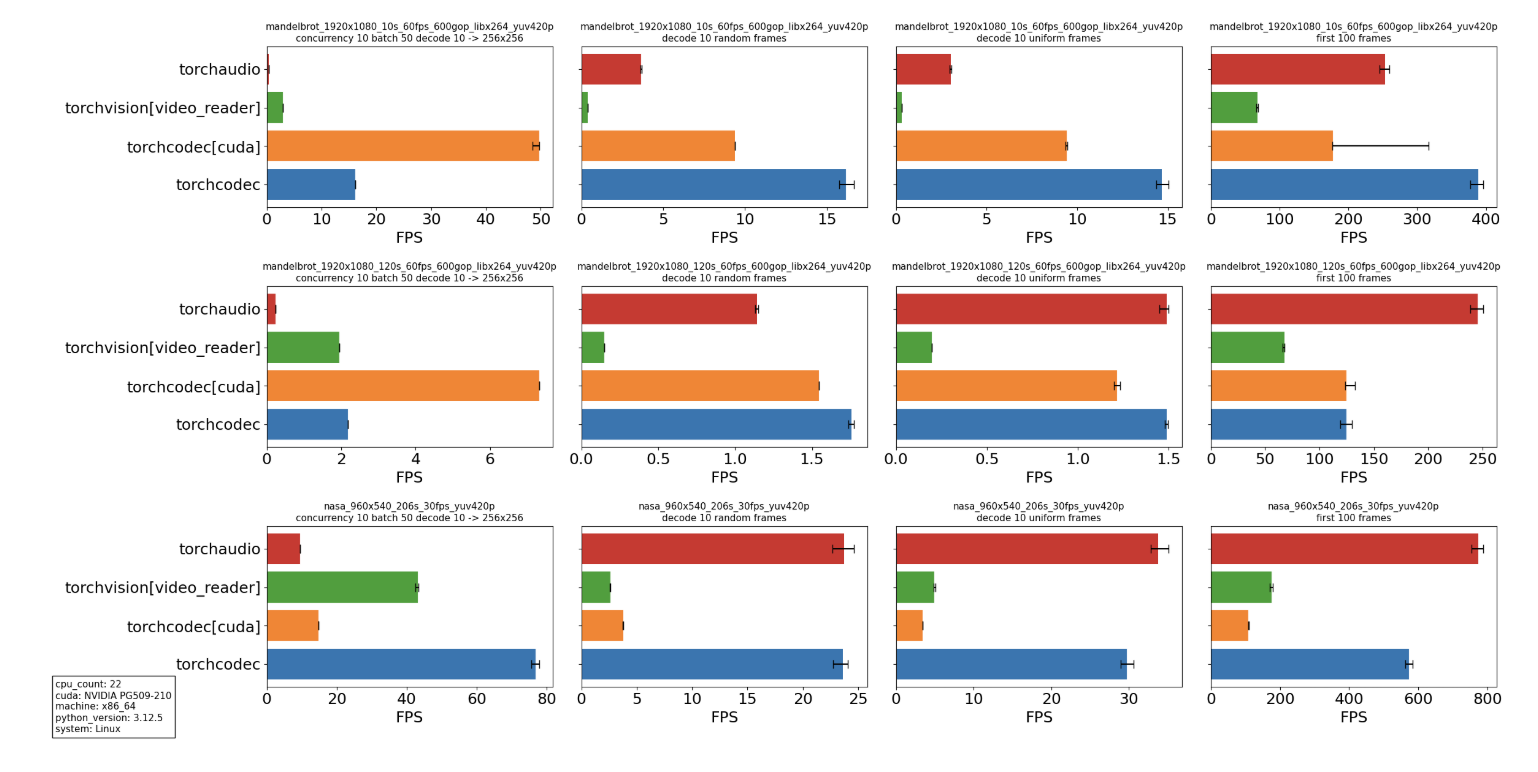

Performance Insights

Benchmarks show that torchcodec significantly outperforms traditional decoding methods. On CPU systems, decoding is up to three times faster, and CUDA setups can be up to five times faster on large datasets. The library also decodes frames accurately, so no important information is lost.

Addressing Sampling Challenges

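torchcodec's sampling methods also address challenges such as sparse temporal sampling, where a few frames must be drawn from across a long video, and variable frame rates, where frames are not evenly spaced in time. Handling these cases well yields richer training datasets, which can improve model performance.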
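
Sparse temporal sampling in the style of Temporal Segment Networks splits a video into equal segments and draws one frame from each. Sampling by timestamp rather than by frame index keeps the samples evenly spread in time even when the frame rate varies. The helper `segment_timestamps` below is a hypothetical plain-Python illustration of the idea; torchcodec ships its own samplers in `torchcodec.samplers` (for example `clips_at_random_timestamps`), which you may prefer in practice. The decoding function assumes the stream starts at t = 0 and uses `get_frames_played_at` as available in recent releases.

```python
import random


def segment_timestamps(duration_seconds, num_segments, rng=None, train=True):
    """One timestamp per equal-length segment of [0, duration_seconds).

    train=True picks a random point inside each segment (augmentation);
    train=False picks each segment's midpoint (deterministic evaluation).
    """
    if duration_seconds <= 0 or num_segments <= 0:
        raise ValueError("duration and num_segments must be positive")
    rng = rng or random.Random()
    seg_len = duration_seconds / num_segments
    stamps = []
    for k in range(num_segments):
        # rng.random() is in [0, 1), so each sample stays inside its segment.
        offset = rng.random() * seg_len if train else seg_len / 2
        stamps.append(k * seg_len + offset)
    return stamps


def decode_sparse_clip(path, num_segments=8, train=True):
    """Decode one frame per segment as an (N, C, H, W) tensor."""
    from torchcodec.decoders import VideoDecoder  # lazy import

    decoder = VideoDecoder(path)
    # Assumes the stream starts at t = 0 and metadata reports the duration.
    seconds = segment_timestamps(decoder.metadata.duration_seconds, num_segments, train=train)
    return decoder.get_frames_played_at(seconds).data
```

Randomizing within segments during training and using midpoints at evaluation is the usual split: the model sees varied frames while test results stay reproducible.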

Conclusion

The launch of torchcodec by PyTorch is a significant step forward in video decoding for machine learning. Its easy-to-use APIs and optimized performance address major challenges in video workflows. Because torchcodec converts video data efficiently into PyTorch tensors, developers can focus more on building models and less on preprocessing.

For researchers and practitioners, torchcodec offers a practical solution for using video data in machine learning. As video applications grow, tools like torchcodec will be crucial for fostering innovation and simplifying workflows.
