What is Promptfoo?

Promptfoo is a command-line interface (CLI) and library that helps improve the evaluation and security of large language model (LLM) applications. It allows users to create effective prompts, configure models, and build retrieval-augmented generation (RAG) systems using specific benchmarks for different use cases.

Key Features:

- Automated Security Testing: Supports red teaming and penetration testing to ensure application security.

- Faster Evaluations: Utilizes caching, concurrency, and live reloading for quicker results.

- Custom Metrics: Offers automated scoring through customizable evaluation metrics.

- Wide Compatibility: Works with various platforms and APIs like OpenAI, Anthropic, and HuggingFace.

- CI/CD Integration: Easily fits into continuous integration and deployment workflows.

Benefits of Using Promptfoo

Promptfoo is designed for developers, providing:

- User-Friendly Experience: Fast processing and features like live reloading and caching.

- Collaboration Tools: Built-in sharing and a web viewer to facilitate teamwork.

- Open-Source and Privacy-Focused: Operates locally to secure user data while interacting directly with LLMs.

How to Get Started

Getting started with Promptfoo is easy:

- Run npx promptfoo@latest init to set up a YAML configuration file.

- Edit the YAML file to write the prompt you want to test, using double curly braces for variables.

- Add model providers and specify the models to test.

- Include example inputs and optional assertions for output requirements.

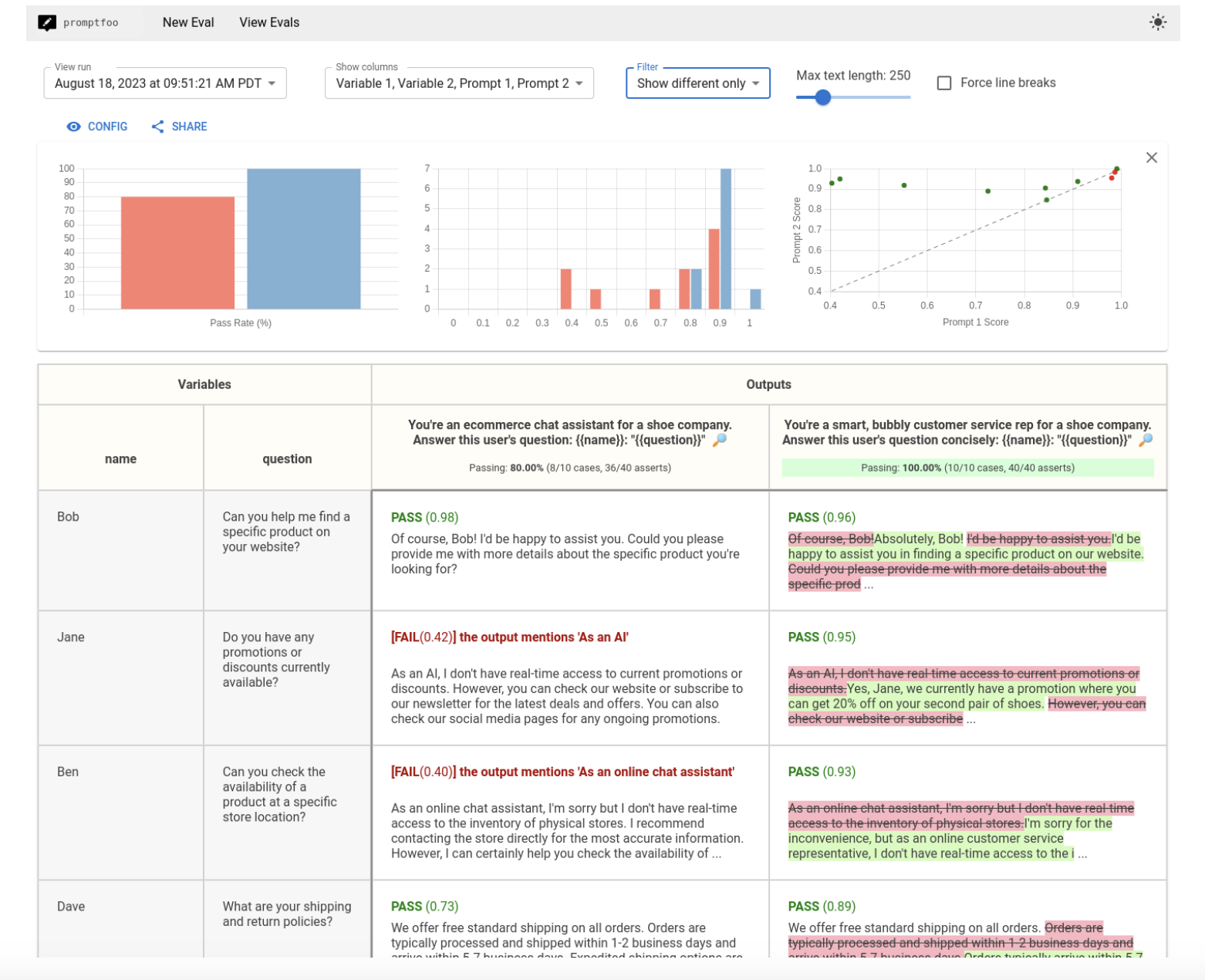

- Run the evaluation to test all prompts and models, then review results in the web viewer.

Enhancing Dataset Quality

Promptfoo improves the quality of LLM evaluations by allowing users to create diverse datasets. Use the promptfoo generate dataset command to:

- Combine existing prompts and test cases for unique evaluations.

- Customize dataset generation to fit different evaluation needs.

Securing RAG Applications

Promptfoo also focuses on securing retrieval-augmented generation (RAG) applications against vulnerabilities:

- Detecting Vulnerabilities: Identifies issues like prompt injection that can lead to unauthorized actions.

- Preventing Data Poisoning: Addresses harmful information that can distort outputs.

- Handling Context Window Overflow: Provides custom policies to maintain response accuracy.

Conclusion

In summary, Promptfoo is a powerful CLI tool for testing, securing, and optimizing LLM applications. It supports developers in creating strong prompts, integrating with various LLM providers, and conducting automated evaluations. With its open-source nature, local execution, and collaborative features, Promptfoo enhances data privacy and improves evaluation accuracy. It also fortifies RAG applications against potential attacks, making it a comprehensive solution for secure LLM deployment.

Connect with Us

For more information, check out our GitHub. Follow us on Twitter, join our Telegram Channel, and connect with us on LinkedIn. If you enjoy our work, subscribe to our newsletter and join our 55k+ ML SubReddit.

Explore AI Solutions

To leverage AI for your business, consider using Promptfoo:

- Identify Automation Opportunities: Find key areas for AI implementation.

- Define KPIs: Ensure measurable impacts from your AI initiatives.

- Select AI Solutions: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start with a pilot project and expand based on data.

For AI KPI management advice, contact us at hello@itinai.com. Stay updated on AI insights via our Telegram or @itinaicom.