Understanding In-Context Learning in Large Language Models

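Large Language Models and In-Context Learning

Large language models (LLMs) can learn new tasks from examples placed in the prompt, without any additional training or weight updates. This ability is called in-context learning (ICL). A key open question is how performance changes as the number of in-context examples grows. The curve that relates the two is known as the ICL curve.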
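The sketch below is one minimal way to measure an ICL curve empirically. It evaluates the same test queries with 0, 1, 2, ... demonstrations in the prompt and records the accuracy at each count. The prompt template, `build_prompt`, `icl_curve`, and the `predict` callable are all hypothetical and were not taken from the study. `predict` stands in for whatever model API you use.

```python
from typing import Callable, Dict, Sequence, Tuple

# (input, expected output) pair; the format is an assumption for illustration
Example = Tuple[str, str]


def build_prompt(shots: Sequence[Example], query: str) -> str:
    """Format demonstration pairs followed by the unanswered query (hypothetical template)."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in shots]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


def icl_curve(
    demos: Sequence[Example],
    test_set: Sequence[Example],
    predict: Callable[[str], str],  # hypothetical stand-in for a model call
    shot_counts: Sequence[int],
) -> Dict[int, float]:
    """Return {n: accuracy}, using the first n demos as in-context examples."""
    curve: Dict[int, float] = {}
    for n in shot_counts:
        correct = sum(
            predict(build_prompt(demos[:n], query)).strip() == answer
            for query, answer in test_set
        )
        curve[n] = correct / len(test_set)
    return curve
```

Plotting the returned accuracies against the shot counts gives the ICL curve for that model and task.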

Why is the ICL Curve Important?

Predicting the ICL curve helps practitioners do three things: choose how many examples to include in a prompt, anticipate how a model will behave when given many examples, and judge whether post-training adjustments are enough to suppress unwanted behaviors. This leads to better-informed deployment decisions and reduces risk.

Research Insights

Several lines of research try to explain how LLMs learn in context. Some argue that LLMs behave like Bayesian learners, inferring the underlying task from the examples. Others argue that ICL implements something like gradient descent. Power laws are often used to model LLM behavior, including how performance scales with the number of examples, but these fits are purely empirical. According to the authors, no prior work had derived the ICL curve directly from assumptions about how the model learns.
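The sketch below illustrates what an empirical power-law model of the ICL curve looks like. It is not necessarily the exact baseline form the study compares against. One common shape is loss(n) ≈ C · n^(−α), which can be fit by ordinary least squares on log(loss) versus log(n). The function name `fit_power_law` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(ns: Sequence[int], losses: Sequence[float]) -> Tuple[float, float]:
    """Fit loss ≈ C * n**(-alpha) by linear regression in log-log space.

    Requires every n >= 1 and every loss > 0, since both are log-transformed.
    """
    if len(ns) != len(losses) or len(ns) < 2:
        raise ValueError("need at least two (n, loss) pairs")
    xs = [math.log(n) for n in ns]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct shot counts")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # log(loss) = log(C) - alpha * log(n), so C = e^intercept and alpha = -slope
    return math.exp(intercept), -slope
```

A fit like this has only a scale and a decay rate. Neither parameter says anything about which tasks the model is choosing between, which is the kind of interpretability the Bayesian laws aim to add.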

Introducing Bayesian Laws for ICL

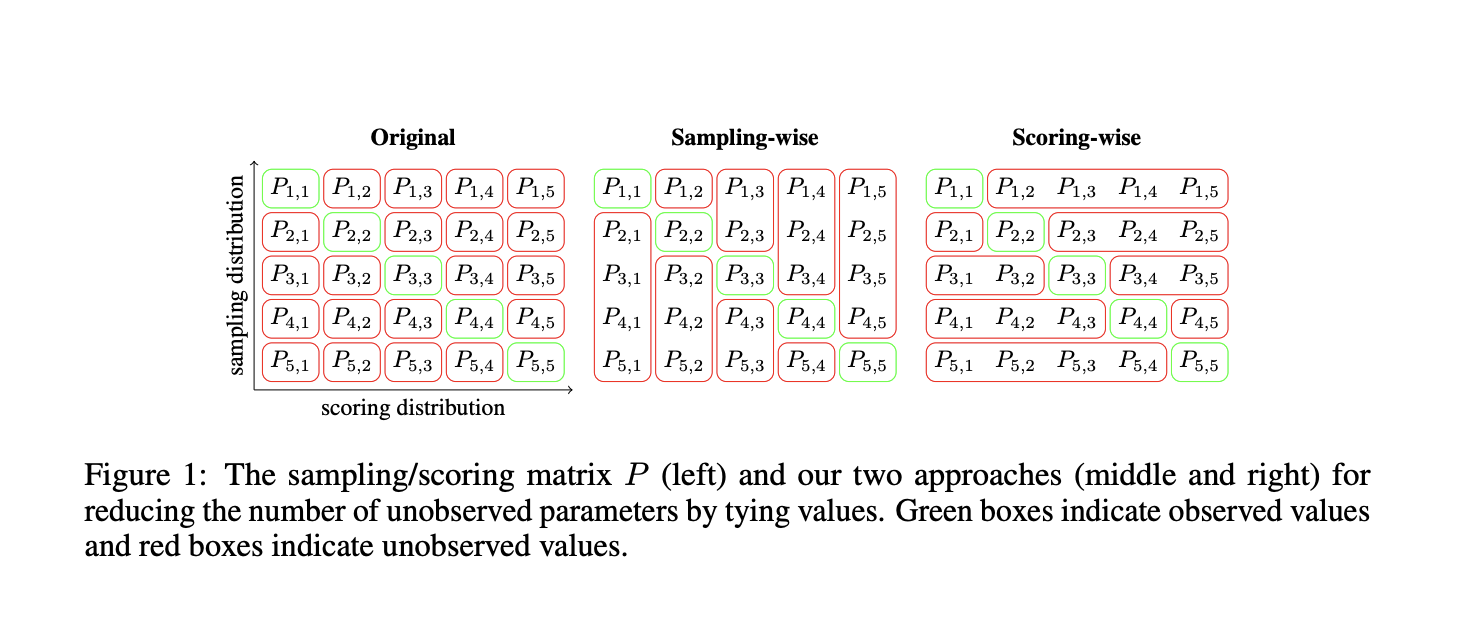

The researchers derive Bayesian scaling laws that predict ICL curves by assuming that the model updates its beliefs about the task like an idealized Bayesian learner. They test the laws on synthetic data and on real-world benchmarks. Beyond predicting curves, the laws have interpretable parameters that correspond to the prior probability of each task and to how efficiently the model learns from each example.
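The minimal sketch below shows an idealized Bayesian in-context learner. It rests on simplifying assumptions of our own:

- There is a finite set of candidate tasks, each with a prior ρ_m.
- Each task assigns a fixed probability q_m to every example drawn from the target task.
- An efficiency factor K scales how much each example updates the posterior.

Under these assumptions, the expected probability of the next example after n examples is Σ ρ_m q_m^(Kn+1) / Σ ρ_m q_m^(Kn). This follows the spirit of the Bayesian laws but is not claimed to be the paper's exact formulation. The function and parameter names are hypothetical.

```python
import math
from typing import Sequence


def bayesian_icl_curve(
    priors: Sequence[float],       # rho_m: prior probability of each candidate task
    likelihoods: Sequence[float],  # q_m: probability task m gives each target-task example
    n: int,                        # number of in-context examples seen so far
    efficiency: float = 1.0,       # K: how strongly each example updates beliefs (assumed)
) -> float:
    """Posterior-predictive probability of the next example after n examples.

    All priors and likelihoods must be strictly positive (they are log-transformed).
    """
    if not priors or len(priors) != len(likelihoods):
        raise ValueError("need one likelihood per task prior")
    # Log of unnormalized posterior weight: log rho_m + K * n * log q_m
    log_w = [math.log(rho) + efficiency * n * math.log(q) for rho, q in zip(priors, likelihoods)]
    # Subtract the max before exponentiating so large n does not underflow to zero
    peak = max(log_w)
    weights = [math.exp(lw - peak) for lw in log_w]
    # Average each task's probability of the next example under the posterior
    return sum(w * q for w, q in zip(weights, likelihoods)) / sum(weights)
```

With priors `[0.9, 0.1]` and likelihoods `[0.2, 0.9]`, the curve starts at 0.27 with no examples and climbs toward 0.9 as the posterior shifts onto the second task. Raising `efficiency` makes the curve rise in fewer examples.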

Experimental Methodology

The research involves two main phases:

1. Comparing the Bayesian laws with existing scaling laws at predicting ICL curves.

2. Analyzing how post-training changes ICL behavior across different tasks.

Key Findings

The Bayesian laws predicted ICL curves at least as accurately as existing scaling laws. Their fitted parameters also revealed how models behave: larger models learned faster from in-context examples, especially when the examples were informative.

Insights on Instruction-Tuning

Comparing base and instruction-tuned Llama 3.1 models showed that instruction-tuning lowers the prior probability of unsafe behaviors but does not prevent many-shot jailbreaking. Given enough in-context examples, the unsafe behavior still emerges. This suggests that instruction-tuning changes the model's priors over tasks without fundamentally altering its knowledge of them.
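A toy Bayesian model shows why lowering a prior delays many-shot jailbreaking but does not prevent it. The two-task setup below and its numbers are our own illustrative assumptions, not the paper's experiment.

The setup has two tasks:

- An "unsafe" task with prior ρ, which gives each jailbreak demonstration probability q_u.
- A "safe" task, which gives each demonstration probability q_s < q_u.

The posterior on the unsafe task exceeds one half once ρ q_u^(Kn) > (1 − ρ) q_s^(Kn), that is, once n > ln((1 − ρ)/ρ) / (K ln(q_u/q_s)). Because n grows only logarithmically in 1/ρ, shrinking the prior buys only a few extra shots. The function name is hypothetical.

```python
import math


def shots_until_unsafe_majority(
    prior_unsafe: float, q_unsafe: float, q_safe: float, efficiency: float = 1.0
) -> int:
    """Smallest n at which a toy Bayesian learner puts > 50% posterior on the unsafe task."""
    if not 0 < prior_unsafe < 1:
        raise ValueError("prior_unsafe must be in (0, 1)")
    if not 0 < q_safe < q_unsafe:
        raise ValueError("demos must be more likely under the unsafe task")
    if efficiency <= 0:
        raise ValueError("efficiency must be positive")
    threshold = math.log((1 - prior_unsafe) / prior_unsafe) / (
        efficiency * math.log(q_unsafe / q_safe)
    )
    # n must strictly exceed the threshold; n = 0 suffices if prior_unsafe > 0.5
    return max(0, math.floor(threshold) + 1)


for prior in (0.1, 0.01, 0.001):
    # With q_unsafe = 0.9 and q_safe = 0.4 this prints 3, 6, and 9 shots
    print(prior, shots_until_unsafe_majority(prior, q_unsafe=0.9, q_safe=0.4))
```

In this toy setting, cutting the unsafe prior by a factor of 100 (from 0.1 to 0.001) only raises the number of shots needed from 3 to 9. This mirrors the observation that instruction-tuning alone does not stop many-shot jailbreaking.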

Contributions of the Research

The study connects two lines of work on in-context learning: theories of how ICL works, and empirical models of the ICL curve. It does so by deriving scaling laws from the assumption that ICL is Bayesian. The resulting parameters for learning efficiency and task priors are interpretable, which makes them useful for understanding ICL capabilities and the effects of fine-tuning.

Explore More

Check out the Paper and GitHub Page for detailed research.
