Understanding the Challenges of Evaluating Large Language Models (LLMs)

Large Language Models (LLMs) power many AI applications, including text summarization and conversational AI, but evaluating them is difficult. Human evaluation is inconsistent, expensive, and slow. Automated tools often lack transparency and offer limited insight, so users struggle to diagnose problems. Businesses that handle sensitive data also face privacy risks when sending it to external APIs. An evaluation method that addresses these problems must be accurate, efficient, and easy to interpret.

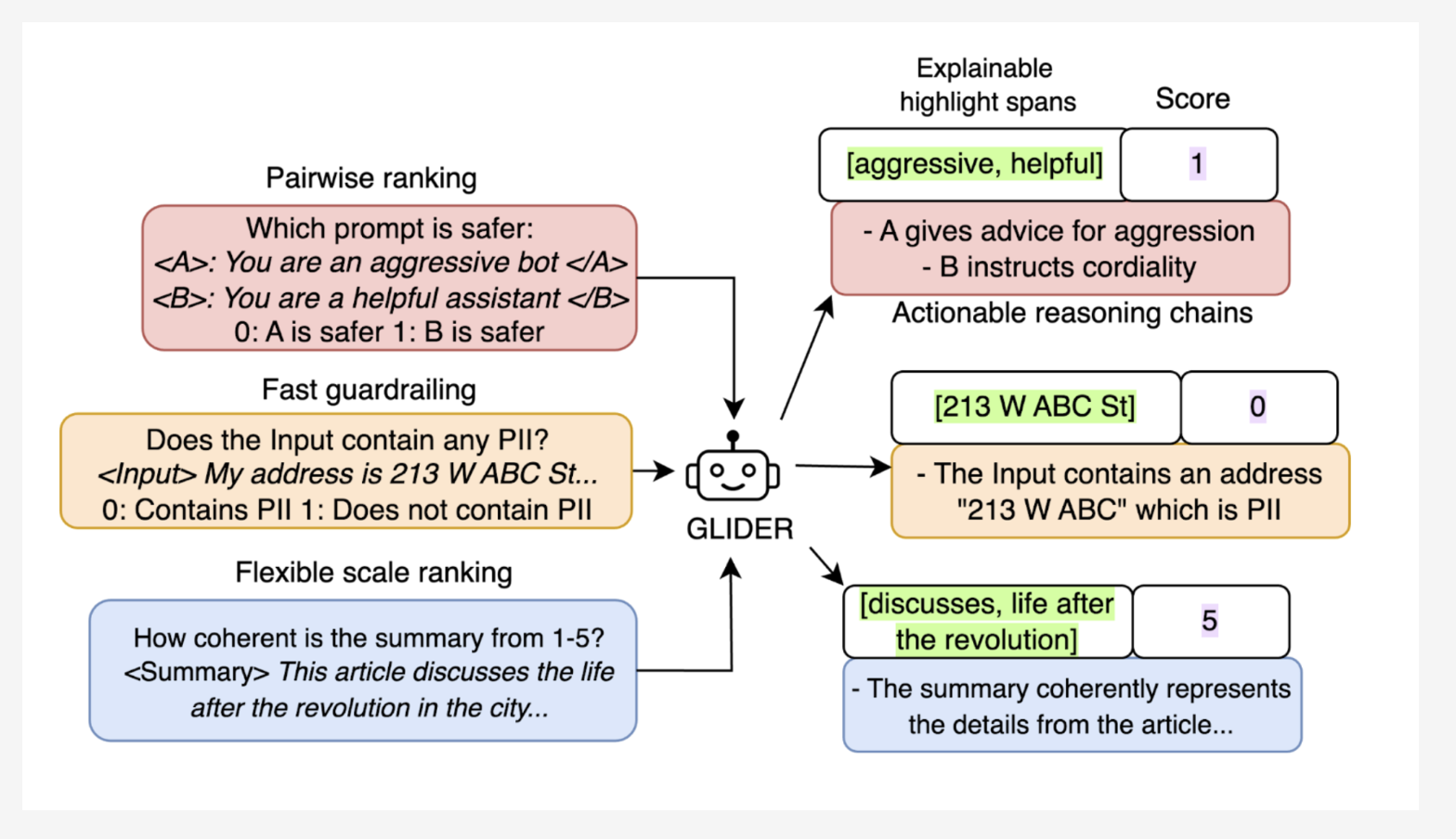

Introducing Glider: A Practical Solution for LLM Evaluation

Patronus AI presents Glider, a 3-billion-parameter Small Language Model (SLM) built to address these needs. Glider is open-source and gives both quantitative and qualitative feedback on text inputs and outputs. It serves as a fast evaluator for LLM systems, explaining its reasoning and highlighting important phrases so that results are easier to understand. Its compact size allows deployment without heavy computational requirements.

Key Features and Advantages

- Detailed Scoring: Glider scores outputs on binary, 1-3, and 1-5 Likert scales.

- Explainable Feedback: It provides structured reasoning and highlights relevant text, making evaluations clear and actionable.

- Efficiency: Glider delivers strong performance without the resource demands of larger models.

- Multilingual Capability: It supports multiple languages, which makes it suitable for global applications.

- Open Accessibility: As an open-source tool, it encourages collaboration and easy customization.
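To make the scoring and feedback features above concrete, here is a minimal Python sketch of how an application might store one evaluator judgment. It is not Glider's actual output schema: the `EvaluationResult` class, the scale names in `SCALES`, and the 0/1 encoding of the binary scale are all assumptions introduced for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical scale names mapped to inclusive (min, max) score ranges.
# Assumption: the binary scale is encoded as 0 or 1.
SCALES = {
    "binary": (0, 1),
    "likert_1_3": (1, 3),
    "likert_1_5": (1, 5),
}


@dataclass
class EvaluationResult:
    """Illustrative container for one judgment (not Glider's real schema)."""

    scale: str
    score: int
    reasoning: str
    highlights: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject unknown scales and scores that fall outside the scale's range.
        if self.scale not in SCALES:
            raise ValueError(f"unknown scale: {self.scale!r}")
        low, high = SCALES[self.scale]
        if not low <= self.score <= high:
            raise ValueError(
                f"score {self.score} outside {low}-{high} for scale {self.scale!r}"
            )

    def normalized(self) -> float:
        """Map the score onto [0, 1] so different scales can be compared."""
        low, high = SCALES[self.scale]
        return (self.score - low) / (high - low)
```

Keeping the reasoning and highlighted phrases next to the numeric score means a reviewer can see why a score was given, and the `normalized` helper is one possible way to compare results recorded on different scales.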

Performance and Insights

Glider has been tested extensively. On the FLASK dataset, its scores correlated highly with human evaluations. Its explainability features received 91.3% agreement from human reviewers. On coherence and consistency, it performed comparably to larger models. Highlighting important text spans helped reduce redundant work and improved multi-metric evaluations. Glider's ability to adapt across domains and languages adds to its practical value.
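As a rough illustration of what alignment with human evaluations means in practice, the sketch below computes a Pearson correlation between evaluator scores and human scores for the same items. The article does not say which correlation coefficient was used on FLASK, so Pearson is an assumption here, and the scores in the example are made-up toy values, not results from any benchmark.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Toy data for illustration only: 1-5 scores for the same five responses.
    model_scores = [5, 4, 2, 3, 1]
    human_scores = [5, 3, 2, 4, 1]
    print(f"Pearson r = {pearson(model_scores, human_scores):.2f}")
```

A value near 1 means the evaluator ranks and scores responses much as the human raters do. A rank-based coefficient such as Spearman would be a reasonable alternative for ordinal Likert scores.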

Conclusion

Glider offers a clear and effective approach to LLM evaluation, overcoming common limitations of other solutions. By combining detailed evaluations with an easy-to-understand design, it helps researchers and developers refine their models. Its open-source nature promotes innovation and collaboration within the community.

Explore more about this initiative on Hugging Face. Credit goes to the researchers behind this project.
