Overcoming Gradient Inversion Challenges in Federated Learning: The DAGER Algorithm for Exact Text Reconstruction

Practical Solutions and Value

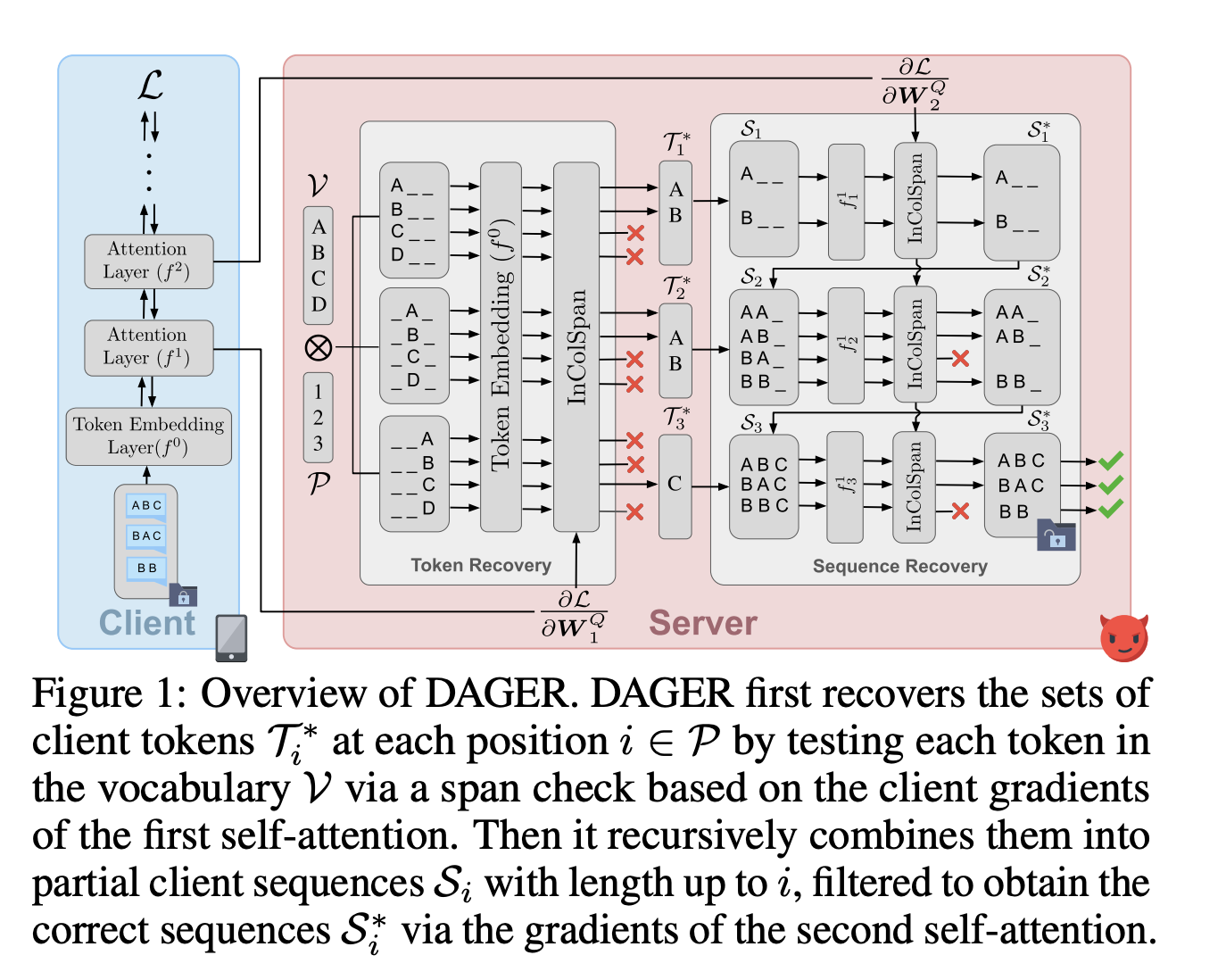

Federated learning lets multiple parties train a shared model without exchanging their private data. However, gradient inversion attacks can recover that data from the shared gradients and so compromise privacy. DAGER was developed by researchers from INSAIT, Sofia University, ETH Zurich, and LogicStar.ai. It recovers entire batches of input text exactly, and it outperforms previous attacks in speed, scalability, and reconstruction quality. It handles large batch sizes and long sequences for both encoder and decoder transformers, which shows that exact reconstruction is achievable against transformer-based language models.

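DAGER exploits the rank deficiency of the weight gradients of self-attention layers. For a linear layer, the weight gradient is a sum of products of the layer's inputs with their upstream gradients. When a batch contains fewer tokens than the model's hidden dimension, the gradient is therefore rank-deficient, and its column space lies within the span of the actual inputs. DAGER checks candidate tokens against this span to identify which tokens were in the batch. It then reconstructs full input sequences by progressively extending partial sequences with tokens that pass the check. In experimental evaluation, it outperforms previous methods, achieves near-perfect sequence reconstructions, and remains effective across diverse settings.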
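The span check described above can be illustrated with a minimal NumPy sketch. It is not DAGER's implementation, and it rests on several simplifying assumptions. It uses a toy random embedding table and random upstream gradients. It assumes a known sequence length. It also replaces the real first transformer layer with a hypothetical order-sensitive `hidden` function. All names (`hidden`, `weight_grad`, `span_basis`, `in_span`, `recover`) are illustrative. The sketch relies on one fact: for a linear layer Y = XW, the weight gradient is X^T(dL/dY). Its column space therefore lies inside the span of the layer's inputs.

```python
import numpy as np

# Toy sizes (hypothetical): vocabulary, hidden size, linear-layer output size.
V, D, M = 200, 64, 32
rng = np.random.default_rng(0)
E = rng.standard_normal((V, D))               # hypothetical token embedding table
A = rng.standard_normal((D, D)) / np.sqrt(D)  # hypothetical causal mixing weights


def hidden(prefix):
    """Stand-in for a first transformer layer (NOT real self-attention):
    an order-sensitive summary that depends only on the prefix, like causal attention."""
    h = np.tanh(E[prefix[0]])
    for tok in prefix[1:]:
        h = np.tanh(A @ h + E[tok])
    return h


def weight_grad(inputs):
    """Gradient of a linear layer Y = X W w.r.t. W, i.e. X^T @ dL/dY.
    The upstream gradient dL/dY is random here (an assumption)."""
    upstream = rng.standard_normal((inputs.shape[0], M))
    return inputs.T @ upstream


def span_basis(grad, rel_tol=1e-10):
    """Orthonormal basis of the gradient's column space, via SVD."""
    U, S, _ = np.linalg.svd(grad, full_matrices=False)
    rank = int(np.sum(S > S[0] * rel_tol))
    return U[:, :rank]


def in_span(vec, basis, tol=1e-6):
    """True if vec lies (numerically) in the subspace spanned by basis."""
    residual = vec - basis @ (basis.T @ vec)
    return np.linalg.norm(residual) / np.linalg.norm(vec) < tol


def recover(grad1, grad2, seq_len):
    # Step 1: keep vocabulary tokens whose embeddings lie in layer 1's gradient span.
    basis1 = span_basis(grad1)
    tokens = [v for v in range(V) if in_span(E[v], basis1)]
    # Step 2: grow prefixes one token at a time, keeping only extensions whose
    # layer-2 input lies in layer 2's gradient span.
    basis2 = span_basis(grad2)
    partial = [[v] for v in tokens if in_span(hidden([v]), basis2)]
    for _ in range(seq_len - 1):
        partial = [p + [v] for p in partial for v in tokens
                   if in_span(hidden(p + [v]), basis2)]
    return tokens, partial


# The "client" computes gradients on a private batch of two sequences.
seqs = [[3, 17, 42, 8], [99, 5, 17, 150]]
X1 = np.stack([E[t] for s in seqs for t in s])  # layer-1 inputs (raw embeddings)
X2 = np.stack([hidden(s[: i + 1]) for s in seqs for i in range(len(s))])  # layer-2 inputs
tokens, recovered = recover(weight_grad(X1), weight_grad(X2), seq_len=4)
print(tokens)
print(recovered)
```

With 8 tokens and a hidden size of 64, both gradients are rank-deficient. Embeddings and prefixes that were not in the batch leave a large residual when projected onto the gradient span, so they are discarded. A realistic attack would also need to handle positional embeddings, normalization layers, unknown sequence lengths, and non-causal (encoder) attention, and this sketch handles none of them.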
