Understanding the Need for Robust AI Solutions

Challenges Faced by Large Language Models (LLMs)

As LLMs are increasingly used in real-world applications, concerns about their weaknesses have also grown. These models can be targeted by various attacks, such as:

- Creating harmful content

- Exposing private information

- Manipulative prompt injections

These vulnerabilities raise ethical issues like bias, misinformation, and privacy violations. Thus, we must develop effective strategies to tackle these problems.

The Role of Red Teaming

Red teaming is a method used to test AI systems by simulating attacks to expose vulnerabilities. Past automated red teaming methods faced difficulties in balancing the variety and effectiveness of the attacks. This limitation affected the models’ robustness.

Innovative Solutions by OpenAI Researchers

A New Approach to Red Teaming

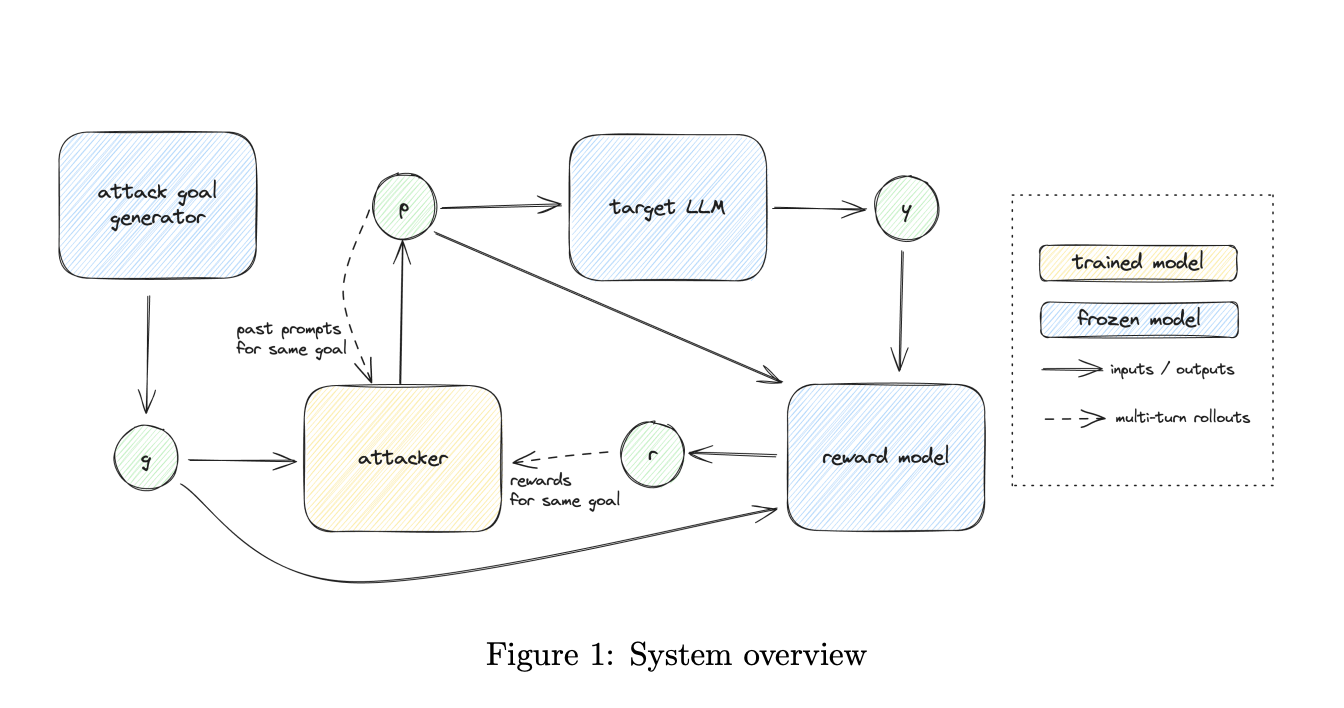

OpenAI researchers have introduced a better automated red teaming method that combines:

- Diversity in attack types

- Effectiveness in achieving attacker goals

This is done by breaking the red teaming process into two clear steps:

- Generating diverse attacker goals.

- Training a reinforcement learning (RL) attacker to achieve these goals effectively.

Key Features of the New Method

The researchers use:

- Multi-step Reinforcement Learning (RL) to refine attacks.

- Automated reward generation to encourage diversity and effectiveness.

This method helps identify model weaknesses while ensuring that generated attacks reflect real-world scenarios.

Benefits of the Proposed Method

Enhanced Attack Diversity and Effectiveness

This innovative approach has shown significant advancements in two critical application areas:

- Prompt injection attacks

- “Jailbreaking” attacks that provoke unsafe responses

In these cases, the new RL-based attacker produced a high success rate of attacks (up to 50%) while demonstrating greater diversity than earlier methods.

Future Directions

The proposed red teaming strategy highlights the importance of enhancing both attack diversity and effectiveness. While promising, further research is needed to refine reward systems and improve training stability for even better outcomes.

Join the Conversation and Explore AI Solutions

For more insights, check out the research paper and follow us on social media:

- Telegram Channel

- LinkedIn Group

If you’re interested in evolving your business with AI, consider:

- Identifying automation opportunities

- Defining clear KPIs for AI initiatives

- Selecting suitable AI solutions

- Implementing changes gradually

For personalized AI KPI management advice, contact us at hello@itinai.com.

Discover How AI Can Transform Your Business

Explore innovative solutions and redefine your sales processes at itinai.com.