Understanding Open-RAG: A New AI Framework

Challenges with Current Models

Large language models (LLMs) have improved many natural language processing (NLP) tasks. However, they often struggle with factual accuracy, especially when a question requires complex reasoning. Existing retrieval-augmented generation (RAG) methods, particularly those built on open-source models, handle intricate reasoning poorly: their outputs are often unclear, and they have trouble identifying which retrieved information is actually relevant.

Introducing Open-RAG

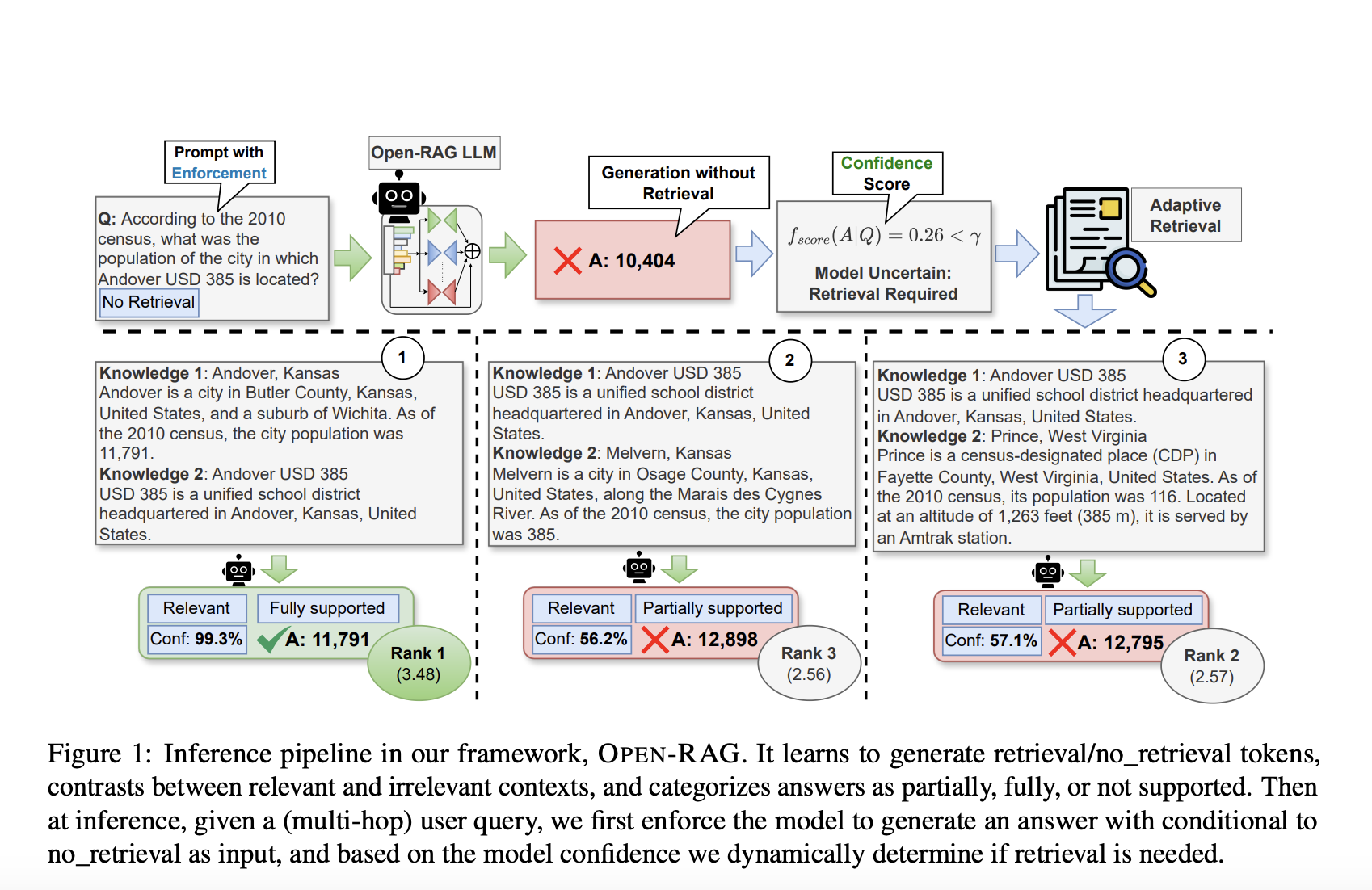

Researchers from several institutions have developed Open-RAG, a new framework that improves the reasoning ability of retrieval-augmented generation with open-source LLMs. Open-RAG converts a dense LLM into a more efficient sparse mixture-of-experts (MoE) model. This lets it handle complex reasoning tasks, including both single-hop and multi-hop queries. By selecting only the relevant experts for each input, the model copes better with misleading information.
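To make the idea of "choosing relevant experts" concrete, here is a minimal sketch of top-k routing in a sparse MoE layer. It is not Open-RAG's implementation: the toy experts, the router scores, and the function names (`route_top_k`, `moe_forward`) are all hypothetical, and real experts would be neural network blocks rather than scalar functions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalise their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return [(i, probs[i] / mass) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Combine the outputs of the selected experts only; the others never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

selected = route_top_k(logits, k=2)
output = moe_forward(3.0, experts, logits, k=2)
print(selected, output)
```

Only two of the four experts are evaluated here, which is the source of the efficiency gain: the model can hold many experts while paying the compute cost of a few per input.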

How Open-RAG Works

Open-RAG combines several techniques:

– **Constructive Learning**: It trains the model to differentiate useful information from distractions.

– **Architectural Transformation**: It modifies a dense LLM into a more efficient MoE model.

– **Reflection-Based Generation**: It uses reflection tokens to control the retrieval process and evaluate the relevance of information.

On top of these techniques, a hybrid adaptive retrieval method decides when retrieval is actually needed, which improves both efficiency and accuracy.
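The "deciding when to retrieve" step can be sketched as a simple confidence check. This is an illustrative assumption, not the method from the paper: the model first drafts an answer without retrieval, and the retriever is called only if the draft's least confident token falls below a threshold. The names `should_retrieve`, `answer`, `fake_generate`, and `fake_retrieve`, the 0.5 threshold, and the probabilities are all hypothetical.

```python
def should_retrieve(token_probs, threshold=0.5):
    """Return True when a no-retrieval draft looks too uncertain.

    token_probs holds the probability the model gave each token of the draft.
    Confidence is taken as the lowest token probability (an assumed scoring
    rule chosen for this sketch).
    """
    if not token_probs:
        return True  # nothing was generated, so fall back to retrieval
    return min(token_probs) < threshold


def answer(question, generate, retrieve, threshold=0.5):
    """Answer directly when confident, otherwise retrieve and regenerate."""
    draft, probs = generate(question, passages=None)
    if not should_retrieve(probs, threshold):
        return draft, False
    passages = retrieve(question)
    final, _ = generate(question, passages=passages)
    return final, True


# Stand-ins for a real model and retriever, for illustration only.
def fake_generate(question, passages=None):
    if passages:
        return f"answer grounded in {len(passages)} passages", [0.90, 0.95]
    if "capital" in question:
        return "Paris", [0.97, 0.92]
    return "not sure", [0.80, 0.20]


def fake_retrieve(question):
    return ["passage A", "passage B"]


easy = answer("What is the capital of France?", fake_generate, fake_retrieve)
hard = answer("Which studio made the sequel's soundtrack?", fake_generate, fake_retrieve)
print(easy, hard)
```

Raising the threshold makes the system retrieve more often (slower, usually better grounded); lowering it favors speed.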

Performance Highlights

Open-RAG, built on Llama2-7B, outperforms various leading RAG models, including ChatGPT-RAG and Self-RAG. It shows better reasoning and factual accuracy on knowledge-intensive tasks. For instance, it performed especially well on the HotpotQA and MuSiQue datasets, both of which consist of complex multi-hop questions. Because only selected experts are activated for each input, the computational load stays manageable while response quality improves.

Conclusion

Open-RAG is a notable step forward for the accuracy and reasoning of RAG models built on open-source LLMs. By combining a parameter-efficient MoE structure with adaptive retrieval, Open-RAG performs strongly on complex reasoning tasks while remaining competitive with top proprietary models. This research shows that open-source LLMs can achieve high accuracy and efficiency, and it leaves room for future improvements.
