OLMoTrace: Enhancing Transparency in Language Models

Introduction to OLMoTrace

The Allen Institute for AI (Ai2) has launched OLMoTrace, a tool that lets businesses trace the outputs of large language models (LLMs) back to their training data in real time. As LLMs become integral to applications such as enterprise decision-making and educational tools, understanding where their responses come from is crucial for evaluating their trustworthiness and identifying biases. OLMoTrace addresses the opacity of LLMs by showing which training documents contain text that matches a model’s response.

The Importance of Transparency in LLMs

With LLMs trained on vast datasets, the ability to trace outputs back to their sources is fundamental for:

- Trustworthiness: Ensuring that the information provided is accurate and reliable.

- Compliance: Meeting legal standards regarding data usage and copyright.

- Bias Identification: Investigating and mitigating potential biases within LLM outputs.

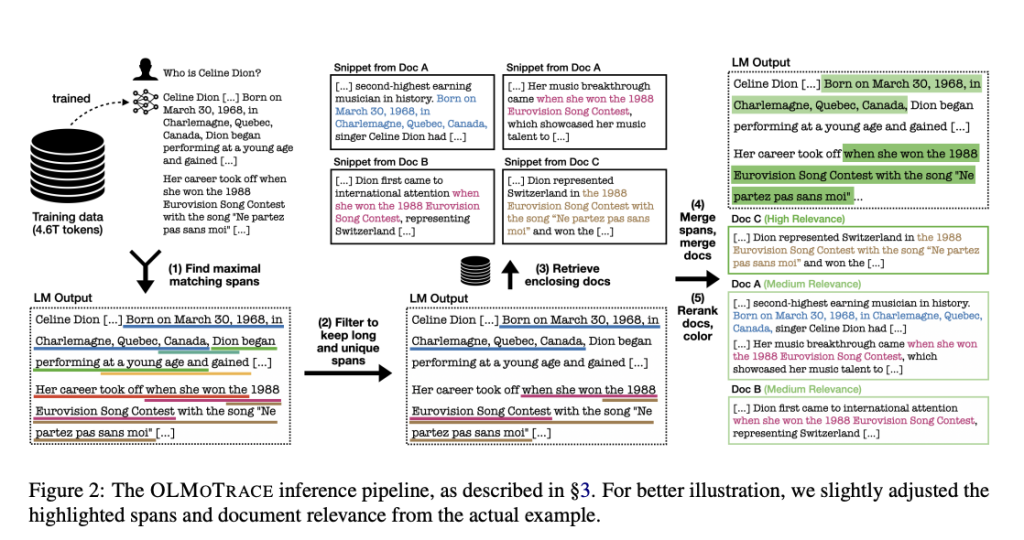

How OLMoTrace Works

System Overview

OLMoTrace uses an indexing and search engine called infini-gram to connect generated text back to the training data. Tracing takes about 4.5 seconds on average for an output of about 450 tokens.
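To give a feel for how an index can connect generated text to a corpus, here is a toy sketch of a suffix-array lookup, the kind of data structure infini-gram's authors describe it as being built on. This is not infini-gram's API: the function names `build_suffix_array` and `find_occurrences` are hypothetical, and the real engine works over tokenized corpora far too large to sort in memory like this.

```python
from typing import List


def build_suffix_array(tokens: List[str]) -> List[int]:
    """Return the start positions of all suffixes, sorted lexicographically."""
    return sorted(range(len(tokens)), key=lambda i: tokens[i:])


def find_occurrences(tokens: List[str], suffix_array: List[int],
                     query: List[str]) -> List[int]:
    """Binary-search the suffix array for every position where `query` occurs."""
    n = len(query)

    def prefix(rank: int) -> List[str]:
        # First n tokens of the suffix stored at this rank.
        start = suffix_array[rank]
        return tokens[start:start + n]

    # Lower bound: first suffix whose prefix is >= query.
    lo, hi = 0, len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if prefix(mid) < query:
            lo = mid + 1
        else:
            hi = mid
    first = lo

    # Upper bound: first suffix whose prefix is > query.
    hi = len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if prefix(mid) <= query:
            lo = mid + 1
        else:
            hi = mid

    return sorted(suffix_array[first:lo])


corpus = "the cat sat on the mat the cat ran".split()
index = build_suffix_array(corpus)
positions = find_occurrences(corpus, index, ["the", "cat"])
```

Because all suffixes that start with the query sit next to each other in sorted order, two binary searches are enough to find every occurrence without scanning the corpus.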

Key Features

- Real-Time Tracing: Users can select a specific part of an LLM’s output and see the training documents that contain it.

- Document Matching: The system identifies verbatim overlaps between generated text and training data, enabling users to verify facts and understand context.

- Detailed Insights: By examining matched documents, users can trace even unusual expressions back to the documents where they appear, which shows where the model may have learned a phrase.
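The document-matching idea can be illustrated with a brute-force sketch that finds the longest spans of a model output appearing verbatim in a small set of training documents. Everything here is an assumption made for illustration: the names `contains` and `verbatim_spans`, the `min_len` threshold, and the whitespace tokenization. OLMoTrace itself relies on an index rather than a linear scan.

```python
from typing import Dict, List, Tuple


def contains(doc: List[str], span: List[str]) -> bool:
    """True if `span` occurs as a contiguous token sequence in `doc`."""
    n = len(span)
    return any(doc[i:i + n] == span for i in range(len(doc) - n + 1))


def verbatim_spans(output: List[str], docs: Dict[str, List[str]],
                   min_len: int = 3) -> List[Tuple[int, int, List[str]]]:
    """Return (start, end, doc_ids) for maximal output spans found in docs.

    Spans are half-open token ranges [start, end) over `output`.
    """
    spans = []
    furthest_end = 0
    for start in range(len(output)):
        end = start
        matched: List[str] = []
        # Grow the span one token at a time while some document still contains it.
        while end < len(output):
            hits = [doc_id for doc_id, toks in docs.items()
                    if contains(toks, output[start:end + 1])]
            if not hits:
                break
            matched = hits
            end += 1
        # Keep the span only if it is long enough and not nested in an earlier one.
        if end - start >= min_len and end > furthest_end:
            spans.append((start, end, matched))
        furthest_end = max(furthest_end, end)
    return spans


output = "the capital of france is paris and it is lovely".split()
docs = {
    "doc_a": "paris is known as the capital of france".split(),
    "doc_b": "france is paris and more".split(),
}
spans = verbatim_spans(output, docs)
for start, end, doc_ids in spans:
    print(" ".join(output[start:end]), "->", doc_ids)
```

Each reported span is exact token-for-token overlap, so a paraphrase of a training document would not be matched by this approach.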

Technical Architecture

The architecture comprises five essential steps:

- Span Identification: Finds the segments of the model output that appear verbatim in the training data.

- Span Filtering: Ranks spans by relevance to ensure the most informative matches are highlighted.

- Document Retrieval: Retrieves relevant training documents for each span.

- Merging: Consolidates overlapping spans to reduce clutter.

- Relevance Ranking: Scores documents based on similarity to the original prompt.
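The last two steps above can be sketched in a few lines. This is a minimal illustration, not OLMoTrace's implementation: the function names `merge_spans` and `rank_documents` are hypothetical, and the Jaccard token overlap used for scoring is a simple stand-in chosen for this example, since the text above does not specify the similarity measure.

```python
from typing import Dict, List, Tuple


def merge_spans(spans: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Merge overlapping or touching half-open spans [start, end)."""
    merged: List[Tuple[int, int]] = []
    for start, end in sorted(spans):
        if merged and start <= merged[-1][1]:
            # Extend the previous span instead of emitting a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def rank_documents(prompt: str, docs: Dict[str, str]) -> List[Tuple[str, float]]:
    """Rank documents by Jaccard token overlap with the prompt (stand-in score)."""
    prompt_tokens = set(prompt.lower().split())

    def jaccard(text: str) -> float:
        doc_tokens = set(text.lower().split())
        union = prompt_tokens | doc_tokens
        return len(prompt_tokens & doc_tokens) / len(union) if union else 0.0

    scored = [(doc_id, jaccard(text)) for doc_id, text in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


merged = merge_spans([(3, 7), (0, 4), (10, 12)])
ranked = rank_documents(
    "capital of france",
    {
        "doc_a": "paris is the capital of france",
        "doc_b": "bananas are yellow",
    },
)
```

Merging keeps the highlighted output readable when several matches cover the same tokens, and ranking puts the documents most related to the prompt at the top of the list.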

Use Cases and Practical Applications

OLMoTrace presents several practical applications for businesses:

- Fact Verification: Determine the origins of factual statements to ensure accuracy.

- Creative Analysis: Trace distinctive phrasing back to source material to check whether seemingly original text also appears in the training data.

- Mathematical Reasoning: Trace the steps of a worked solution back to matching text in the training data, which can inform educational tools and resources.

Implications for the Industry

OLMoTrace underscores the importance of transparency in the development and deployment of open-source LLMs. The system currently reports lexical matches rather than causal insights: a match shows that a piece of text appears in the training data, not that a particular document caused the output. Even so, it supports compliance, copyright auditing, and quality assurance in AI applications. Because OLMoTrace is open source, it can be studied further and integrated into LLM evaluation processes.

Conclusion

OLMoTrace represents a significant step forward in the transparency and accountability of language models. By enabling businesses to trace model outputs back to their training sources, it helps organizations build trust with their stakeholders, support compliance, and identify biases. As AI continues to evolve, tools like OLMoTrace will play a critical role in fostering responsible AI practices.