Practical Solutions and Value of OLMoE-1B-7B and OLMoE-1B-7B-INSTRUCT

Introduction

Large-scale language models have transformed natural language processing, delivering strong results in tasks such as text generation and translation. However, their high computational costs put them out of reach for many teams and organizations.

High Computational Cost Challenge

State-of-the-art language models such as GPT-4 require massive compute resources, which limits access for smaller teams. Dense architectures, in which every parameter is used for every input token, are a major source of this cost.

OLMoE: Sparse Approach

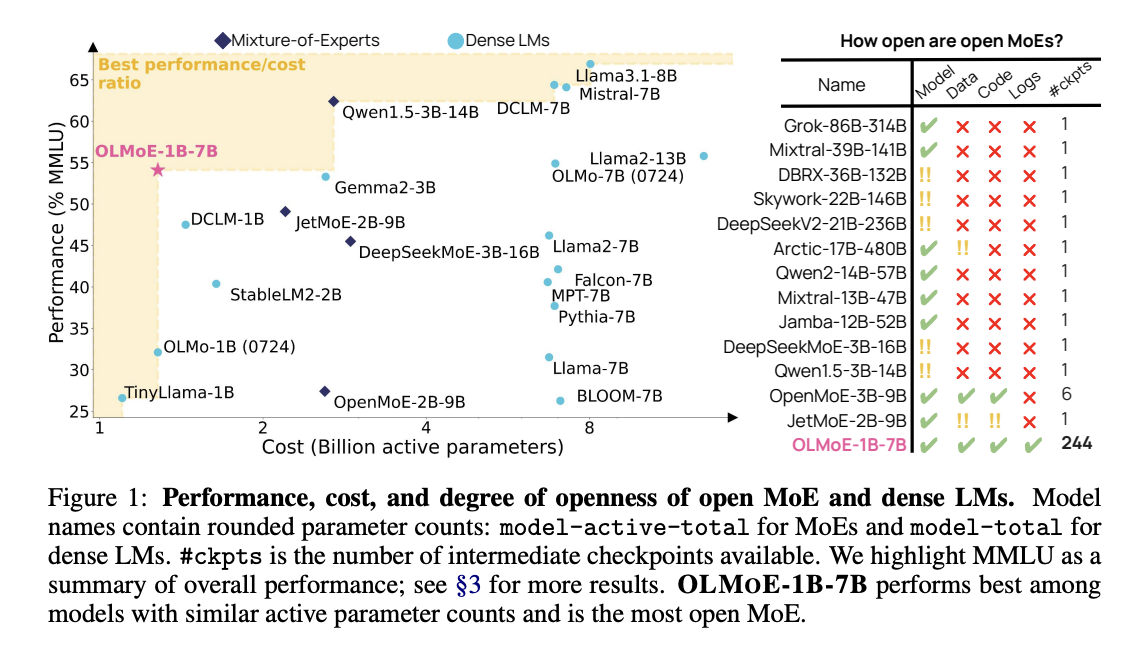

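OLMoE uses a sparse mixture-of-experts (MoE) architecture: for each input token, a router activates only a small subset of the model's parameters, which substantially reduces the compute needed per token. As the names suggest, the models have about 7 billion parameters in total but use only about 1 billion of them for any given token. The release includes two versions: OLMoE-1B-7B, the base model, and OLMoE-1B-7B-INSTRUCT, an instruction-tuned variant. Both aim to combine this efficiency with strong performance.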
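The routing idea can be illustrated with a toy top-k mixture-of-experts layer in plain Python. This is a minimal sketch, not OLMoE's implementation: the class name `SparseMoELayer`, the dimensions, the single-matrix experts, and the choice to renormalize the selected gate weights are all illustrative assumptions. The setting of 64 experts with 8 active per token is likewise assumed here to mimic a fine-grained design.

```python
import math
import random


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def matvec(matrix, vec):
    # Plain matrix-vector product: one dot product per row.
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class SparseMoELayer:
    """Hypothetical toy top-k MoE layer (illustration only, not OLMoE code)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one score per expert, computed from the token vector.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Assumption: each expert is a single dim x dim linear map.
        self.experts = [
            [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        self.expert_calls = 0  # counts how many expert matrices were actually used

    def forward(self, token):
        probs = softmax(matvec(self.router, token))
        # Keep only the top_k highest-probability experts for this token.
        chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[: self.top_k]
        norm = sum(probs[i] for i in chosen)
        out = [0.0] * len(token)
        for i in chosen:
            self.expert_calls += 1
            y = matvec(self.experts[i], token)
            weight = probs[i] / norm  # assumption: gates renormalized over chosen experts
            out = [o + weight * v for o, v in zip(out, y)]
        return out, chosen


rng = random.Random(1)
token = [rng.gauss(0, 1) for _ in range(16)]
layer = SparseMoELayer(dim=16, num_experts=64, top_k=8)
out, chosen = layer.forward(token)
print(f"experts evaluated: {layer.expert_calls} of {len(layer.experts)}")  # experts evaluated: 8 of 64
```

Only 8 of the 64 expert matrices are multiplied for this token; the other experts' parameters are stored but unused for that input, which is where the per-token savings of a sparse model come from.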

Efficiency and Performance

OLMoE’s fine-grained routing across many small experts lets it handle tasks efficiently; the authors report that it outperforms some larger models while using far fewer active parameters per token. Because OLMoE is open source, researchers and developers can inspect, reproduce, and build on it, which encourages innovation and experimentation in the field.
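The trade-off behind fine-grained experts can be checked with simple arithmetic. The sketch below compares two hypothetical configurations with identical total and active parameter budgets: 64 small experts with 8 active per token, versus 8 experts that are each 8 times larger with 1 active. All parameter counts are invented for illustration and are not OLMoE's actual breakdown. One commonly cited motivation for fine-grained experts is that, at equal cost, they give the router far more possible expert combinations per token.

```python
import math


def moe_param_counts(shared, per_expert, num_experts, top_k):
    """Return (total, active) parameter counts for a simple MoE model.

    shared: parameters every token uses (embeddings, attention, router, ...)
    per_expert: parameters in one expert, summed over all MoE layers
    """
    total = shared + num_experts * per_expert
    active = shared + top_k * per_expert
    return total, active


# Hypothetical numbers chosen only to make the comparison easy to read.
fine = moe_param_counts(shared=400_000_000, per_expert=100_000_000, num_experts=64, top_k=8)
coarse = moe_param_counts(shared=400_000_000, per_expert=800_000_000, num_experts=8, top_k=1)

total, active = fine
print(fine == coarse)              # True: same total and active budgets
print(f"{active / total:.1%}")     # 17.6% of parameters active per token
print(math.comb(64, 8))            # 4426165368 possible expert sets (fine-grained)
print(math.comb(8, 1))             # 8 possible expert sets (coarse)
```

Both setups cost the same per token, but the fine-grained one lets each token draw on billions of distinct expert combinations instead of eight, giving the router much more room to specialize.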

AI Integration

Companies looking to integrate AI can use OLMoE-1B-7B and OLMoE-1B-7B-INSTRUCT to rethink work processes and customer engagement. A practical adoption path is to identify automation opportunities, define KPIs, select suitable AI solutions, and roll them out gradually.

For more AI KPI management advice, contact us at hello@itinai.com, and stay updated on leveraging AI through our Telegram or Twitter.

Discover how AI can redefine your sales processes and customer engagement at itinai.com.