Nvidia’s Eos AI supercomputer, equipped with 10,752 NVIDIA H100 Tensor Core GPUs, achieved new MLPerf AI training benchmark records. It successfully trained a GPT-3 model with 175 billion parameters on one billion tokens in just 3.9 minutes, compared to nearly 11 minutes previously. The improved processing power and efficiency indicate significant advancements in AI technology.

**Nvidia Sets New AI Training Records in MLPerf Benchmarks**

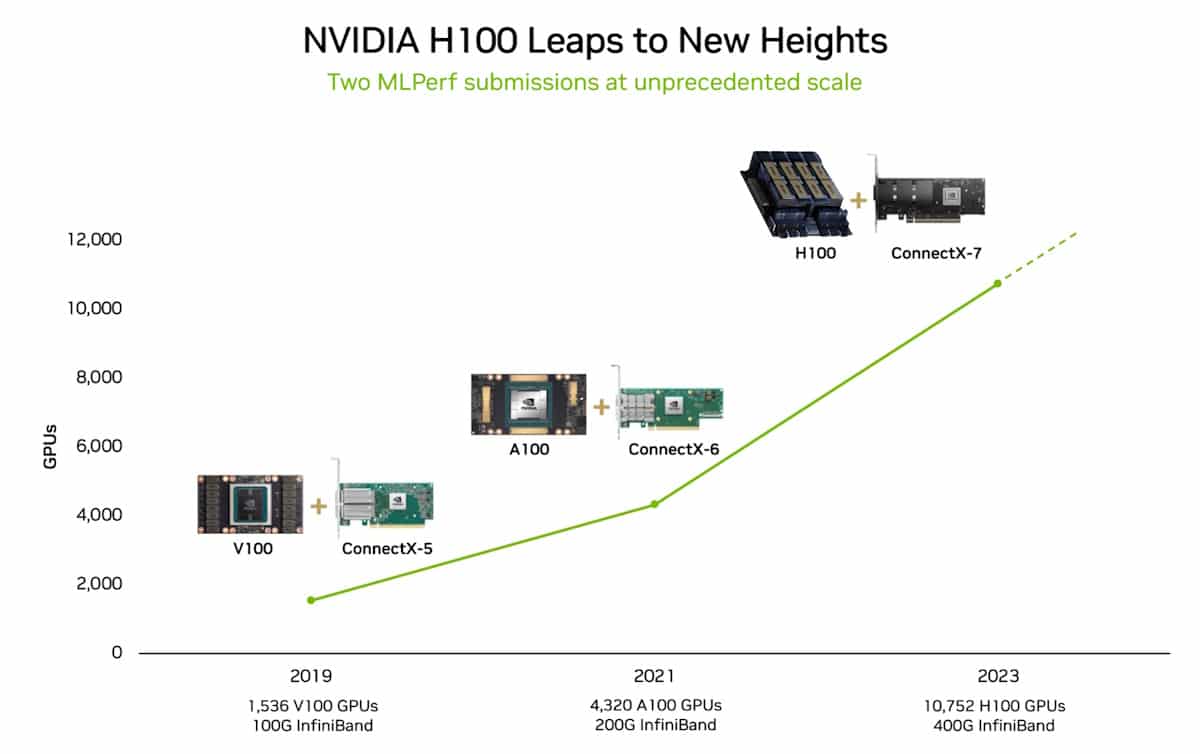

Nvidia has set new records in MLPerf AI training with its Eos AI supercomputer by scaling up and optimizing the system. The supercomputer, which initially used 4,608 H100 GPUs in DGX H100 systems, has been upgraded to 10,752 NVIDIA H100 Tensor Core GPUs connected through NVIDIA Quantum-2 InfiniBand.

This increase in processing power, combined with software optimizations, allowed Eos to achieve record-breaking performance in MLPerf, an open-source benchmark that measures the performance of machine learning workloads on real-world datasets.

One notable result was Eos’s ability to train a GPT-3 model with 175 billion parameters on one billion tokens in just 3.9 minutes. When Nvidia set the previous record less than six months ago, it took almost three times longer, at 10.9 minutes. Nvidia also reported a 93% efficiency rate in the tests, meaning the speedup came close to matching the increase in GPU count.
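The speedup and efficiency figures above can be checked with a few lines of arithmetic. This is a minimal sketch: the two training times and the 10,752-GPU count come from the article, while the GPU count of the previous record run (3,584) is an assumption that the article does not state, included only to illustrate how a scaling-efficiency figure of this kind is typically derived.

```python
def speedup(old_minutes: float, new_minutes: float) -> float:
    """How many times faster the new run is than the old one."""
    return old_minutes / new_minutes


def scaling_efficiency(speedup_factor: float, old_gpus: int, new_gpus: int) -> float:
    """Fraction of the ideal (linear) speedup actually achieved."""
    ideal_speedup = new_gpus / old_gpus
    return speedup_factor / ideal_speedup


PREV_MINUTES = 10.9   # previous record, from the article
EOS_MINUTES = 3.9     # new record, from the article
EOS_GPUS = 10_752     # from the article
PREV_GPUS = 3_584     # ASSUMPTION: not stated in the article

s = speedup(PREV_MINUTES, EOS_MINUTES)
e = scaling_efficiency(s, PREV_GPUS, EOS_GPUS)

print(f"Speedup: {s:.2f}x")            # Speedup: 2.79x
print(f"Scaling efficiency: {e:.0%}")  # Scaling efficiency: 93%
```

Under that assumption, tripling the GPU count yields roughly a 2.8x speedup, which is consistent with both the "almost three times" and the 93% figures quoted above.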

Microsoft Azure, using an H100 setup similar to Eos, came within 2% of Nvidia’s results in its own MLPerf tests.

The results support the claim CEO Jensen Huang made in 2018 about the pace of GPU improvement: GPU performance has more than doubled every two years, outpacing Moore’s Law.

**The Practical Value:**

The MLPerf benchmark training test that Nvidia excelled in uses only a portion of the full dataset that GPT-3 was trained on. Extrapolating from the MLPerf test results, Eos could potentially train the full GPT-3 model in just eight days. In contrast, using Nvidia’s previous state-of-the-art system, it would have taken around 170 days with 512 A100 GPUs.

The H100 GPUs used in Eos not only provide more power but are also up to 3.5 times more energy-efficient. This addresses concerns regarding energy consumption and AI’s carbon footprint.

The rapid improvement in AI processing power is evident when looking back at ChatGPT, which went live less than a year ago. GPT-3, the underlying model, was trained on 10,240 Nvidia V100 GPUs. By comparison, Eos has 28 times the processing power and is 3.5 times more efficient.


