Practical AI Solutions for Efficient Natural Language Processing

Challenges in Contextual Information Processing

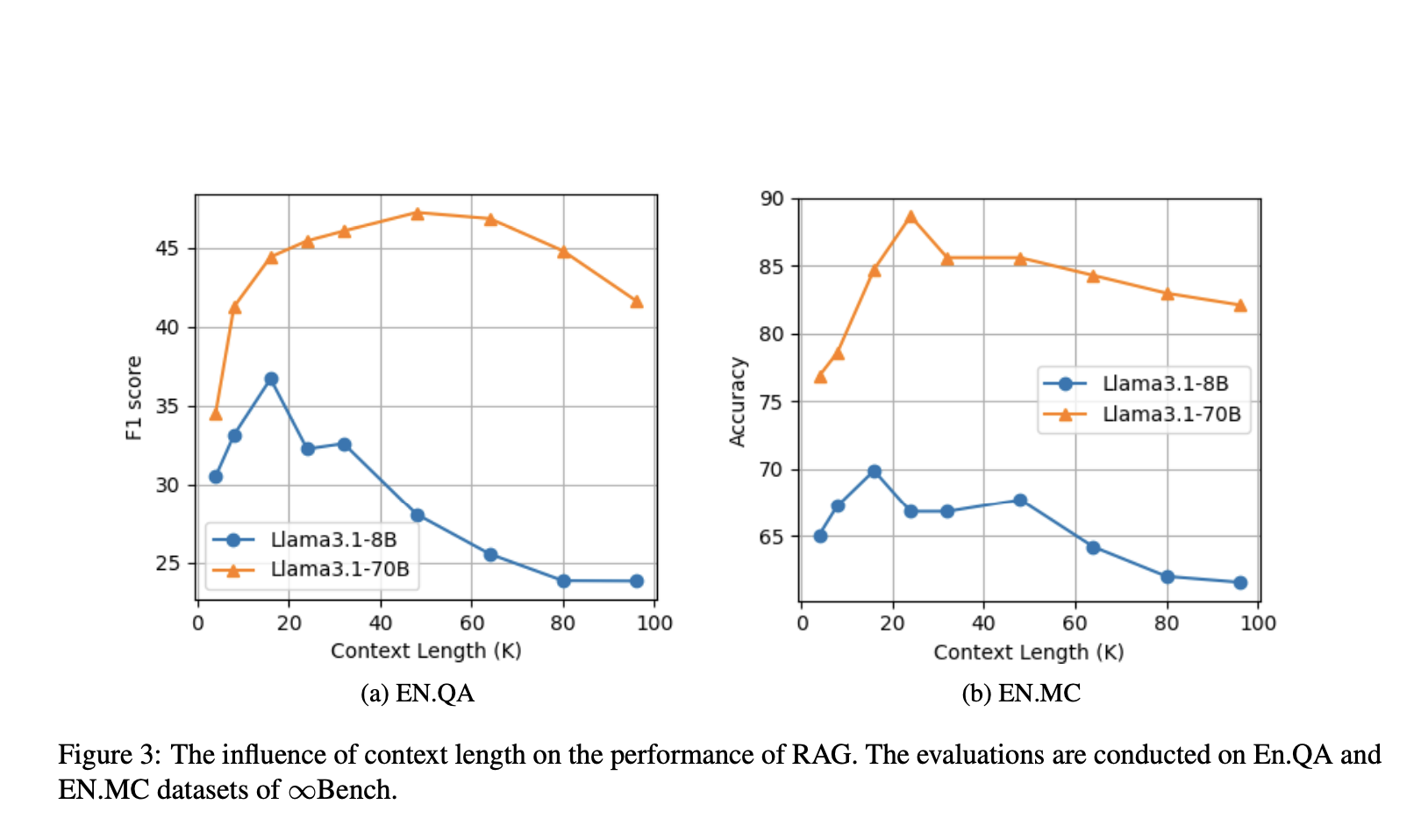

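Retrieval-augmented generation (RAG) helps large language models (LLMs) answer questions over long texts by retrieving only the passages relevant to a query and supplying them as context. This is vital for accurate responses in question-answering applications. Long-context LLMs can instead read the entire text at once, but a very long input can dilute the model's focus on the information that actually matters.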

Innovative Approach for Addressing Challenges

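NVIDIA researchers introduced order-preserve retrieval-augmented generation (OP-RAG), a method that improves answer quality in long-context scenarios. Standard RAG places the retrieved text chunks in the prompt in descending order of relevance score. OP-RAG selects chunks the same way but keeps them in the order in which they appear in the original document.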
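The order-preservation idea can be illustrated with a minimal Python sketch. It assumes a simple word-count cosine similarity as a stand-in for a real embedding model, and the function names, `chunk_size`, and `top_k` values are hypothetical choices for illustration, not NVIDIA's implementation. Both functions select the same top-scoring chunks; they differ only in the order in which those chunks would be placed in the prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; a crude stand-in for a real tokenizer (assumption).
    return re.findall(r"\w+", text.lower())


def chunk_document(text: str, chunk_size: int) -> list[str]:
    # Split the document into consecutive chunks of `chunk_size` words.
    # A chunk's list index is its position in the original document.
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors (stand-in for embeddings).
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_chunks(document: str, question: str, chunk_size: int, top_k: int):
    # Score every chunk against the question and keep the top_k indices, best first.
    chunks = chunk_document(document, chunk_size)
    q = Counter(tokenize(question))
    scores = [cosine(q, Counter(tokenize(c))) for c in chunks]
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)[:top_k]
    return chunks, ranked


def vanilla_rag_context(document: str, question: str, chunk_size: int = 4, top_k: int = 2) -> list[str]:
    # Standard RAG: selected chunks in descending relevance order.
    chunks, ranked = select_chunks(document, question, chunk_size, top_k)
    return [chunks[i] for i in ranked]


def op_rag_context(document: str, question: str, chunk_size: int = 4, top_k: int = 2) -> list[str]:
    # Order-preserving RAG: same chunks, re-sorted by position in the document.
    chunks, ranked = select_chunks(document, question, chunk_size, top_k)
    return [chunks[i] for i in sorted(ranked)]


if __name__ == "__main__":
    doc = "Paris is in France. Cats sleep all day. The Seine crosses Paris."
    question = "Which river crosses Paris?"
    print(vanilla_rag_context(doc, question))  # ['The Seine crosses Paris.', 'Paris is in France.']
    print(op_rag_context(doc, question))       # ['Paris is in France.', 'The Seine crosses Paris.']
```

Because only `top_k` chunks are passed to the model instead of the whole document, the prompt is also much shorter than a full long-context input, which is the intuition behind the token savings described below.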

Enhanced Performance and Efficiency

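In the researchers' experiments, OP-RAG produced more accurate answers than long-context LLMs that process the full input without retrieval, while feeding the model substantially fewer tokens. This combination of higher answer quality and lower input cost makes it attractive for real-world applications.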

Breakthrough in Natural Language Processing

OP-RAG offers a promising way around the limitations of long-context LLMs, enabling more coherent and contextually relevant answers.

Evolve Your Company with AI

Use OP-RAG for Competitive Advantage

Stay competitive by using OP-RAG to improve long-context question answering with LLMs and to rethink your work processes with AI.

AI Implementation Strategies

Identify automation opportunities, define KPIs, select suitable AI solutions, and implement gradually for successful AI integration.
