Practical Solutions for Efficient Large Language Model Training

Challenges in Large Language Model Development

Large language models (LLMs) require extensive computational resources and training data, leading to substantial costs.

Addressing Resource-Intensive Training

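Researchers are exploring methods to reduce these costs without compromising model performance. Two of the main ones are pruning, which removes parts of a trained network, and knowledge distillation, which trains a smaller student model to reproduce the outputs of a larger teacher model.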
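As a rough illustration of what structured pruning means, the sketch below removes whole hidden neurons from a tiny two-layer network instead of zeroing individual weights. The importance score (L2 norm of each neuron's incoming weights), the function names, and the toy matrices are all assumptions made for this example; they are not taken from NVIDIA's method.

```python
import math

def neuron_importance(w_in):
    """Score each hidden neuron by the L2 norm of its incoming weights.

    This magnitude-based score is an assumption for the sketch, not a
    criterion taken from the article.
    """
    return [math.sqrt(sum(x * x for x in row)) for row in w_in]

def prune_hidden_neurons(w_in, w_out, keep):
    """Structured pruning: drop whole hidden neurons, keeping the top `keep`.

    w_in:  hidden x inputs matrix (row i feeds hidden neuron i)
    w_out: outputs x hidden matrix (column i reads hidden neuron i)
    """
    scores = neuron_importance(w_in)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:keep])  # restore original neuron order
    pruned_in = [w_in[i] for i in kept]
    pruned_out = [[row[i] for i in kept] for row in w_out]
    return pruned_in, pruned_out, kept

# Toy layer with 4 hidden neurons, 2 inputs, 1 output (hypothetical values).
w_in = [[0.9, -1.2], [0.01, 0.02], [0.5, 0.4], [-0.03, 0.0]]
w_out = [[1.0, 2.0, 3.0, 4.0]]
pruned_in, pruned_out, kept = prune_hidden_neurons(w_in, w_out, keep=2)
print(kept, pruned_out)
```

Because entire rows and columns are removed, the pruned layer is a smaller dense layer that runs on ordinary hardware without sparse kernels, which is the practical appeal of structured over unstructured pruning.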

Novel Approach by NVIDIA

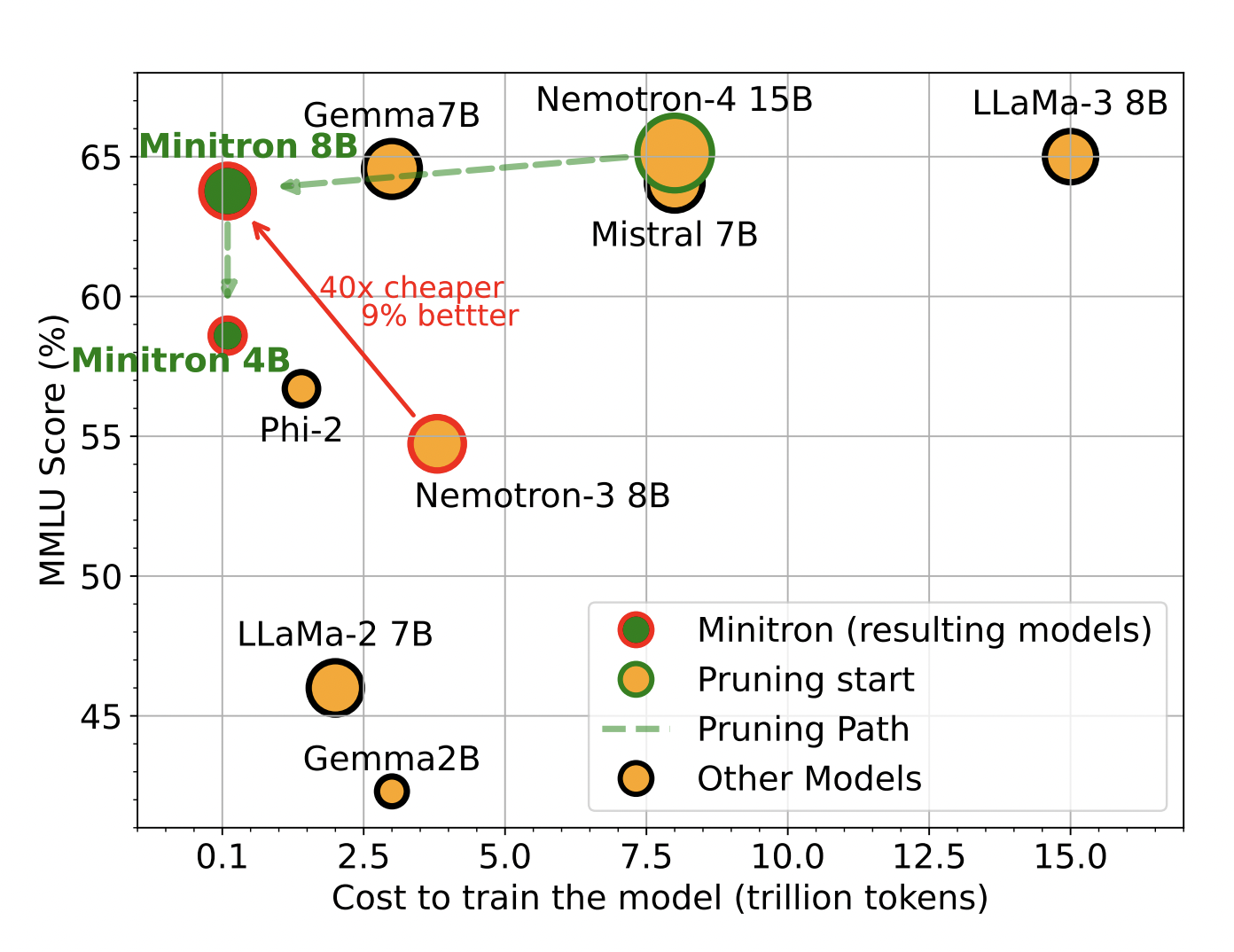

NVIDIA has introduced a method that combines structured pruning with knowledge distillation: an existing LLM is pruned, and the pruned model is then retrained through distillation rather than trained from scratch, resulting in significant cost and time savings.
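The distillation step can be pictured with a minimal loss function. The sketch below uses a common textbook formulation, the KL divergence between temperature-softened teacher and student output distributions. The temperature value, the `T**2` scaling, and the function names are assumptions for illustration and may differ from the loss NVIDIA actually uses.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    A generic distillation loss, assumed here for illustration only.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits for one token position over a 3-word vocabulary.
teacher = [2.0, 0.5, -1.0]
close_student = [1.9, 0.6, -1.1]
far_student = [-1.0, 0.5, 2.0]
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student whose logits track the teacher's gets a loss near zero, while one that ranks the tokens differently is penalized more heavily, so minimizing this loss pulls the pruned model back toward the behavior of the larger one.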

Performance Evaluation and Model Availability

The proposed method achieved a 2-4× reduction in model size while maintaining comparable performance. The resulting Minitron models are publicly available on Hugging Face.

Conclusion and Future Implications

NVIDIA’s approach shows that model performance can be maintained or even improved while sharply reducing computational costs, paving the way for more accessible and efficient NLP applications.
