Practical AI Tools for Ensuring Model Reliability and Security

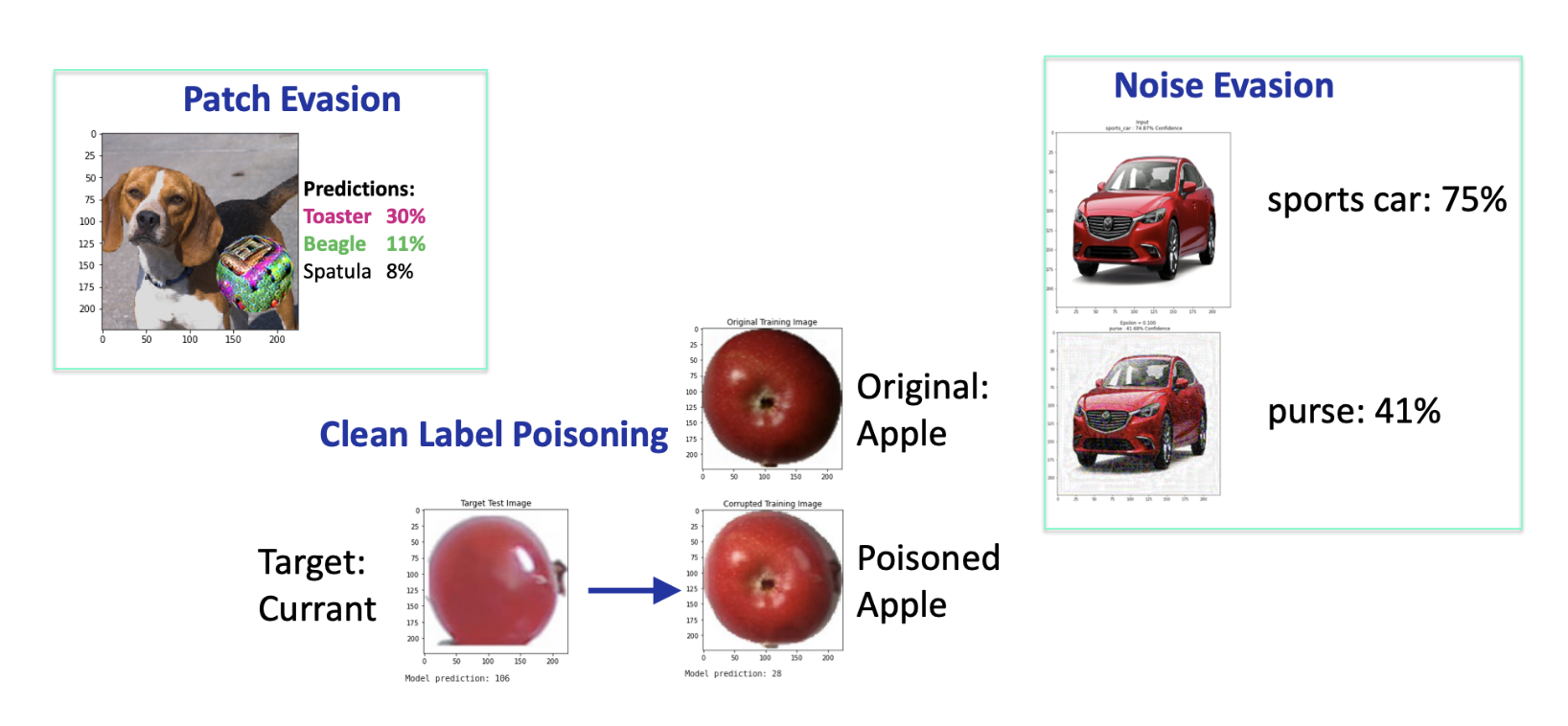

The rapid advancement and widespread adoption of AI systems have brought numerous benefits, but also significant risks. AI systems are susceptible to attacks that can lead to harmful consequences. Building reliable AI models is difficult because their inner workings are often opaque and because they are vulnerable to adversarial attacks such as evasion, poisoning, and oracle attacks. Evasion attacks perturb inputs at inference time to cause misclassification, poisoning attacks corrupt training data to degrade the learned model, and oracle attacks repeatedly query a deployed model to extract information about the model or its training data. These threats call for robust methods to evaluate and mitigate them.
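To make the idea of an evasion attack concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a standard evasion technique, applied to a toy logistic-regression classifier. The weights, input, and `epsilon` budget are made-up illustrative values, and this is not how Dioptra itself implements attacks. It only shows how a small, bounded change to each input feature can flip a model's prediction.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm_perturb(w, b, x, y, epsilon):
    """FGSM: move each feature by epsilon in the direction that increases
    the binary cross-entropy loss of the true label y."""
    p = predict_proba(w, b, x)
    # For logistic regression with BCE loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


# Toy model and input (illustrative values only).
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1          # correctly classified as class 1
x_adv = fgsm_perturb(w, b, x, y, epsilon=0.4)
print(predict_proba(w, b, x) > 0.5, predict_proba(w, b, x_adv) > 0.5)  # → True False
```

Each feature moves by at most `epsilon`, yet the predicted class changes. Real evaluations apply the same principle to deep networks, where the gradient comes from automatic differentiation rather than a closed-form expression.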

Addressing Trustworthiness and Security with Dioptra

Existing methods for evaluating AI security and trustworthiness tend to focus on specific attacks or defenses without considering the broader range of possible threats. They can also lack reproducibility, traceability, and compatibility, which makes it difficult to compare results across studies and applications. To address these challenges, the National Institute of Standards and Technology (NIST) has developed Dioptra, a comprehensive software platform for evaluating the trustworthy characteristics of artificial intelligence (AI) models. Dioptra supports the Measure function of the NIST AI Risk Management Framework by providing tools to assess, analyze, and track AI risks, and it aims to overcome the limitations of existing methods by offering a standardized platform for evaluating the trustworthiness of AI systems.
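Measuring AI risk ultimately means reducing evaluations to comparable numbers. As a hedged illustration (these are common robustness metrics, not necessarily the exact ones Dioptra reports), the sketch below summarizes an attack's effect from three hypothetical lists: true labels, predictions on clean inputs, and predictions on adversarially perturbed inputs.

```python
from typing import Sequence


def accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def robustness_report(clean_preds: Sequence[int],
                      adv_preds: Sequence[int],
                      labels: Sequence[int]) -> dict:
    """Summarize how much an attack degrades a classifier."""
    clean_acc = accuracy(clean_preds, labels)
    adv_acc = accuracy(adv_preds, labels)
    # Attack success rate: share of originally correct inputs the attack flipped.
    correct = [i for i, (p, y) in enumerate(zip(clean_preds, labels)) if p == y]
    flipped = sum(adv_preds[i] != labels[i] for i in correct)
    asr = flipped / len(correct) if correct else 0.0
    return {
        "clean_accuracy": clean_acc,
        "adversarial_accuracy": adv_acc,
        "attack_success_rate": asr,
    }


# Hypothetical predictions for five test inputs.
labels = [0, 1, 1, 0, 1]
clean_preds = [0, 1, 1, 0, 0]  # 4 of 5 correct
adv_preds = [1, 1, 0, 0, 0]    # 2 of 5 correct after the attack
print(robustness_report(clean_preds, adv_preds, labels))
# → {'clean_accuracy': 0.8, 'adversarial_accuracy': 0.4, 'attack_success_rate': 0.5}
```

Reporting the attack success rate alongside both accuracies separates how good the model is from how fragile it is, which makes results from different attacks and defenses easier to compare.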

Features and Benefits of Dioptra

Dioptra is built on a microservices architecture that allows it to be deployed at various scales, from a local laptop to distributed systems with substantial computational resources. The platform handles experiment jobs with a Redis queue and Docker containers, which keeps it modular and scalable. Its plugin system allows existing Python packages to be integrated and new functionality to be developed, promoting extensibility. Because of this modular design, users can combine different datasets, models, attacks, and defenses to build comprehensive evaluations. Its distinguishing features are reproducibility and traceability: Dioptra creates snapshots of the resources an experiment uses and tracks the full history of experiments and their inputs. An interactive web interface and multi-tenant deployment further improve usability by letting users share and reuse components.
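The combination of modular components with snapshot-based traceability can be sketched in a few lines. This is a minimal illustration under stated assumptions: the component dictionaries, `snapshot_id`, and `ExperimentHistory` are hypothetical names invented here, not Dioptra's API or schema, and jobs are only recorded rather than dispatched to a queue. The sketch expands every dataset × model × attack × defense combination into a job and content-hashes each input, so an identical configuration always maps to the same identifier.

```python
import hashlib
import itertools
import json
from dataclasses import dataclass, field


def snapshot_id(resource: dict) -> str:
    """Content-address a resource: identical inputs always yield the same ID."""
    canonical = json.dumps(resource, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


@dataclass
class ExperimentHistory:
    """Append-only log linking each job to the exact snapshots it used."""
    records: list = field(default_factory=list)

    def record(self, job: dict) -> str:
        # Snapshot every component, then derive the job ID from those snapshot IDs.
        inputs = {role: snapshot_id(cfg) for role, cfg in job.items()}
        job_id = snapshot_id(inputs)
        self.records.append({"job_id": job_id, "inputs": inputs})
        return job_id


# Hypothetical component configurations (not Dioptra's actual schema).
datasets = [{"name": "mnist", "split": "test"}]
models = [{"arch": "lenet", "seed": 0}, {"arch": "resnet18", "seed": 0}]
attacks = [{"name": "fgsm", "epsilon": 0.1},
           {"name": "pgd", "epsilon": 0.1, "steps": 10}]
defenses = [{"name": "none"}, {"name": "jpeg_compression", "quality": 75}]

history = ExperimentHistory()
for d, m, a, df in itertools.product(datasets, models, attacks, defenses):
    history.record({"dataset": d, "model": m, "attack": a, "defense": df})

print(len(history.records))  # → 8  (1 dataset × 2 models × 2 attacks × 2 defenses)
```

Hashing a canonical JSON encoding (sorted keys, fixed separators) is what makes the identifiers stable: rerunning the same configuration produces the same job ID, so a result can always be traced back to the exact inputs that produced it.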

Value of Dioptra for Ensuring AI Reliability and Security

In conclusion, Dioptra addresses the limitations of existing methods by enabling comprehensive assessments under diverse conditions, promoting reproducibility and traceability, and supporting compatibility between different components. By facilitating detailed evaluations of AI defenses against a wide array of attacks, it helps researchers and developers better understand and mitigate the risks associated with AI systems. This makes Dioptra a valuable tool for ensuring the reliability and security of AI across a range of applications.