The Role of Explainable AI in In Vitro Diagnostics Under European Regulations

AI is increasingly important in healthcare, particularly in in vitro diagnostics (IVDs), which in Europe fall under the In Vitro Diagnostic Regulation (IVDR). To comply with these regulatory requirements, AI systems must provide explainable results, so that healthcare professionals can trust them.

Explainability and Scientific Validity in AI for In Vitro Diagnostics

To demonstrate scientific validity, AI algorithms used in IVDs must be explainable and supported by scientific evidence, so that the diagnostic method can be shown to be trustworthy and accurate.

Explainability in Analytical Performance Evaluation for AI in IVDs

Explainable AI (xAI) methods are essential in evaluating AI’s ability to process input data accurately and identify potential failures.
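As an illustration of how such a method can probe a model's handling of its inputs, here is a minimal sketch of occlusion (perturbation) analysis, one simple xAI technique: each input feature is replaced in turn by a baseline value, and the change in the model's output is recorded. The `toy_model`, its weights, and the sample values are hypothetical stand-ins, not part of any real IVD system or anything prescribed by the IVDR.

```python
from typing import Callable, List, Sequence


def occlusion_attributions(
    model: Callable[[Sequence[float]], float],
    sample: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Return, per feature, how much the model output drops when that
    feature is replaced by its baseline value."""
    reference = model(sample)
    attributions = []
    for i in range(len(sample)):
        occluded = list(sample)
        occluded[i] = baseline[i]  # hide one feature at a time
        attributions.append(reference - model(occluded))
    return attributions


def toy_model(features: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained diagnostic model (linear score)."""
    weights = [2.0, 0.0, -1.0]  # assumed weights, for illustration only
    return sum(w * x for w, x in zip(weights, features))


scores = occlusion_attributions(toy_model, [3.0, 5.0, 4.0], [0.0, 0.0, 0.0])
print(scores)  # [6.0, 0.0, -4.0]
```

In this toy case the second feature has an attribution of zero, meaning the model ignores it. If domain experts expect that feature to matter, a result like this flags a potential failure worth investigating.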

Explainability in Clinical Performance Evaluation for AI in IVDs

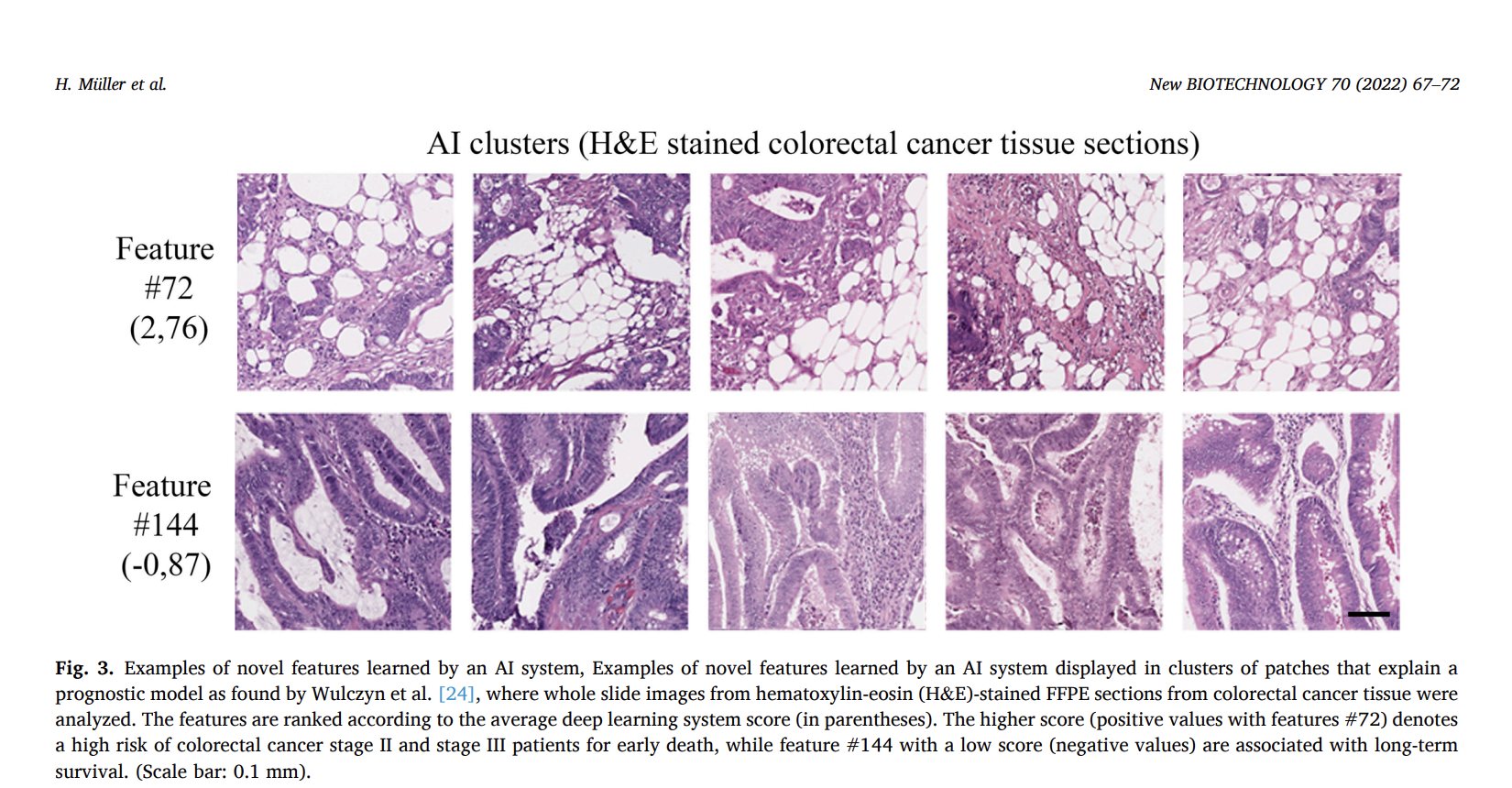

xAI methods ensure that AI supports decision-making effectively by making the AI’s decision process traceable, interpretable, and understandable for medical experts.

Conclusion

Explainability is crucial for AI solutions in IVDs to demonstrate scientific validity, analytical performance, and, where relevant, clinical performance. It is essential both for regulatory compliance and for empowering healthcare professionals to make informed decisions.
