Practical Solutions and Value of MuMA-ToM Benchmark for AI

Understanding Complex Social Interactions

To understand human interactions in real-world settings, AI must reason about other people's mental states, such as their goals and beliefs. This ability is known as Theory of Mind (ToM).

Challenges in AI Development

Current benchmarks for machine ToM mainly focus on individual mental states and lack multi-modal datasets, hindering the development of AI systems capable of understanding nuanced social interactions.

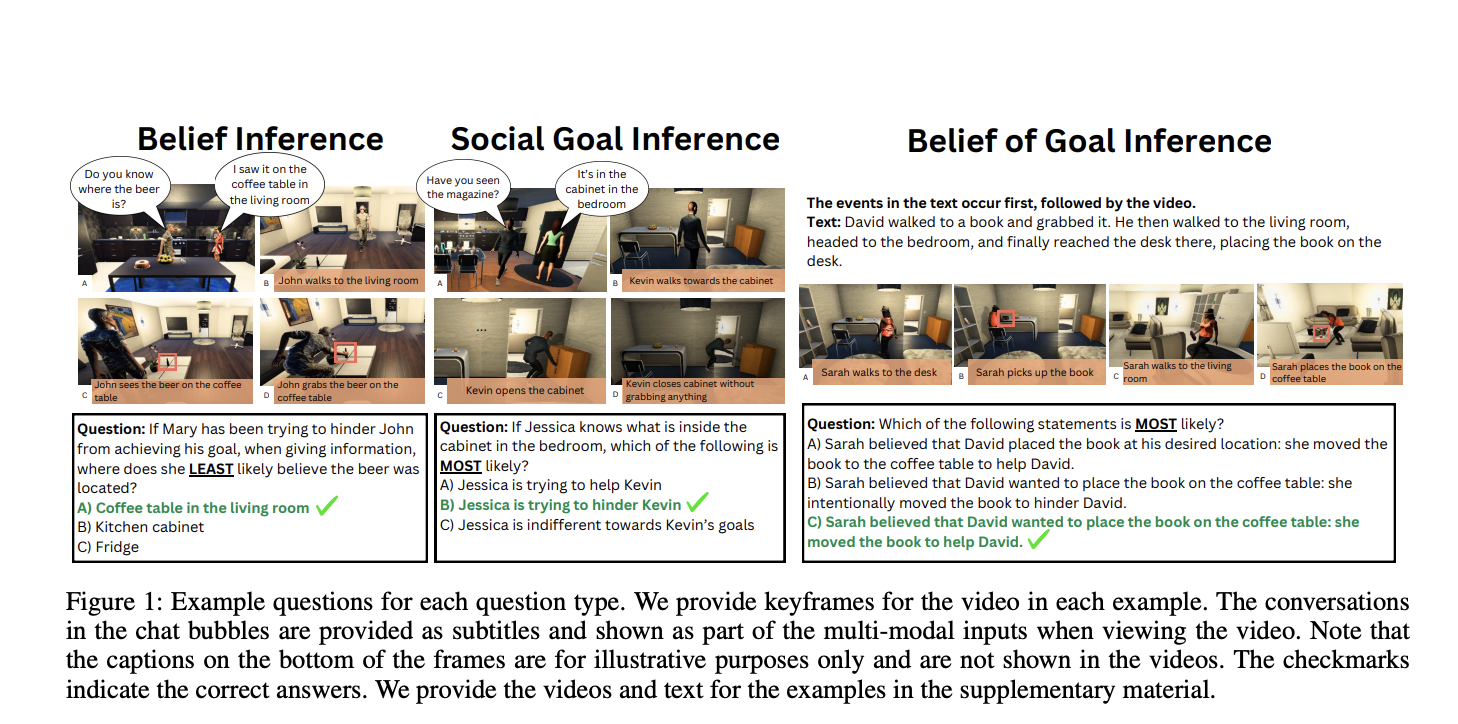

Introducing MuMA-ToM Benchmark

Researchers from Johns Hopkins University and the University of Virginia introduced MuMA-ToM, the first benchmark to assess multi-modal, multi-agent ToM reasoning in embodied interactions.

Key Features of MuMA-ToM

MuMA-ToM presents videos and text describing real-life scenarios, then poses questions about agents’ goals and their beliefs about others’ goals. Answering correctly requires a model to combine both modalities to understand multi-agent social interactions.
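As a rough illustration of how a benchmark of this kind could be scored, the sketch below represents one item and computes accuracy over a set of items. The `ToMQuestion` fields, the multiple-choice format, and the `accuracy` helper are assumptions made for illustration, not the official MuMA-ToM schema or evaluation code.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class ToMQuestion:
    """Hypothetical record for one benchmark item (not the official schema)."""
    video_path: str            # path to the video clip of the interaction
    text_context: str          # accompanying text description
    question: str              # question about an agent's goal or belief
    options: tuple[str, ...]   # candidate answers (multiple choice is assumed)
    answer_index: int          # index of the correct option


def accuracy(items: Sequence[ToMQuestion],
             predict: Callable[[ToMQuestion], int]) -> float:
    """Fraction of items for which `predict` returns the correct option index."""
    if not items:
        raise ValueError("no items to score")
    correct = sum(1 for item in items if predict(item) == item.answer_index)
    return correct / len(items)
```

Here `predict` stands in for any model under test: it receives an item (video plus text) and returns the index of the option it chooses.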

Performance and Validation

The researchers validated MuMA-ToM through human experiments. They also introduced LIMP (Language model-based Inverse Multi-agent Planning), a novel ToM model that outperformed existing models on the benchmark. LIMP integrates vision-language models and language models to infer agents' mental states.
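The general idea behind inverse planning can be sketched as a Bayesian update: given observed actions, score each hypothesis about an agent's mental state by how likely those actions would be under it. The code below is a minimal, generic sketch of that idea and is not the authors' implementation. The hypotheses, the action names, and the hand-written `TOY_LIKELIHOOD` table are all made up for illustration; the table merely stands in for likelihoods that a language model might estimate.

```python
from typing import Callable, Dict, Sequence


def infer_mental_state(
    hypotheses: Sequence[str],
    prior: Dict[str, float],
    action_likelihood: Callable[[str, str], float],
    observed_actions: Sequence[str],
) -> Dict[str, float]:
    """Posterior over hypotheses: P(h | actions) is proportional to
    P(h) times the product of P(action | h) over observed actions."""
    unnormalized = {}
    for h in hypotheses:
        score = prior[h]
        for action in observed_actions:
            score *= action_likelihood(action, h)
        unnormalized[h] = score
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observed actions are impossible under every hypothesis")
    return {h: score / total for h, score in unnormalized.items()}


# Hypothetical toy numbers: P(action | hypothesis about the agent's social goal).
TOY_LIKELIHOOD = {
    ("hands_over_cup", "helping"): 0.8,
    ("hands_over_cup", "hindering"): 0.2,
    ("hides_cup", "helping"): 0.1,
    ("hides_cup", "hindering"): 0.9,
}


def toy_likelihood(action: str, hypothesis: str) -> float:
    """Look up the made-up likelihood for an (action, hypothesis) pair."""
    return TOY_LIKELIHOOD[(action, hypothesis)]


posterior = infer_mental_state(
    hypotheses=["helping", "hindering"],
    prior={"helping": 0.5, "hindering": 0.5},
    action_likelihood=toy_likelihood,
    observed_actions=["hands_over_cup"],
)
```

With a uniform prior and a single observed `hands_over_cup` action, the "helping" hypothesis receives most of the posterior mass, because that action is more likely under it in the toy table.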

Future Development

Future work will extend the benchmark to more complex real-world scenarios, including interactions involving more agents and real-world videos.

AI Solutions for Business

To evolve your company with AI and stay competitive, identify automation opportunities, define KPIs, select an AI solution, and implement it gradually.

Connect with Us

For AI KPI management advice, connect with us at hello@itinai.com. For continuous insights into leveraging AI, follow us on Telegram at t.me/itinainews or on Twitter at @itinaicom.