Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are powerful tools for processing language, but their internal workings remain hard to interpret. Recent work using sparse autoencoders (SAEs) has uncovered interpretable features within these models. However, understanding how those features are organized across different scales is still a major challenge.

Key Challenges

- Identifying geometric patterns at a small scale.

- Understanding functional groupings at a mid-level.

- Examining overall feature distribution at a larger scale.

Limitations of Existing Methods

Earlier methods for analyzing LLM features have limitations. Analyses based on sparse autoencoders (SAEs) have been useful but often focus on only one scale. Other techniques, such as those applied to early word embeddings, identified simple relationships but missed the complexity of multi-scale interactions.

New Methodology from MIT

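Researchers at MIT propose a new way to analyze feature structures in SAEs, looking for “crystal structures” whose geometry reflects semantic relationships. The familiar example from word embeddings is the parallelogram formed by man, woman, king, and queen, where the difference between man and woman roughly matches the difference between king and queen. This approach moves beyond such basic relationships to explore more complex connections.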
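A minimal sketch of how a parallelogram-shaped relationship might be checked numerically. The function name `parallelogram_error` and the toy 3-D vectors are assumptions made for illustration; they are not the study's code or real SAE features.

```python
import numpy as np

def parallelogram_error(a, b, c, d):
    """Relative mismatch between the difference vectors (b - a) and (d - c).

    Returns 0.0 when a:b :: c:d forms a perfect parallelogram and grows
    as the two difference vectors diverge.
    """
    a, b, c, d = (np.asarray(v, dtype=float) for v in (a, b, c, d))
    diff_ab = b - a
    diff_cd = d - c
    scale = np.linalg.norm(diff_ab) + np.linalg.norm(diff_cd)
    if scale == 0.0:
        return 0.0  # all four points coincide pairwise; nothing to compare
    return float(np.linalg.norm(diff_ab - diff_cd) / scale)

# Toy vectors, invented for this example (not real feature vectors).
man = [1.0, 0.0, 0.2]
woman = [1.0, 1.0, 0.2]
king = [3.0, 0.0, 0.9]
queen = [3.0, 1.0, 0.9]

error = parallelogram_error(man, woman, king, queen)
print(error)
```

In this toy case the two difference vectors are identical, so the error is zero. With real feature vectors the match would only be approximate, and a threshold would have to be chosen.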

Addressing Distractor Features

Initial findings showed that irrelevant “distractor” features can distort the expected patterns. To overcome this, the study applies Linear Discriminant Analysis (LDA), an established statistical technique, to project out the distracting directions, allowing clearer identification of meaningful patterns.
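The idea of suppressing a distractor direction can be illustrated with a two-class Fisher LDA written in plain NumPy. This is a sketch under stated assumptions: the synthetic data, the three labelled axes, and the helper `fisher_lda_direction` are hypothetical, and the study's actual LDA setup may differ.

```python
import numpy as np

def fisher_lda_direction(x0, x1):
    """Two-class Fisher LDA direction.

    Returns the unit vector w proportional to Sw^{-1} (mu1 - mu0), which
    maximizes between-class separation relative to within-class scatter.
    """
    mu0, mu1 = x0.mean(axis=0), x1.mean(axis=0)
    # Within-class scatter: each class measured around its own mean.
    sw = (x0 - mu0).T @ (x0 - mu0) + (x1 - mu1).T @ (x1 - mu1)
    w = np.linalg.solve(sw, mu1 - mu0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(0)
n = 200
# Columns (all synthetic): [semantic axis, small-noise axis, distractor axis].
# The distractor axis has large variance but carries no class information.
noise_scale = np.array([0.1, 0.1, 5.0])
class0 = rng.normal(size=(n, 3)) * noise_scale
class1 = rng.normal(size=(n, 3)) * noise_scale + np.array([1.0, 0.0, 0.0])

w = fisher_lda_direction(class0, class1)
print(np.round(np.abs(w), 3))  # weight per axis; the distractor axis should be near zero
```

Projecting the data onto `w` (for example `class0 @ w`) keeps the axis that separates the two groups and largely ignores the high-variance distractor axis.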

Analyzing Larger-Scale Structures

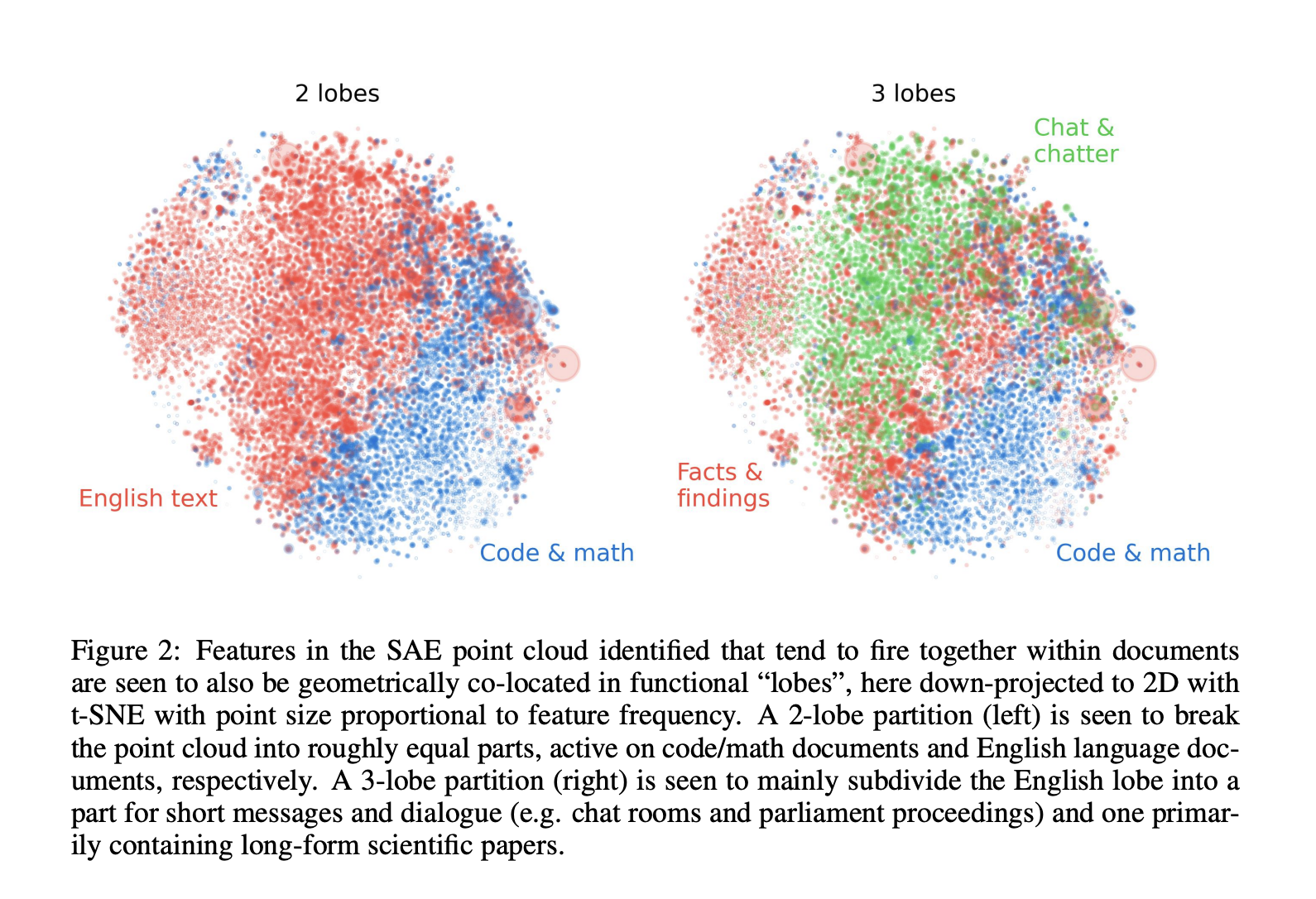

The research also investigates functional groupings within the SAE feature space, similar to how different areas of the brain specialize in different tasks. This analysis uses metrics of how often features activate together to test whether related features also cluster together geometrically.
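One way such a check could look in practice is sketched below: a co-occurrence affinity (here Jaccard similarity, chosen for illustration) is computed from a record of which features fire on which text blocks, and then compared against the cosine similarity of the features' direction vectors. The firing matrix, the direction vectors, and the 0.5 threshold are all made-up assumptions, not values from the study.

```python
import numpy as np

def jaccard_affinity(fires):
    """Pairwise Jaccard similarity between rows of a (features x blocks) firing matrix."""
    f = np.asarray(fires, dtype=bool).astype(int)
    both = f @ f.T                                  # blocks where both features fire
    counts = f.sum(axis=1)
    either = counts[:, None] + counts[None, :] - both  # blocks where at least one fires
    return np.divide(both, either, out=np.zeros(both.shape), where=either > 0)

def cosine_matrix(vectors):
    """Pairwise cosine similarity between row vectors."""
    v = np.asarray(vectors, dtype=float)
    unit = v / np.linalg.norm(v, axis=1, keepdims=True)
    return unit @ unit.T

# Toy firing record: 4 features over 6 text blocks (hypothetical).
fires = np.array([
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 0, 1, 1],
])
# Toy 2-D direction vectors for the same 4 features (hypothetical).
directions = np.array([[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]])

affinity = jaccard_affinity(fires)
cosine = cosine_matrix(directions)

off_diag = ~np.eye(len(fires), dtype=bool)
co_firing = (affinity > 0.5) & off_diag    # pairs that often fire together
rarely = (affinity <= 0.5) & off_diag      # pairs that rarely fire together

within = cosine[co_firing].mean()
across = cosine[rarely].mean()
print(within > across)
```

If features that fire together also point in similar directions, the mean cosine similarity for co-firing pairs should exceed that of the remaining pairs, as it does in this toy data.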

Insights from Galaxy-Scale Analysis

Examining the large-scale structure of the feature point cloud shows that it does not follow a simple, uniform distribution. The analysis indicates an organized, non-random distribution, similar to what is seen in biological neural networks.
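A common way to probe whether a point cloud is spread evenly in all directions is to look at the eigenvalues of its covariance matrix. The sketch below compares a synthetic isotropic cloud with a synthetic cloud whose variance decays with axis index. The data, the decay rate, and the helper `spectrum_slope` are assumptions for illustration and do not reproduce the study's measurements.

```python
import numpy as np

def spectrum_slope(points):
    """Covariance eigenvalues (descending) and the log-log slope of eigenvalue vs. rank."""
    cov = np.cov(points, rowvar=False)
    eigvals = np.linalg.eigvalsh(cov)[::-1]   # eigvalsh returns ascending order
    ranks = np.arange(1, len(eigvals) + 1)
    slope, _ = np.polyfit(np.log(ranks), np.log(eigvals), 1)
    return eigvals, slope

rng = np.random.default_rng(0)
n, d = 5000, 20

# Isotropic cloud: equal variance along every axis.
isotropic = rng.normal(size=(n, d))

# Hypothetical anisotropic cloud: variance along axis i decays as 1 / i.
scales = np.arange(1, d + 1, dtype=float) ** -0.5
anisotropic = rng.normal(size=(n, d)) * scales

eig_iso, slope_iso = spectrum_slope(isotropic)
eig_aniso, slope_aniso = spectrum_slope(anisotropic)
print(round(slope_iso, 2), round(slope_aniso, 2))
```

A slope near zero suggests a roughly isotropic cloud, while a clearly negative slope indicates that a few directions carry most of the variance.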

Findings at Different Scales

- At the atomic level: clear geometric patterns emerge, representing semantic relationships.

- At the intermediate level: functional modularity is observed, akin to brain specialization.

- At the galaxy scale: the structure shows non-random distributions with distinct characteristics.

Practical Applications of AI

Multi-scale geometric analysis of LLM features can help your business adapt and thrive with AI. Here’s how:

- Identify Automation Opportunities: Find key areas for AI integration.

- Define KPIs: Ensure measurable impacts from AI initiatives.

- Select AI Solutions: Choose tools that fit your needs and allow customization.

- Implement Gradually: Start small, gather data, and expand wisely.
