MLC LLM: Universal LLM Deployment Engine with ML Compilation

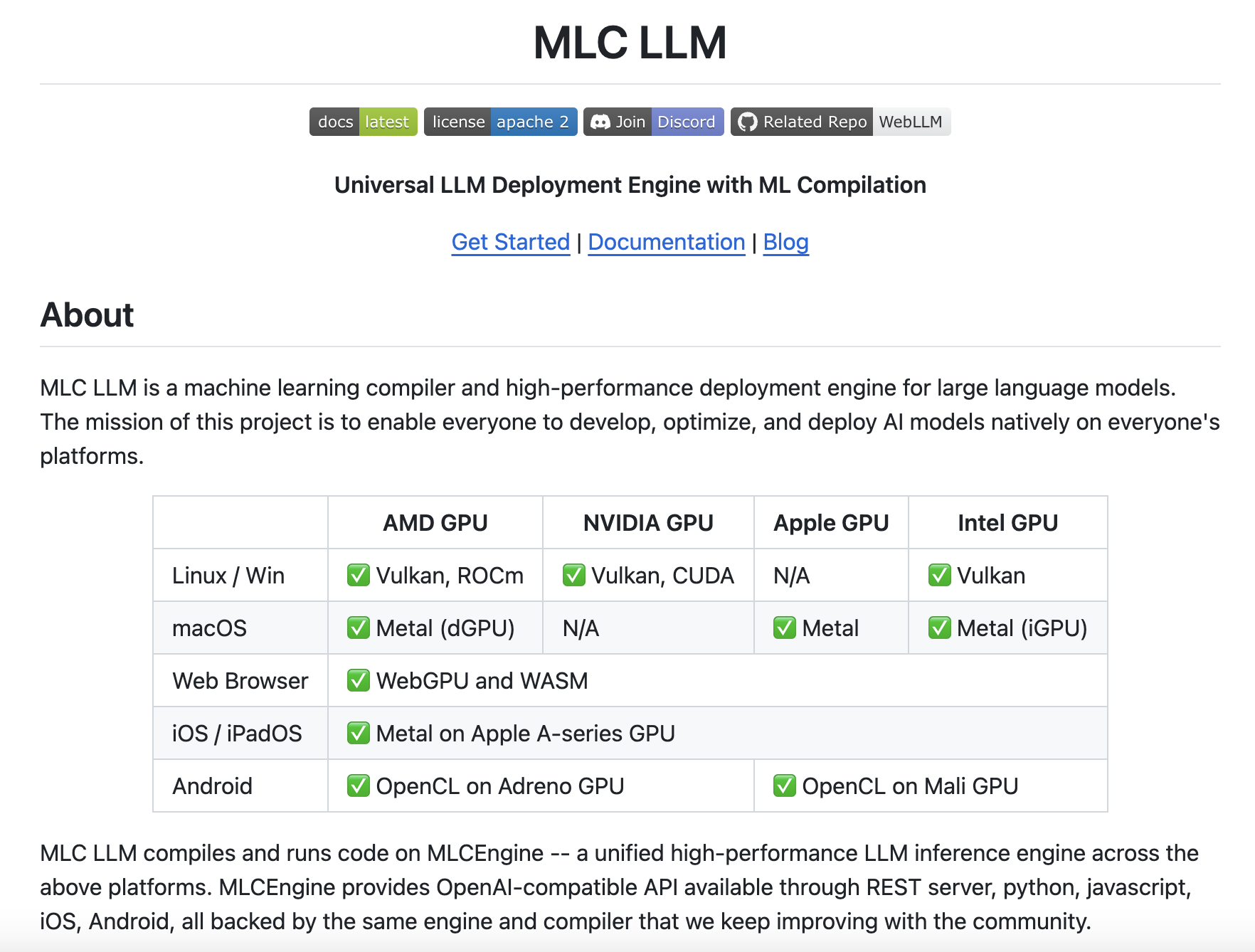

Deploying large language models (LLMs) is challenging, especially as models grow more complex and must run efficiently on a variety of platforms. MLC LLM addresses this by using machine learning compilation to optimize LLMs and deploy them natively across multiple platforms.

Key Features and Benefits

- Supports quantized models, which reduce model size and memory use with little loss in output quality

- Includes tools that automatically optimize models for diverse hardware setups

- Provides a flexible command-line interface, Python API, and REST server for easy integration
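To illustrate the REST server option from the list above, here is a minimal sketch of a client that sends a chat request over HTTP. It assumes the server exposes an OpenAI-style `/v1/chat/completions` endpoint and is listening on `127.0.0.1:8000`. The address, the model identifier, and the helper names `build_chat_request` and `ask` are assumptions made for illustration and do not come from the article.

```python
import json
import urllib.request

# Assumptions (not from the article): local server address and model identifier.
BASE_URL = "http://127.0.0.1:8000"
MODEL = "HF://mlc-ai/Llama-3-8B-Instruct-q4f16_1-MLC"  # placeholder model name


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) a POST request for an OpenAI-style chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the assistant's reply text.

    Requires a running server; the response shape is assumed to follow
    the OpenAI chat completions format.
    """
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running locally (for example, one started with a command along the lines of `mlc_llm serve <model>`), calling `ask("What is ML compilation?")` should return the model's reply as a string. Building the request separately from sending it lets you inspect or log the payload without a live server.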

MLC LLM simplifies running complex models on diverse hardware, so users can deploy LLMs without extensive machine learning or hardware optimization expertise. This enables a broader range of applications, from high-performance computing environments to edge devices.

AI Transformation with MLC LLM

- Automate key customer interaction points with AI

- Define measurable KPIs for impactful AI endeavors

- Choose customizable AI solutions that align with your business needs

- Implement AI gradually and expand usage judiciously
