Mixture-of-Experts (MoE) Architectures: Transforming Artificial Intelligence with Open-Source Frameworks

Practical Solutions and Value

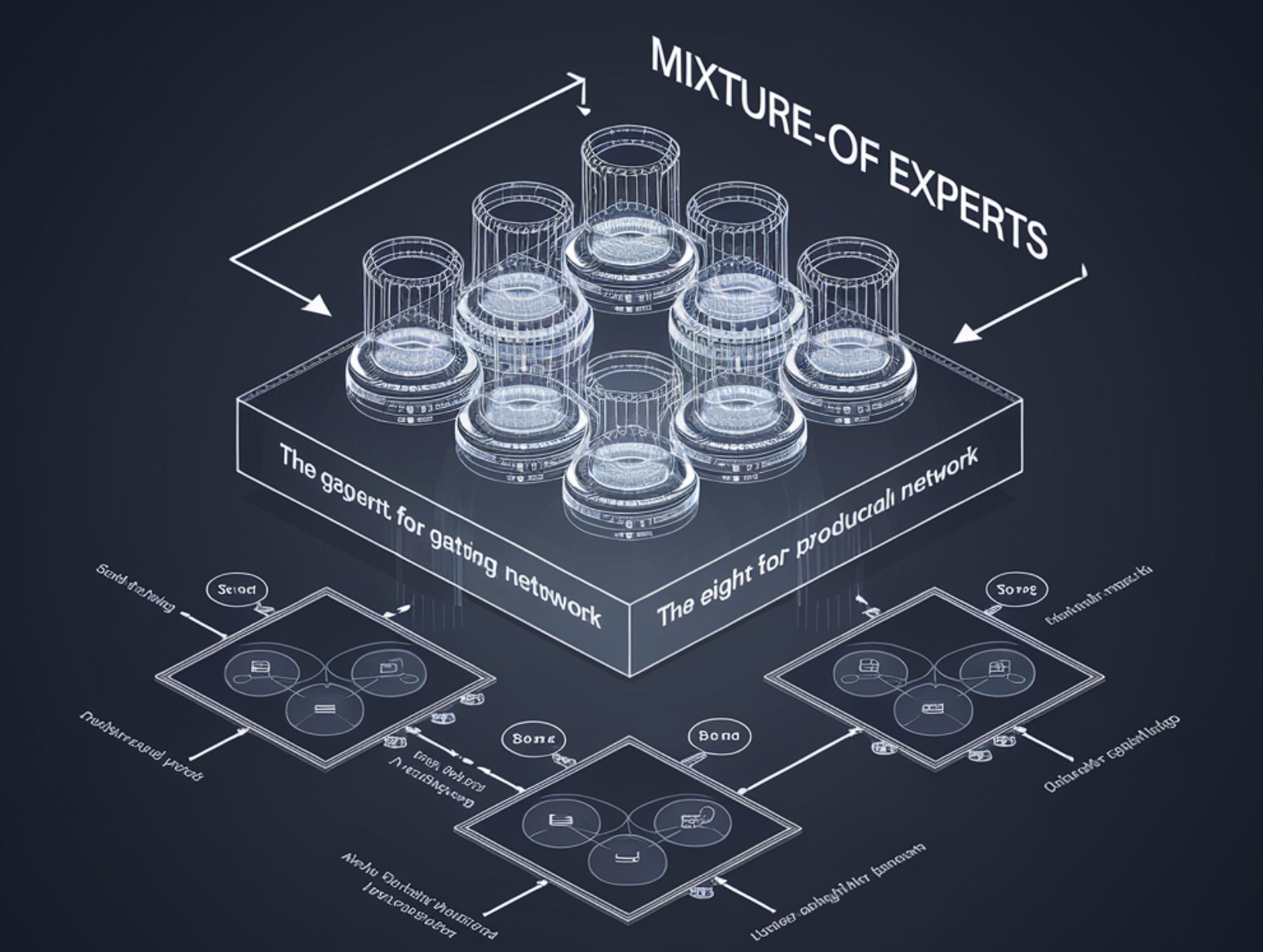

Mixture-of-Experts (MoE) architectures make efficient use of compute by activating only a few specialized sub-models (experts) for each input. Because most experts stay idle for any given input, MoE models can handle complex tasks while remaining computationally efficient. This makes them an adaptable and effective alternative to large dense AI models.
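The selective activation described above is usually implemented with a gating (router) function. The sketch below shows top-k gating for a single token in plain Python. It assumes a simple linear router (one hypothetical router vector per expert) and toy experts, so it is an illustration of the idea rather than the method used by any particular framework.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def moe_forward(
    token: Vector,
    router: Sequence[Vector],  # hypothetical: one router vector per expert
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Top-k gated mixture of experts applied to a single token."""
    # 1. Gating: score every expert, then turn the scores into probabilities.
    probs = softmax([dot(w, token) for w in router])
    # 2. Keep the k most probable experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # 3. Renormalize the selected gate values so they sum to 1.
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(token)
    for i in top:
        gate = probs[i] / norm
        expert_out = experts[i](token)
        # 4. Combine: weighted sum of the selected experts' outputs.
        out = [o + gate * v for o, v in zip(out, expert_out)]
    return out, top


# Usage: four toy "experts" that simply scale the token by a constant.
experts = [lambda t, s=s: [s * v for v in t] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], router, experts, k=2)
print(chosen)  # [0, 1]
```

Only experts 0 and 1 run for this token. The cost of the layer therefore depends on k, not on the total number of experts.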

MoE balances performance against computational cost through three properties. The first is a gating mechanism that decides which experts process each input. The second is scalability: experts can be added without increasing the compute spent on each input. The third is adaptability. Combined with efficient token routing, dynamic parallelism, and hierarchical pipelining, this design makes MoE especially useful in applications such as natural language processing (NLP).
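Token routing also has to respect how many tokens each expert can process per batch. The source does not say which scheme each framework uses. The sketch below shows one common approach, Switch-Transformer-style top-1 routing with a fixed expert capacity. The function name and the capacity formula are illustrative assumptions, not any framework's API.

```python
import math
from typing import Dict, List, Sequence, Tuple


def route_with_capacity(
    logits: Sequence[Sequence[float]],  # logits[t][e]: router score of token t for expert e
    capacity_factor: float = 1.0,
) -> Tuple[Dict[int, List[int]], List[int]]:
    """Hypothetical top-1 router with a per-expert capacity limit."""
    num_tokens = len(logits)
    num_experts = len(logits[0])
    # Each expert accepts at most `capacity` tokens in this batch.
    capacity = math.ceil(capacity_factor * num_tokens / num_experts)
    assignments: Dict[int, List[int]] = {e: [] for e in range(num_experts)}
    dropped: List[int] = []
    for t, scores in enumerate(logits):
        # Top-1 routing: send the token to its highest-scoring expert.
        best = max(range(num_experts), key=lambda e: scores[e])
        if len(assignments[best]) < capacity:
            assignments[best].append(t)
        else:
            # Overflow: the MoE layer skips this token (in Switch-style
            # models it is typically carried forward by the residual path).
            dropped.append(t)
    return assignments, dropped


logits = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.1, 0.9]]
print(route_with_capacity(logits, capacity_factor=1.0))
# ({0: [0, 1], 1: [3]}, [2])
```

Raising `capacity_factor` means fewer tokens are dropped, at the cost of more padding and memory per expert. In practice, routers are usually also trained with a load-balancing objective so that tokens spread more evenly across experts.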

The growing popularity of MoE has led to open-source frameworks such as OpenMoE, ScatterMoE, MegaBlocks, Tutel, SE-MoE, HetuMoE, FastMoE, DeepSpeed-MoE, Fairseq, and Mesh. These frameworks make large-scale experimentation and deployment practical, offering faster model training, better memory efficiency, and improved scalability.

AI Solutions for Your Company

If you want to evolve your company with AI, Mixture-of-Experts (MoE) architectures can redefine the way you work. They offer strong scalability and efficiency, making it possible to build larger, more complex models without a proportional increase in the compute spent on each input.
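A little arithmetic shows why MoE models can grow without a matching rise in per-input compute. The sketch assumes each expert is a standard two-matrix feed-forward block with biases ignored, and that the small router weights are left out. The sizes in the usage lines are illustrative, not taken from any specific model.

```python
from typing import Tuple


def moe_param_counts(d_model: int, d_ff: int, num_experts: int, top_k: int) -> Tuple[int, int]:
    """Return (total expert parameters, expert parameters active per token)."""
    # Assumed expert shape: d_model -> d_ff -> d_model, biases and router omitted.
    per_expert = 2 * d_model * d_ff
    total = num_experts * per_expert  # parameters that must be stored
    active = top_k * per_expert       # parameters actually used for one token
    return total, active


print(moe_param_counts(1024, 4096, num_experts=8, top_k=2))   # (67108864, 16777216)
print(moe_param_counts(1024, 4096, num_experts=16, top_k=2))  # (134217728, 16777216)
```

Doubling the number of experts doubles the stored parameters. The parameters used per token stay fixed at `top_k` experts' worth. Memory requirements still grow with the total, which is one reason frameworks focus on memory efficiency and parallelism.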

To leverage AI in your company, identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. For advice on AI KPI management and ongoing insights into leveraging AI, contact us at hello@itinai.com or follow us on Telegram (t.me/itinainews) or Twitter (@itinaicom).

Discover how AI can redefine your sales processes and customer engagement by exploring solutions at itinai.com.