Understanding MoDE: A New Approach in Imitation Learning

Challenges with Current Models

Diffusion Policies in Imitation Learning (IL) can generate diverse agent behaviors, but as these models grow larger they need more compute, which slows down both training and inference. This is a problem for real-time applications, especially on platforms such as mobile robots where computing resources are limited. Conventional diffusion policies have many parameters and require many denoising steps per action, which makes them impractical in these settings.
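
To make the cost argument concrete, here is a minimal sketch of a generic deterministic Euler sampler in the style of EDM (Karras et al.). It is not MoDE's sampler, and `euler_sample`, the toy denoiser and the noise schedule are illustrative assumptions. It shows that every denoising step is one full forward pass of the policy network, so inference latency scales with the number of steps times the cost of a single pass.

```python
import numpy as np


def euler_sample(denoiser, x_init, sigmas):
    """Deterministic Euler sampler over a decreasing noise schedule.

    Each step calls the denoiser once, so inference cost grows linearly
    with the number of steps and with the cost of one forward pass.
    """
    x = x_init
    nfe = 0  # number of function (network) evaluations
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoiser(x, sigma)
        nfe += 1
        d = (x - denoised) / sigma  # ODE direction dx/dsigma
        x = x + (sigma_next - sigma) * d
    return x, nfe


# Toy stand-in for a policy network (assumption): the demonstrator always takes
# action a_star, so the ideal denoiser returns a_star regardless of its input.
a_star = np.array([0.3, -0.1, 0.7])


def toy_denoiser(x, sigma):
    return a_star


rng = np.random.default_rng(0)
sigmas = np.append(np.geomspace(80.0, 0.002, 10), 0.0)  # 10 network calls
x0 = rng.normal(size=3) * sigmas[0]
action, nfe = euler_sample(toy_denoiser, x0, sigmas)
print(nfe)  # 10
```

Halving the number of steps or the cost of each forward pass halves the sampling cost, which is why both step count and model size matter for real-time control.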

Current Robotics Solutions

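Robotics today often relies on Transformer-based diffusion models for tasks such as Imitation Learning and robot design. However, their size and complexity make them expensive to run. Mixture-of-Experts (MoE) architectures can reduce this cost by activating only part of the network for each input, but they bring their own problems, such as expert collapse: the router sends most inputs to a few experts while the rest go underused, which can hurt performance.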
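
Expert collapse can be measured, and a common mitigation in MoE models generally is an auxiliary load-balancing loss such as the one introduced with Switch Transformers. The sketch below is a generic illustration of that loss; the function name is hypothetical, and the article does not say whether or how MoDE uses such a term.

```python
import numpy as np


def load_balancing_loss(router_probs):
    """Switch-Transformer-style auxiliary loss for a top-1 router.

    router_probs has shape (num_tokens, num_experts) with rows summing to 1.
    Returns num_experts * sum_i f_i * P_i, where f_i is the fraction of tokens
    whose top-1 choice is expert i and P_i is the mean probability given to it.
    The value is 1.0 whenever tokens are spread evenly across experts and
    reaches num_experts when every token is sent, with full confidence,
    to a single expert.
    """
    num_tokens, num_experts = router_probs.shape
    top1 = router_probs.argmax(axis=1)
    f = np.bincount(top1, minlength=num_experts) / num_tokens
    p = router_probs.mean(axis=0)
    return num_experts * float(np.sum(f * p))


balanced = np.tile(np.eye(4), (2, 1))  # 8 tokens, each expert chosen twice
collapsed = np.tile([1.0, 0.0, 0.0, 0.0], (8, 1))  # every token goes to expert 0
print(load_balancing_loss(balanced))   # 1.0
print(load_balancing_loss(collapsed))  # 4.0
```

Adding a small multiple of this value to the training loss penalizes routers that concentrate traffic on a few experts.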

Introducing MoDE

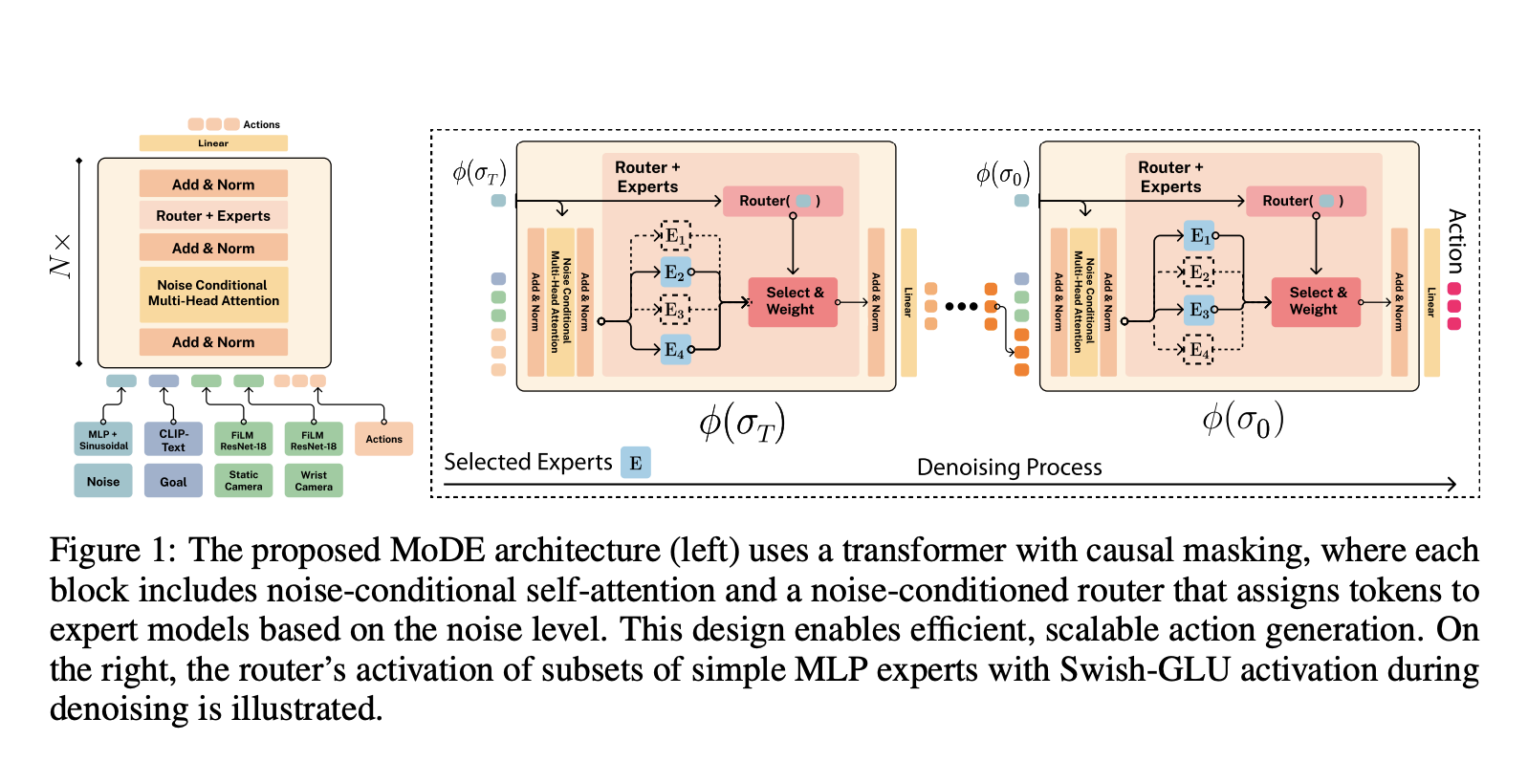

Researchers from the Karlsruhe Institute of Technology and MIT have developed MoDE, a Mixture-of-Experts (MoE) Diffusion Policy. MoDE improves efficiency through noise-conditioned routing and a noise-conditioned self-attention mechanism, which make denoising faster and more effective across noise levels. Because the router selects experts based on the current noise level, only the experts needed at each denoising step are activated, reducing both latency and computational cost.
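
A minimal sketch of noise-conditioned routing, assuming a linear router that sees only an embedding of the noise level σ and picks the top 2 of 4 small ReLU experts. All names, sizes and the sinusoidal noise embedding are hypothetical; MoDE's actual router and expert design may differ. The property it illustrates is that the router never looks at the tokens, so every token at a given noise level passes through the same experts.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, D_HIDDEN, NUM_EXPERTS, TOP_K = 16, 32, 4, 2


def noise_embedding(sigma, dim=D_MODEL):
    """Sinusoidal embedding of log(sigma) (an assumed choice of noise encoding)."""
    half = dim // 2
    freqs = np.exp(-np.log(1000.0) * np.arange(half) / half)
    angles = np.log(sigma) * freqs
    return np.concatenate([np.sin(angles), np.cos(angles)])


# Hypothetical parameters: a linear router and two-layer ReLU experts.
W_router = rng.normal(size=(D_MODEL, NUM_EXPERTS))
experts = [
    (rng.normal(size=(D_MODEL, D_HIDDEN)) / np.sqrt(D_MODEL),
     rng.normal(size=(D_HIDDEN, D_MODEL)) / np.sqrt(D_HIDDEN))
    for _ in range(NUM_EXPERTS)
]


def route(sigma):
    """Pick the top-k experts from the noise level alone; returns (indices, weights)."""
    logits = noise_embedding(sigma) @ W_router
    top = np.argsort(logits)[-TOP_K:]
    w = np.exp(logits[top] - logits[top].max())
    return top, w / w.sum()  # softmax renormalized over the selected experts


def moe_layer(tokens, sigma):
    """Apply the noise-selected experts to every token (shape: n x D_MODEL)."""
    idx, weights = route(sigma)
    out = np.zeros_like(tokens)
    for i, w in zip(idx, weights):
        W1, W2 = experts[i]
        out += w * (np.maximum(tokens @ W1, 0.0) @ W2)
    return out


tokens = rng.normal(size=(5, D_MODEL))
print(route(80.0)[0], route(0.01)[0])  # experts chosen at high vs. low noise
```

Only `TOP_K` of the `NUM_EXPERTS` expert MLPs run per step, which is where the savings in active parameters come from.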

Key Features of MoDE

- Routes inputs to experts based on the current noise level.

- Uses a frozen CLIP encoder for language instructions and FiLM-conditioned ResNets for image processing.

- Uses a transformer backbone whose experts can specialize in different phases of the denoising process.

- Introduces noise-aware positional embeddings and expert caching to reduce computational load.
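
Because routing depends only on the noise level, and a sampler visits a fixed sequence of noise levels, expert choices can be computed once ahead of time. The sketch below shows one way expert caching could be realized; it is an assumption, not MoDE's documented procedure. For each noise level in the schedule, it fuses the selected two-layer ReLU experts into a single wider MLP that reproduces the routed output exactly while skipping the router at inference. All names, sizes and the schedule are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
D_MODEL, D_HIDDEN, NUM_EXPERTS, TOP_K = 8, 16, 4, 2

# Hypothetical router and two-layer ReLU experts (random stand-ins, not MoDE's weights).
W_router = rng.normal(size=(2, NUM_EXPERTS))
experts = [
    (rng.normal(size=(D_MODEL, D_HIDDEN)), rng.normal(size=(D_HIDDEN, D_MODEL)))
    for _ in range(NUM_EXPERTS)
]


def route(sigma):
    """Top-k experts and renormalized weights, computed from the noise level only."""
    logits = np.array([np.log(sigma), 1.0]) @ W_router
    top = np.argsort(logits)[-TOP_K:]
    w = np.exp(logits[top] - logits[top].max())
    return top, w / w.sum()


def moe_forward(x, sigma):
    """Dynamic path: evaluate the router, then run each selected expert separately."""
    idx, w = route(sigma)
    return sum(
        wi * (np.maximum(x @ experts[i][0], 0.0) @ experts[i][1])
        for i, wi in zip(idx, w)
    )


def build_cache(sigmas):
    """Offline: fuse the selected experts for every noise level in a fixed schedule.

    Concatenating first-layer weights side by side and stacking the
    routing-weighted second-layer weights yields one wider MLP whose output
    equals the weighted sum of the individual experts, since ReLU acts on
    each hidden unit independently.
    """
    cache = {}
    for sigma in sigmas:
        idx, w = route(sigma)
        W1 = np.concatenate([experts[i][0] for i in idx], axis=1)
        W2 = np.concatenate([wi * experts[i][1] for i, wi in zip(idx, w)], axis=0)
        cache[sigma] = (W1, W2)
    return cache


def cached_forward(x, sigma, cache):
    """Online: a single dense MLP call with no router evaluation."""
    W1, W2 = cache[sigma]
    return np.maximum(x @ W1, 0.0) @ W2


sigmas = [float(s) for s in np.geomspace(80.0, 0.002, 10)]
cache = build_cache(sigmas)
x = rng.normal(size=(3, D_MODEL))
print(np.allclose(cached_forward(x, sigmas[0], cache), moe_forward(x, sigmas[0])))  # True
```

The fused layer performs the same multiply-adds as the selected experts; in this sketch the savings come from dropping the router evaluation and the per-expert dispatch at inference time.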

Performance Evaluation

MoDE was evaluated against other policies and architectures and outperformed them on benchmarks such as LIBERO-90 and the CALVIN language-skills benchmark. It also proved computationally efficient and generalized well, making it a strong contender in the field.

Conclusion and Future Directions

MoDE improves both performance and efficiency by combining sparse experts, transformers, and noise-conditioned routing. Because it activates only a subset of its parameters at each denoising step, it lowers computational cost, making it a promising framework for future research on scalable machine learning tasks.

Get Involved

Explore the research paper and model on Hugging Face.