Practical AI Solution: Mistral-finetune

Fine-tuning large language models efficiently is a challenge for many developers and researchers. Updating every model weight demands substantial compute, memory, and time, which puts the process out of reach for users without access to large hardware budgets.

Introducing Mistral-finetune

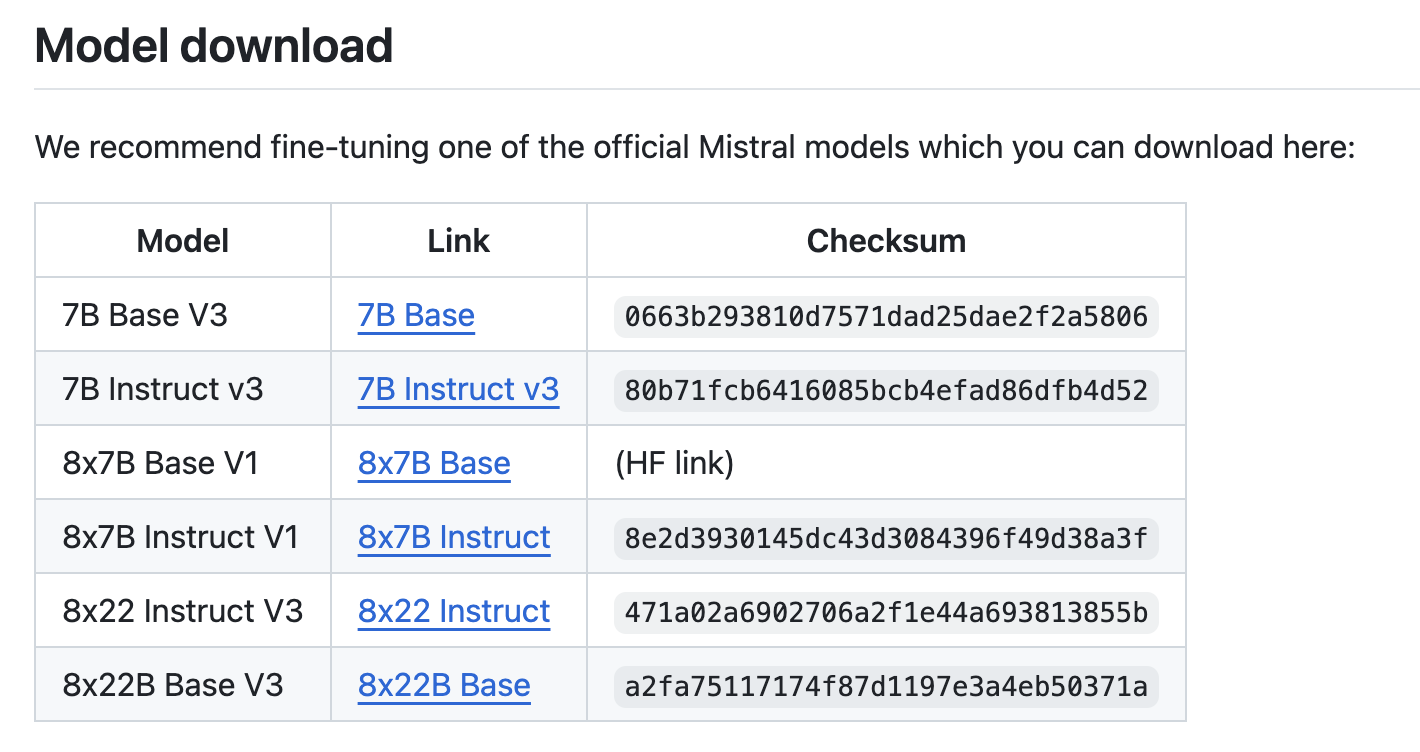

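Mistral-finetune is a lightweight codebase designed for memory-efficient and performant fine-tuning of large language models. It relies on Low-Rank Adaptation (LoRA), which freezes most of the original weights and trains only a small set of additional low-rank matrices. This reduces memory and compute requirements and makes fine-tuning accessible to a broader audience.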
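To make the LoRA idea concrete, here is a minimal sketch in plain Python. It is not taken from the Mistral-finetune codebase: the function names, the 4096-wide layer, the rank of 16, and the `alpha` scaling factor are illustrative assumptions. It shows how few parameters a low-rank update adds to one weight matrix, and how the adapted layer output is the frozen output plus a scaled low-rank correction.

```python
# Illustrative LoRA sketch; names and sizes are assumptions, not Mistral-finetune code.

def lora_param_counts(d_in: int, d_out: int, rank: int) -> tuple[int, int]:
    """Return (full, lora) trainable parameter counts for one weight matrix."""
    full = d_in * d_out            # every entry of W is trained in full fine-tuning
    lora = rank * (d_in + d_out)   # only A (rank x d_in) and B (d_out x rank) are trained
    return full, lora


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen, A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=16)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 16,777,216  lora: 131,072  ratio: 0.78%
```

In this hypothetical configuration the low-rank matrices hold under 1% of the parameters of the matrix they adapt, which is where the memory savings come from. LoRA conventionally initializes `B` to zeros, so the adapted layer starts out identical to the frozen one.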

Mistral-finetune is optimized for high-end GPUs such as the A100 or H100. A single GPU is enough for smaller models, such as the 7-billion-parameter versions, and multi-GPU setups are supported for more demanding tasks.

Fine-tuning is fast: training on a dataset like Ultra-Chat using an 8xH100 GPU cluster completes in around 30 minutes. The codebase also handles different data formats, so the same workflow can cover more than one kind of training data.
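The article does not spell out the data formats, so the following is only a sketch under stated assumptions: training data stored as JSON Lines, with plain-text samples under a `text` key and chat-style samples under a `messages` key holding a list of `role`/`content` entries. The key names and the `classify_record` helper are assumptions for illustration; check the project's README before relying on them.

```python
import json


def classify_record(line: str) -> str:
    """Classify one JSON Lines record as 'pretrain', 'instruct', or 'unknown'.

    Assumed (not confirmed by this article): plain-text samples use a "text"
    key; chat samples use a "messages" list of {"role", "content"} dicts.
    """
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return "unknown"
    if not isinstance(record, dict):
        return "unknown"
    if isinstance(record.get("text"), str):
        return "pretrain"
    messages = record.get("messages")
    if (
        isinstance(messages, list)
        and messages
        and all(isinstance(m, dict) and "role" in m and "content" in m for m in messages)
    ):
        return "instruct"
    return "unknown"


samples = [
    '{"text": "Plain text used for continued pretraining."}',
    '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]}',
    '{"prompt": "not a recognized layout"}',
]
for sample in samples:
    print(classify_record(sample))
```

Running a check like this over a dataset before launching a job is a cheap way to catch malformed records that would otherwise surface partway through training.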

In conclusion, Mistral-finetune addresses the common challenges of fine-tuning large language models by offering a more efficient and accessible approach. It significantly reduces the need for extensive computational resources, making advanced AI research and development more achievable.
