Challenges in Developing Language Models

Building language models that are both compact and capable remains a major challenge in AI. Large models demand substantial compute, which puts them out of reach for many users and for organizations with limited resources. There is strong demand for models that handle a wide range of tasks, support multiple languages, and respond quickly and accurately without sacrificing quality. Balancing performance, scalability, and accessibility matters most for local deployment, where running a model on one's own hardware also keeps data private.

Recent Developments in Language Models

Recent advances in natural language processing have produced large models such as GPT-4, Llama 3, and Qwen 2.5. These models perform well but require significant computational resources. To address this, developers are building smaller, more efficient models with techniques such as instruction fine-tuning and quantization, which make local deployment possible while retaining strong performance. Models like Gemma-2 strengthen multilingual understanding, and advances in function calling let models invoke external tools, making them adaptable to more tasks. Still, balancing performance, efficiency, and accessibility remains a key goal.
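
To make quantization concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It illustrates the general technique only; it is not the scheme Mistral AI or any particular inference runtime uses, and the names `quantize_int8` and `dequantize_int8` are hypothetical. Every weight is divided by one shared scale chosen so the largest magnitude maps to 127, then rounded to an integer, which cuts storage to 8 bits per weight at the cost of a rounding error of at most half the scale.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (illustrative sketch).

    Maps the range [-max|w|, +max|w|] onto the integer codes [-127, 127]
    using a single shared scale factor.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor so we never divide by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.0, 0.49]
    q, s = quantize_int8(w)
    w_hat = dequantize_int8(q, s)
    # The reconstruction error per weight is bounded by scale / 2.
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(q, s, max_err)
```

Production schemes typically use per-channel or per-group scales, and often 4-bit codes, to reduce both error and memory further; a single per-tensor scale simply keeps the sketch short.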

Mistral AI’s New Model: Mistral-Small

Mistral AI has released Mistral-Small-24B-Instruct-2501, a compact yet capable language model with 24 billion parameters. It is tuned for instruction-following tasks and offers strong reasoning and multilingual capabilities. It is optimized for local deployment: once quantized, it fits on a single RTX 4090 GPU or a laptop with 32GB of RAM. Its 32k-token context window lets it handle long inputs while remaining responsive, and it supports JSON-formatted output and native function calling, which makes it suitable for a wide range of applications.
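
The local-deployment claim follows from simple arithmetic on the parameter count. The sketch below estimates the memory needed for the weights alone at several precisions. It is a back-of-the-envelope assumption: it ignores the KV cache (which grows with context length, up to 32k tokens here), activations, runtime overhead, and the extra scale factors that real quantization formats store. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 24e9  # 24 billion parameters, as stated for Mistral-Small-24B
    for label, bits in [("bf16", 16), ("int8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_memory_gb(params, bits):.0f} GB of weights")
```

At bf16 the weights come to roughly 48 GB, twice the 24 GB of VRAM on an RTX 4090. At 8 bits (about 24 GB) they would fill the card with no room left for the KV cache, while 4-bit weights (about 12 GB) leave headroom, which is why quantization is what makes single-GPU or 32GB-laptop deployment practical.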

Open-Source and Flexible

Mistral-Small is released under the Apache 2.0 license, which permits both commercial and non-commercial use. The model is designed for low latency and fast responses, making it suitable for businesses and hobbyists alike. It emphasizes accessibility without compromising quality, bridging the gap between large-scale performance and resource-efficient deployment.

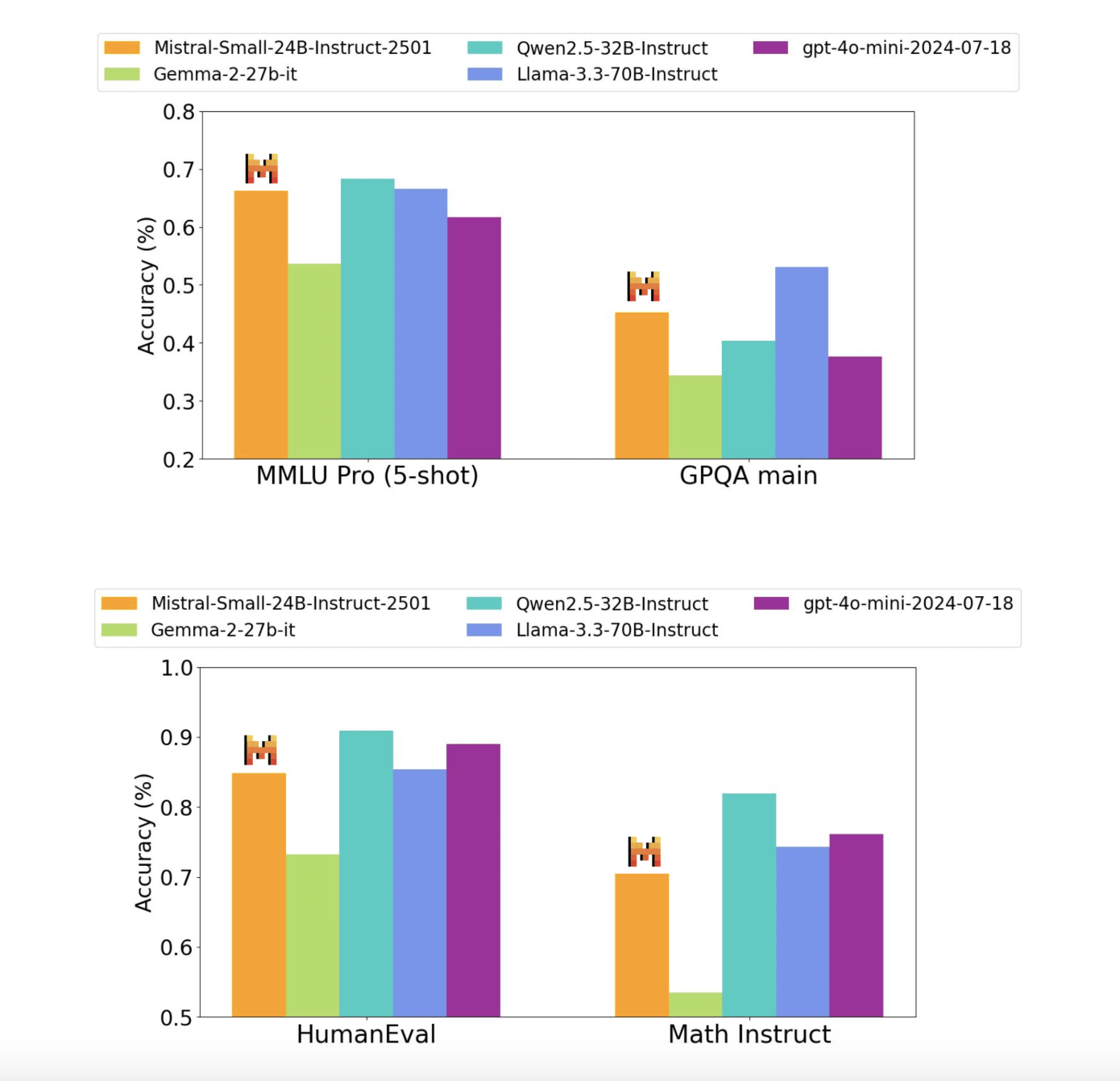

Performance and Benchmarks

Mistral-Small-24B-Instruct-2501 delivers strong results, competing across a range of tasks with larger open models such as Llama 3.3-70B and with proprietary models such as GPT-4o-mini. It scores well on reasoning, multilingual, and coding benchmarks, including 84.8% on HumanEval and 70.6% on math tasks. It also follows instructions reliably and handles long inputs well, making it a viable alternative for diverse applications.
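
The article does not say how the 84.8% HumanEval figure was measured. HumanEval (164 hand-written Python problems checked by unit tests) is conventionally reported as pass@k, most often pass@1. As a sketch under that assumption, the unbiased pass@k estimator introduced with HumanEval gives, for each problem, the probability that at least one of k samples drawn from n generations passes, given that c of the n passed; the benchmark score is the mean over all problems. The helper names below are hypothetical.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them passed the tests."""
    if n - c < k:
        # Any subset of k samples must include at least one passing sample.
        return 1.0
    # 1 - P(all k drawn samples fail) = 1 - C(n-c, k) / C(n, k)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: List[Tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k over problems; `results` holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical per-problem results: 139 solved, 25 failed, one greedy sample each.
    toy_results = [(1, 1)] * 139 + [(1, 0)] * 25
    print(f"pass@1 = {benchmark_score(toy_results):.3f}")
```

With a single greedy sample per problem (n = 1), pass@1 reduces to the fraction of problems solved, so a hypothetical run solving 139 of 164 problems would score 139/164, or about 84.8%.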

Conclusion

Mistral-Small-24B-Instruct-2501 raises the bar for efficiency and performance among smaller language models. With 24 billion parameters, it delivers competitive results in reasoning, multilingual understanding, and coding while remaining resource-efficient. Its 32k context window and support for local deployment suit it to applications ranging from chatbots to specialized tasks. Its open-source license further improves accessibility and adaptability, marking a significant step toward powerful yet compact AI models.

Explore More

For more technical details, visit Mistral AI.
