High-Performance AI Models for On-Device Use

To address the challenges of today's large-scale AI models, we need high-performance models that can run on personal devices and at the edge. Traditional models rely heavily on cloud resources, which raises privacy concerns, adds latency, and increases costs. Cloud dependency also rules out offline use.

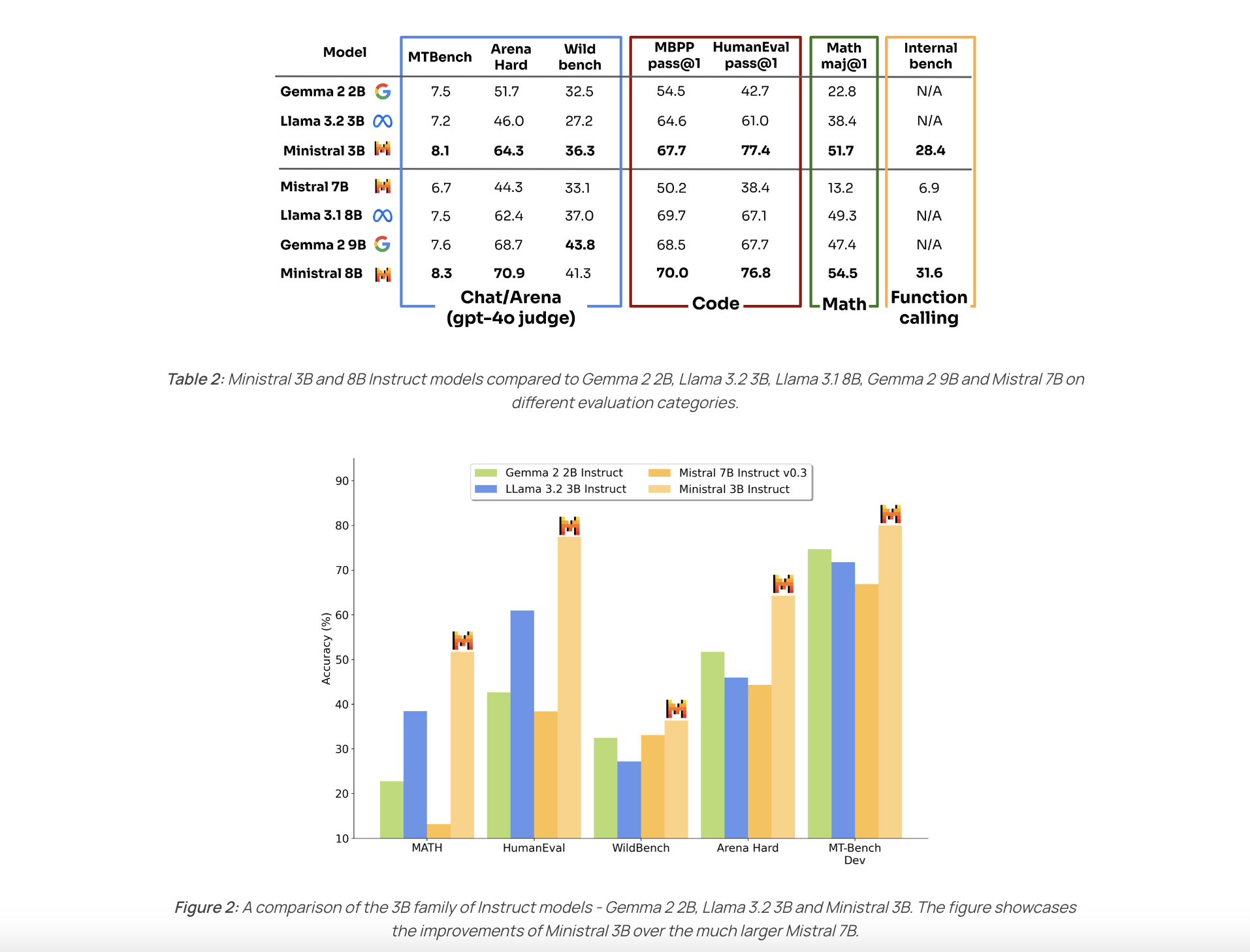

Introducing Ministral 3B and Ministral 8B

Mistral AI has launched two models, Ministral 3B and Ministral 8B, designed to run AI workloads on devices without cloud support. Known collectively as les Ministraux, they bring capable language processing directly to local hardware. This shift matters for sectors such as healthcare, industrial automation, and consumer electronics, where applications can then perform advanced tasks locally, securely, and cost-effectively. By moving inference onto the device itself, the models give applications more autonomy from network and cloud availability and more flexibility in where AI can be deployed.

Technical Details and Benefits

Les Ministraux are engineered for efficiency and performance. With 3 billion and 8 billion parameters respectively, these transformer-based models are optimized for low power consumption while maintaining high accuracy. They use pruning and quantization to reduce computational demands, which makes them suitable for resource-constrained hardware such as smartphones and embedded systems. Ministral 3B targets ultra-efficient deployment, while Ministral 8B is aimed at more complex language tasks.
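Why quantization helps on small devices comes down to simple arithmetic: weight storage is roughly the parameter count times the bytes used per weight. The sketch below assumes the nominal 3B and 8B parameter counts implied by the model names and counts weights only (no activations or KV cache). It computes that footprint at a few precisions and then shows a generic symmetric int8 quantization round trip. The article does not say which quantization scheme Mistral AI applies, so `quantize_int8` and `dequantize_int8` are hypothetical helpers illustrating the general technique, not the models' actual pipeline.

```python
import numpy as np

# Assumption: nominal parameter counts taken from the model names.
# Real checkpoints may differ slightly; activations and KV cache are ignored.
PARAM_COUNTS = {"Ministral 3B": 3e9, "Ministral 8B": 8e9}

# Bytes needed to store one weight at common precisions.
BYTES_PER_WEIGHT = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GiB."""
    return num_params * BYTES_PER_WEIGHT[precision] / 2**30


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Hypothetical helper: symmetric per-tensor int8 quantization, w ~= scale * q."""
    max_abs = float(np.max(np.abs(weights)))
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Hypothetical helper: recover approximate float32 weights from int8 codes."""
    return q.astype(np.float32) * scale


if __name__ == "__main__":
    # Weight footprint of each model at each precision.
    for name, n in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gib(n, p):.1f} GiB" for p in BYTES_PER_WEIGHT
        )
        print(f"{name} -> {sizes}")

    # Round-trip a random weight matrix through int8 and measure the error.
    rng = np.random.default_rng(0)
    w = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)
    q, scale = quantize_int8(w)
    err = float(np.max(np.abs(w - dequantize_int8(q, scale))))
    print(f"int8: {q.nbytes} bytes vs fp32: {w.nbytes} bytes, max abs error {err:.2e}")
```

Under these assumptions, Ministral 3B needs about 5.6 GiB for fp16 weights but about 1.4 GiB at 4 bits, a fourfold reduction that matters on memory-constrained hardware. The single per-tensor scale keeps the example short; practical schemes often use per-channel or per-group scales to reduce quantization error.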

Importance and Performance Results

The significance of Ministral 3B and 8B goes beyond their technical specifications. By processing information locally, they address two critical issues in edge AI: latency and data privacy. Both matter especially in fields such as healthcare and finance. Early tests show that Ministral 8B achieves notably higher task completion rates than existing on-device models while remaining efficient. The models also let developers build applications that work without a constant internet connection, keeping them reliable in remote or low-connectivity areas, which is essential for field operations and emergency response.

Conclusion

The launch of les Ministraux, Ministral 3B and 8B, is a pivotal moment for the AI industry, bringing powerful language models directly to edge devices. By optimizing these models for on-device use, Mistral AI addresses key challenges around privacy, latency, and cost, making AI more accessible across many fields. Because they deliver strong performance without relying on the cloud, the models enable secure and efficient AI at the edge. This improves user experiences and opens new possibilities for integrating AI into everyday devices and workflows.