Model2Vec: Revolutionizing NLP with Small, Efficient Models

Practical Solutions and Value:

Model2Vec, from Minish Lab, distills a small, fast static embedding model from any Sentence Transformer, giving researchers and developers a lightweight way to embed text.

Key Features:

- Creates compact models for NLP tasks without training data

- Two modes: Output for quick, compact models and Vocab for improved performance

- Applies PCA (dimensionality reduction) and Zipf weighting (down-weighting very frequent tokens) to improve embedding quality
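
The Zipf weighting step can be illustrated with a minimal sketch. It assumes the vocabulary is ordered from most to least frequent token, so a token's position stands in for its frequency rank, and it uses `log(1 + rank)` as the weight. Both choices are assumptions made for illustration and may differ from what Model2Vec does internally. The helper names are hypothetical, and PCA is left out to keep the example free of dependencies.

```python
import math


def zipf_weight(rank: int) -> float:
    """Weight for the token at 1-based frequency rank `rank` (1 = most frequent)."""
    # Assumed form: grows slowly with rank, so frequent tokens get small weights.
    return math.log(1 + rank)


def apply_zipf_weighting(table: list[list[float]]) -> list[list[float]]:
    """Scale each row of a token-embedding table by its Zipf weight.

    Assumes rows are ordered from most to least frequent token.
    """
    return [
        [zipf_weight(rank) * value for value in row]
        for rank, row in enumerate(table, start=1)
    ]


# Toy table: three tokens, two dimensions, most frequent token first.
toy_table = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
weighted = apply_zipf_weighting(toy_table)
```

With this weighting, very common tokens contribute less when token vectors are later averaged into a sentence embedding, so rarer and usually more informative tokens carry more of the signal.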

Distillation and Inference:

- Distills models in as little as 30 seconds without additional training data

- Reduces model size by a factor of 15, to about 30 MB on disk

- Runs inference up to 500 times faster than the Sentence Transformer it was distilled from
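
A rough sketch helps show where the size and speed figures can come from. A static model is essentially a lookup table of token vectors, so its size is vocabulary size times dimensions times bytes per value, and inference is a lookup followed by an average. The numbers below (a 30,522-token vocabulary, 256 dimensions, 4-byte floats) are illustrative assumptions rather than figures from the text above, and the function names are hypothetical.

```python
def table_size_mb(vocab_size: int, dims: int, bytes_per_value: int = 4) -> float:
    """Approximate size of an embedding table in megabytes (10**6 bytes)."""
    return vocab_size * dims * bytes_per_value / 1_000_000


def embed(tokens: list[str], table: dict[str, list[float]]) -> list[float]:
    """Sentence vector as the mean of the static vectors of known tokens."""
    vectors = [table[token] for token in tokens if token in table]
    if not vectors:
        raise ValueError("no known tokens to embed")
    dims = len(vectors[0])
    return [sum(vector[i] for vector in vectors) / len(vectors) for i in range(dims)]


# Assumed sizes: BERT-style vocabulary, 256 dimensions, float32 values.
size_mb = table_size_mb(30_522, 256)

# Toy lookup table; "models" is not in it and is skipped.
toy_table = {"small": [1.0, 0.0], "fast": [0.0, 1.0]}
sentence_vector = embed(["small", "fast", "models"], toy_table)
```

Under these assumptions the table comes out at roughly 31 MB, which is in line with the figure quoted above. Because no transformer forward pass is involved at inference time, encoding a sentence costs only a few lookups and additions.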

Advantages:

- Works with any Sentence Transformer model

- Handles multilingual tasks efficiently

- Easy evaluation on benchmark tasks
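
In practice, distilling and using a model with the `model2vec` Python package might look like the sketch below. It assumes the package is installed with its distillation extras and that `distill`, `save_pretrained`, and `encode` behave as described in the project's README at the time of writing. The wrapper function `distill_and_encode`, the output directory, and the example source model are illustrative choices, not part of the library.

```python
def distill_and_encode(model_name: str, sentences: list[str], out_dir: str = "m2v_model"):
    """Distill a static model from a Sentence Transformer and embed some text.

    Hypothetical wrapper; requires the `model2vec` package with distillation
    extras and downloads the source model on first use.
    """
    # Imported lazily so this module loads even without model2vec installed.
    from model2vec.distill import distill

    # Distill without any training data; 256 PCA dimensions is an assumed setting.
    m2v_model = distill(model_name=model_name, pca_dims=256)

    # Persist the distilled model so it can be reloaded later.
    m2v_model.save_pretrained(out_dir)

    # Encode the input sentences with the distilled static model.
    return m2v_model.encode(sentences)


# Example call, left commented out because it needs network access:
# vectors = distill_and_encode("BAAI/bge-base-en-v1.5", ["Small models can be fast."])
```

Swapping in a different Sentence Transformer should only require changing the `model_name` argument.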

Performance and Evaluation:

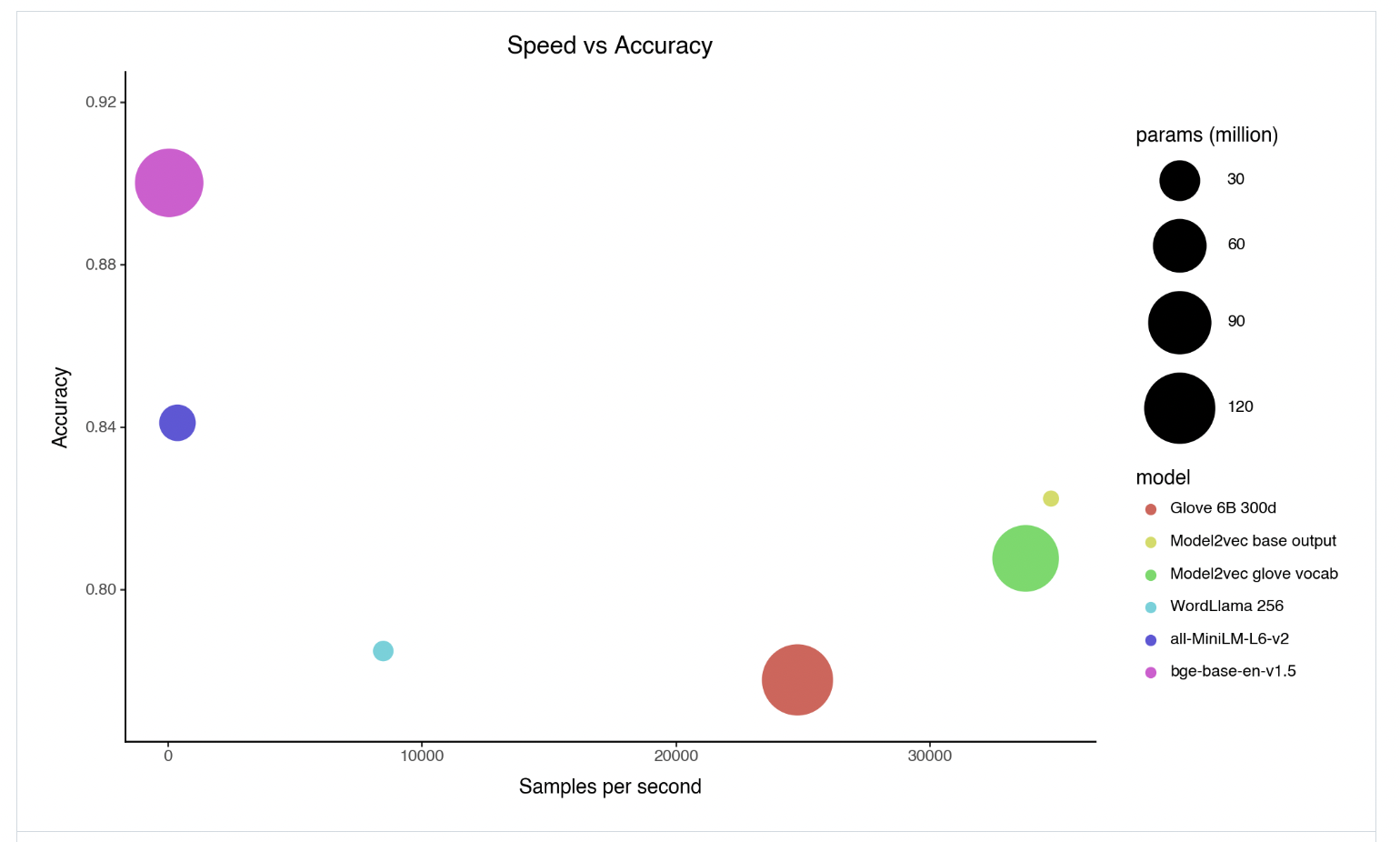

- Outperforms traditional static embedding models in benchmark evaluations

- Competitive with state-of-the-art models while being smaller and faster

Use Cases and Applications:

Model2Vec is suitable for edge devices, data-scarce environments, and tasks such as sentiment analysis and document classification.

Conclusion:

Model2Vec democratizes NLP technology with its small, efficient models, offering a scalable solution for various language-related tasks.