Practical Solutions for Long-Context LLMs

Accelerating Processing with MInference

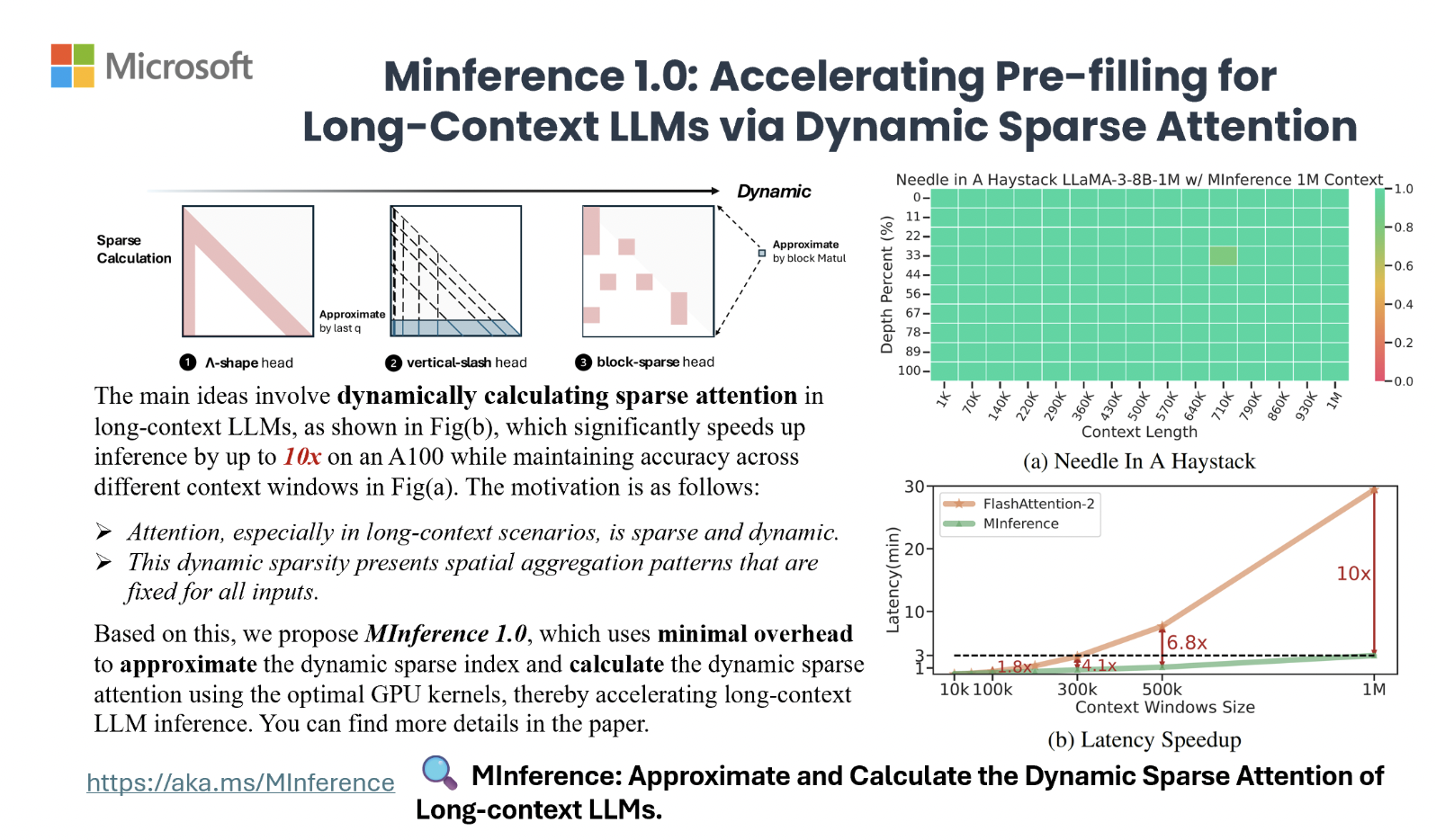

MInference optimizes sparse attention computation for GPUs, reducing latency without changing the pre-training setup or requiring fine-tuning. It achieves up to a 10x speedup, cutting the pre-filling stage for a 1-million-token prompt from 30 minutes to 3 minutes on a single A100 GPU while maintaining accuracy.

Efficiency Improvement with Sparse Attention

Sparse attention methods aim to improve Transformer efficiency by reducing the quadratic complexity of attention. They fall into two groups: static sparse patterns and dynamic sparse attention. Recent approaches that extend LLM context windows do not, on their own, reduce the high cost of inference.
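To make the quadratic cost concrete, the sketch below counts how many query-key scores a causal attention layer evaluates with full attention and with a simple static pattern (a sliding window). The function names and the window size of 4,096 are illustrative assumptions, not values taken from MInference.

```python
def dense_causal_pairs(n: int) -> int:
    """Query-key pairs scored by full causal attention over n tokens."""
    # Token i attends to tokens 0..i, so the total is 1 + 2 + ... + n.
    return n * (n + 1) // 2


def sliding_window_pairs(n: int, window: int) -> int:
    """Pairs scored when each token sees only its `window` most recent tokens, itself included."""
    if window >= n:
        return dense_causal_pairs(n)
    # The first `window` tokens see every earlier token; the rest see exactly `window` keys.
    return dense_causal_pairs(window) + (n - window) * window


# Hypothetical context lengths, chosen only to show how the gap widens.
for n in (8_192, 131_072, 1_000_000):
    dense = dense_causal_pairs(n)
    sparse = sliding_window_pairs(n, window=4_096)
    print(f"n={n:>9,}  dense={dense:.3e}  window={sparse:.3e}  ratio={dense / sparse:.1f}x")
```

The dense count grows with the square of the context length, while the windowed count grows linearly, which is why the ratio keeps increasing as prompts get longer.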

Dynamic Sparse Attention for Optimization

MInference exploits three recurring attention patterns, A-shape, Vertical-Slash, and Block-Sparse, to make sparse computation efficient on GPUs. This reduces computational overhead while maintaining accuracy in long-context LLMs.
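The three pattern names can be pictured as boolean masks over a causal attention matrix. The following is a minimal pure-Python sketch of one plausible reading of each pattern, not MInference's GPU kernels. All function names, parameters, and index choices are hypothetical; in a dynamic method the kept columns, diagonals, and blocks would be estimated per input at inference time rather than hard-coded.

```python
def a_shape_mask(n, sink, window):
    """Keep the first `sink` key tokens plus a local window before each query."""
    return [[j <= i and (j < sink or i - j < window) for j in range(n)]
            for i in range(n)]


def vertical_slash_mask(n, vertical_cols, slash_offsets):
    """Keep chosen key columns (vertical lines) and chosen diagonals (slash lines)."""
    cols, offsets = set(vertical_cols), set(slash_offsets)
    return [[j <= i and (j in cols or (i - j) in offsets) for j in range(n)]
            for i in range(n)]


def block_sparse_mask(n, block, kept_blocks):
    """Keep only the listed (query_block, key_block) tiles."""
    kept = set(kept_blocks)
    return [[j <= i and (i // block, j // block) in kept for j in range(n)]
            for i in range(n)]


def density(mask):
    """Fraction of the causal (lower-triangular) positions that the mask keeps."""
    n = len(mask)
    return sum(sum(row) for row in mask) / (n * (n + 1) // 2)


def render(mask):
    """Draw a mask with '#' for kept positions and '.' for skipped ones."""
    return "\n".join("".join("#" if kept else "." for kept in row) for row in mask)


# Toy 8-token example with hand-picked (hypothetical) indices.
examples = {
    "A-shape": a_shape_mask(8, sink=1, window=2),
    "Vertical-Slash": vertical_slash_mask(8, vertical_cols=[2], slash_offsets=[0]),
    "Block-Sparse": block_sparse_mask(8, block=4, kept_blocks=[(0, 0), (1, 1)]),
}
for name, mask in examples.items():
    print(f"{name} (density {density(mask):.2f})")
    print(render(mask))
```

Only the kept positions need to be computed, so the lower the density, the less work remains for the attention kernel.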

Performance Testing and Practical Value

MInference was tested across a range of context lengths, where it retained long-context accuracy better and processed prompts faster than competing methods. It also integrates efficiently with KV cache compression techniques and significantly reduces latency, which makes it practical for optimizing long-context language model inference.

Application and Practical Value

MInference maintains long-context performance while achieving up to a 10x speedup: for prompts of up to 1 million tokens, pre-filling latency on a single A100 GPU drops from 30 minutes to 3 minutes. Similar attention patterns may also occur in multi-modal and encoder-decoder LLMs, which suggests that the same pre-filling acceleration could apply there.

Evolve Your Company with AI

AI Solutions for Business Transformation

To put MInference to work and stay competitive, identify automation opportunities, define KPIs, select an AI solution, and roll it out gradually.
