Advancements in AI Debugging Tools: Microsoft’s Debug-Gym

The Challenges of Debugging in AI Coding Tools

Despite notable advances in code generation, AI coding tools still struggle with debugging. Debugging is central to software development, yet large language models (LLMs) often fail to identify and resolve runtime errors or logical faults. Human developers use interactive debuggers such as Python’s pdb to inspect variables and trace program execution, which gives them a deeper understanding of program flow. LLMs currently lack this kind of exploratory reasoning because they typically operate in static environments with limited dynamic feedback.
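As a minimal sketch of the runtime inspection a debugger provides, the snippet below uses only the Python standard library to recover the local variables at the point of failure. The functions `average` and `locals_at_failure` are hypothetical names chosen for illustration; in an interactive session, `pdb.post_mortem()` gives a comparable view.

```python
def average(values):
    """Deliberately fragile: divides by zero when `values` is empty."""
    total = sum(values)
    count = len(values)
    return total / count


def locals_at_failure(func, *args):
    """Run func(*args); if it raises, return the exception and the
    local variables of the innermost frame where it was raised."""
    try:
        func(*args)
    except Exception as exc:
        tb = exc.__traceback__
        # Walk down the traceback chain to the frame that actually failed.
        while tb.tb_next is not None:
            tb = tb.tb_next
        return exc, dict(tb.tb_frame.f_locals)
    return None, {}


exc, frame_locals = locals_at_failure(average, [])
print(type(exc).__name__)  # the kind of error that occurred
print(frame_locals)        # the variable values a developer would inspect
```

Seeing that `count` is zero at the failing line is the sort of evidence a static, text-only view of the code does not supply directly.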

Introducing Debug-Gym: A Solution for AI Agents

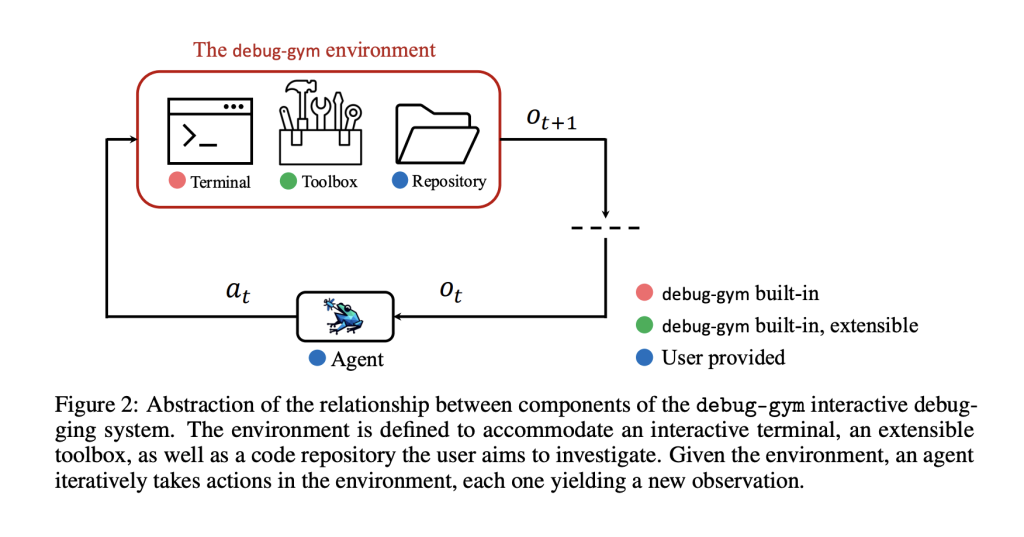

To improve the debugging capabilities of LLMs, Microsoft has released Debug-Gym, a Python-based framework for evaluating AI agents on realistic code-repair tasks. Debug-Gym provides a structured environment in which LLMs can issue debugging commands, observe runtime behavior, and refine their strategies through active exploration. Unlike models that only predict a correction from static code, agents in Debug-Gym can interact with their environment to gather evidence before proposing a fix, much as human developers do.

Key Features of Debug-Gym

- Buggy Program Scenarios: A collection of Python scripts with known syntax, runtime, and logical errors.

- Debugger Access: An interface that provides commands similar to those in Python’s pdb, allowing for stack inspection and variable evaluation.

- Observation and Action Spaces: Agents receive observations such as traceback data and respond with debugger commands or code modifications.

This modular architecture supports deterministic execution and makes it easy to swap or extend agents and debugging tools. Because Debug-Gym is open source, researchers and developers can collaborate on it and compare results.
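The observation and action loop described above can be sketched with a toy environment. This is an illustrative assumption and not Debug-Gym's actual API: `ToyRepairEnv`, `Observation`, and the `"eval"` and `"rewrite"` actions are hypothetical names invented for this example, and the agent here is a fixed script and not an LLM.

```python
import traceback
from dataclasses import dataclass

BUGGY_SOURCE = "def mean(values):\n    return sum(values) / (len(values) - 1)\n"
FIXED_SOURCE = "def mean(values):\n    return sum(values) / len(values)\n"


@dataclass
class Observation:
    text: str     # what the agent sees: a traceback, a value, or a status line
    solved: bool  # True once the test passes


class ToyRepairEnv:
    """Hypothetical stand-in for an interactive code-repair environment."""

    def __init__(self, source):
        self.source = source

    def _run_test(self):
        namespace = {}
        try:
            exec(self.source, namespace)
            result = namespace["mean"]([2, 4, 6])
            assert result == 4, f"mean([2, 4, 6]) returned {result}, expected 4"
        except Exception:
            return Observation(traceback.format_exc(), solved=False)
        return Observation("all tests passed", solved=True)

    def reset(self):
        # The initial observation is the output of the failing test.
        return self._run_test()

    def step(self, action, argument):
        if action == "eval":  # debugger-like probe: evaluate an expression
            namespace = {}
            exec(self.source, namespace)
            try:
                return Observation(repr(eval(argument, namespace)), solved=False)
            except Exception as exc:
                return Observation(f"{type(exc).__name__}: {exc}", solved=False)
        if action == "rewrite":  # code modification, followed by a re-test
            self.source = argument
            return self._run_test()
        return Observation(f"unknown action: {action}", solved=False)


# A scripted "agent": observe the failure, probe a value, then propose a fix.
env = ToyRepairEnv(BUGGY_SOURCE)
first_obs = env.reset()
probe_obs = env.step("eval", "mean([2, 4, 6])")
final_obs = env.step("rewrite", FIXED_SOURCE)
print(probe_obs.text, final_obs.text)
```

Keeping the environment behind `reset` and `step` is what makes agents and tools swappable in a design like this: a different agent only needs to produce the same kinds of actions.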

Evaluation Results and Insights

Initial evaluations using Debug-Gym indicate that AI agents that use interactive tools resolve complex bugs more successfully. Microsoft’s studies report that LLMs using debugging commands, such as variable printing and stack navigation, achieved higher accuracy and efficiency in code repairs. In a benchmark of 150 diverse bug cases, interactive agents resolved over half of the problems in fewer iterations than their static counterparts.

Debug-Gym also offers insights into agent behavior, enabling researchers to analyze tool usage patterns and identify areas where agents deviate from effective debugging strategies. This introspection supports the iterative development of agent policies and opens pathways for enhancing models with richer feedback mechanisms.
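One way such an analysis of tool usage might look is sketched below. The log format, the action names, and the `summarize` helper are assumptions made for illustration, and the three episodes are invented placeholder records, not Debug-Gym output or reported results.

```python
from collections import Counter

# Invented placeholder episodes (NOT real results): each records the
# sequence of actions an agent took and whether the bug was fixed.
episodes = [
    {"actions": ["print", "up", "print", "rewrite"], "solved": True},
    {"actions": ["rewrite", "rewrite", "rewrite"], "solved": False},
    {"actions": ["print", "rewrite"], "solved": True},
]


def summarize(episodes):
    """Return per-tool usage counts, the fraction of solved episodes,
    and the mean number of actions among solved episodes (or None)."""
    tool_counts = Counter(a for ep in episodes for a in ep["actions"])
    solved = [ep for ep in episodes if ep["solved"]]
    resolve_rate = len(solved) / len(episodes)
    mean_steps = (
        sum(len(ep["actions"]) for ep in solved) / len(solved) if solved else None
    )
    return tool_counts, resolve_rate, mean_steps


tool_counts, resolve_rate, mean_steps = summarize(episodes)
print(tool_counts.most_common(), resolve_rate, mean_steps)
```

A summary like this would flag, for instance, an episode that repeatedly rewrites code without inspecting any state, which is one form of deviation from an effective debugging strategy.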

Moreover, Debug-Gym accommodates training methodologies such as reinforcement learning from interaction histories, allowing future models to learn from both human demonstrations and structured debugging actions.

Conclusion

Debug-Gym represents a significant step forward for LLM-based coding tools, aligning AI capabilities more closely with real-world software development workflows. By supporting interactive debugging, the framework helps agents repair code more precisely in dynamic settings and establishes a foundation for training and evaluating agents through exploratory learning.

Although current systems still face challenges in grasping nuanced runtime contexts, Debug-Gym paves the way for creating agents that can systematically approach bug resolution using external tools. This transition from passive code suggestion to active problem-solving is a crucial step towards integrating LLMs into professional software development environments.
